AI winter


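In the history of artificial intelligence, an AI winter is a period of reduced funding and interest in artificial intelligence research. The process of hype, disappointment and funding cuts is common in many emerging technologies (consider the railway mania or the dot-com bubble), but the problem has been particularly acute for AI. The pattern has occurred many times:

- 1966: the failure of machine translation,
- 1970: the abandonment of connectionism,
- 1971−75: DARPA's frustration with the Speech Understanding Research program at Carnegie Mellon University,
- 1973: the large decrease in AI research in the United Kingdom in response to the Lighthill report,
- 1973−74: DARPA's cutbacks to academic AI research in general,
- 1987: the collapse of the Lisp machine market,
- 1988: the cancellation of new spending on AI by the Strategic Computing Initiative,
- 1993: expert systems slowly reaching the bottom,
- 1990s: the quiet disappearance of the fifth-generation computer project's original goals.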

The worst times for AI were 1974−80 and 1987−93. Sometimes one or the other of these periods (or some part of them) is referred to as "the" AI winter.

The term first appeared in 1984 as the topic of a public debate at the annual meeting of AAAI (then called the "American Association for Artificial Intelligence"). It was coined by analogy with the relentless spiral of a nuclear winter. The term describes a chain reaction that begins with pessimism in the AI community, followed by pessimism in the press, followed by a severe cutback in funding, followed by the end of serious research. At the meeting, Roger Schank and Marvin Minsky, two leading AI researchers who had survived the "winter" of the 1970s, warned the business community that enthusiasm for AI had spiraled out of control in the '80s and that disappointment would certainly follow. Just three years later, the billion-dollar AI industry began to collapse.

The historical episodes known as AI winters are collapses only in the perception of AI by government bureaucrats and venture capitalists. Despite the rise and fall of AI's reputation, it has continued to develop new and successful technologies. AI researcher Rodney Brooks would complain in 2002 that "there's this stupid myth out there that AI has failed, but AI is around you every second of the day." Ray Kurzweil agrees: "Many observers still think that the AI winter was the end of the story and that nothing since has come of the AI field. Yet today many thousands of AI applications are deeply embedded in the infrastructure of every industry." He adds unequivocally: "the AI winter is long since over."

During the Cold War, the US government was particularly interested in the automatic, instant translation of Russian documents and scientific reports. The government aggressively supported efforts at machine translation starting in 1954. At the outset, the researchers were optimistic. Noam Chomsky's new work in grammar was streamlining the translation process and there were "many predictions of imminent 'breakthroughs'".

However, researchers had underestimated the profound difficulty of disambiguation. In order to translate a sentence, a machine needed to have some idea what the sentence was about, otherwise it made ludicrous mistakes. An anecdotal example was "the spirit is willing but the flesh is weak." Translated back and forth with Russian, it became "the vodka is good but the meat is rotten." Similarly, "out of sight, out of mind" became "blind idiot." Later researchers would call this the commonsense knowledge problem.

By 1964, the National Research Council had become concerned about the lack of progress and formed the Automatic Language Processing Advisory Committee (ALPAC) to look into the problem. They concluded, in a famous 1966 report, that machine translation was more expensive, less accurate and slower than human translation. After spending some 20 million dollars, the NRC ended all support. Careers were destroyed and research ended.

Machine translation is still an open research problem in the 21st century.

Some of the earliest work in AI used networks or circuits of connected units to simulate intelligent behavior. Examples of this kind of work, called "connectionism", include Walter Pitts and Warren McCulloch's first description of a neural network for logic and Marvin Minsky's work on the SNARC system. In the late '50s, most of these approaches were abandoned when researchers began to explore symbolic reasoning as the essence of intelligence, following the success of programs like the Logic Theorist and the General Problem Solver.

However, one type of connectionist work continued: the study of perceptrons, invented by Frank Rosenblatt, who kept the field alive with his salesmanship and the sheer force of his personality. He optimistically predicted that the "perceptron may eventually be able to learn, make decisions, and translate languages." Mainstream research into perceptrons came to an abrupt end in 1969, when Marvin Minsky and Seymour Papert published the book Perceptrons, which was perceived as outlining the limits of what perceptrons could do.

Connectionist approaches were abandoned for the next decade or so. While important work, such as Paul Werbos's discovery of backpropagation, continued in a limited way, major funding for connectionist projects was difficult to find in the 1970s and early '80s.

The "winter" of connectionist research came to an end in the mid-'80s, when the work of John Hopfield, David Rumelhart and others revived large-scale interest in neural networks. Rosenblatt did not live to see this, however. He died in a boating accident shortly after Perceptrons was published.

In 1973, James Lighthill was asked by Parliament to evaluate the state of AI research in the United Kingdom. His report, now called the Lighthill report, criticized the utter failure of AI to achieve its "grandiose objectives." He concluded that nothing being done in AI couldn't be done in other sciences. He specifically mentioned the problem of "combinatorial explosion" or "intractability", which implied that many of AI's most successful algorithms would grind to a halt on real-world problems and were only suitable for solving "toy" versions. (John McCarthy would later write in response that "the combinatorial explosion problem has been recognized in AI from the beginning.")

The report was contested in a debate broadcast in the BBC "Controversy" series in 1973. The debate, "The general purpose robot is a mirage", held at the Royal Institution, pitted Lighthill against the team of Michie, McCarthy and Gregory.

The report led to the complete dismantling of AI research in England. AI research continued in only a few top universities (Edinburgh, Essex and Sussex). This "created a bow-wave effect that led to funding cuts across Europe," writes James Hendler. Research would not revive on a large scale until 1983, when Alvey (a research project of the British Government) began to fund AI again from a war chest of £350 million in response to the Japanese Fifth Generation Project (see below). Alvey had a number of UK-only requirements which did not sit well internationally, especially with US partners, and lost Phase 2 funding.

During the 1960s, the Defense Advanced Research Projects Agency (then known as "ARPA", now known as "DARPA") provided millions of dollars for AI research with almost no strings attached. DARPA's director in those years, J. C. R. Licklider, believed in "funding people, not projects" and allowed AI's leaders (such as Marvin Minsky, John McCarthy, Herbert Simon or Allen Newell) to spend it almost any way they liked.

This attitude changed after the passage of the Mansfield Amendment in 1969, which required DARPA to fund "mission-oriented direct research, rather than basic undirected research." Pure undirected research of the kind that had gone on in the '60s would no longer be funded by DARPA. Researchers now had to show that their work would soon produce some useful military technology. AI research proposals were held to a very high standard. The situation was not helped when the Lighthill report and DARPA's own study (the American Study Group) suggested that most AI research was unlikely to produce anything truly useful in the foreseeable future. DARPA's money was directed at specific projects with identifiable goals, such as autonomous tanks and battle management systems. By 1974, funding for AI projects was hard to find.

AI researcher Hans Moravec blamed the crisis on the unrealistic predictions of his colleagues: "Many researchers were caught up in a web of increasing exaggeration. Their initial promises to DARPA had been much too optimistic. Of course, what they delivered stopped considerably short of that. But they felt they couldn't in their next proposal promise less than in the first one, so they promised more." The result, Moravec claims, is that some of the staff at DARPA had lost patience with AI research. "It was literally phrased at DARPA that 'some of these people were going to be taught a lesson [by] having their two-million-dollar-a-year contracts cut to almost nothing!'" Moravec told Daniel Crevier.

While the autonomous tank project was a failure, the battle management system (the Dynamic Analysis and Replanning Tool) proved to be enormously successful, saving billions in the first Gulf War, repaying all of DARPA's investment in AI and justifying DARPA's pragmatic policy.

DARPA was deeply disappointed with researchers working on the Speech Understanding Research (SUR) program at Carnegie Mellon University. DARPA had hoped for, and felt it had been promised, a system that could respond to voice commands from a pilot. The SUR team had developed a system which could recognize spoken English, but only if the words were spoken in a particular order. DARPA felt it had been duped and, in 1974, it cancelled a three-million-dollar-a-year grant.

Many years later, successful commercial speech recognition systems would use the technology developed by the Carnegie Mellon team (such as hidden Markov models) and the market for speech recognition systems would reach $4 billion by 2001.

In the 1980s, a form of AI program called an "expert system" was adopted by corporations around the world. The first commercial expert system was XCON, developed at Carnegie Mellon for Digital Equipment Corporation, and it was an enormous success: it was estimated to have saved the company 40 million dollars over just six years of operation. Corporations around the world began to develop and deploy expert systems and by 1985 they were spending over a billion dollars on AI, most of it on in-house AI departments. An industry grew up to support them, including software companies like Teknowledge and Intellicorp (KEE), and hardware companies like Symbolics and Lisp Machines Inc., which built specialized computers, called Lisp machines, that were optimized to process the programming language Lisp, the preferred language for AI.

In 1987 (three years after Minsky and Schank's prediction) the market for specialized AI hardware collapsed. Workstations by companies like Sun Microsystems offered a powerful alternative to Lisp machines, and companies like Lucid offered a Lisp environment for this new class of workstations. The performance of these general workstations became an increasingly difficult challenge for Lisp machines. Companies like Lucid and Franz LISP offered increasingly more powerful versions of Lisp. For example, benchmarks were published showing workstations maintaining a performance advantage over Lisp machines. Later desktop computers built by Apple and IBM would also offer a simpler and more popular architecture to run Lisp applications on. By 1987 they had become more powerful than the more expensive Lisp machines. The desktop computers had rule-based engines such as CLIPS available. These alternatives left consumers with no reason to buy an expensive machine specialized for running Lisp. An entire industry worth half a billion dollars was replaced in a single year.

Commercially, many Lisp machine companies failed, like Symbolics, Lisp Machines Inc., Lucid Inc., etc. Other companies, like Texas Instruments and Xerox, abandoned the field. However, a number of customer companies (that is, companies using systems written in Lisp and developed on Lisp machine platforms) continued to maintain systems. In some cases, this maintenance involved the assumption of the resulting support work.

By the early 1990s, the earliest successful expert systems, such as XCON, proved too expensive to maintain. They were difficult to update, they could not learn, they were "brittle" (i.e., they could make grotesque mistakes when given unusual inputs), and they fell prey to problems (such as the qualification problem) that had been identified years earlier in research in nonmonotonic logic. Expert systems proved useful, but only in a few special contexts. Another problem dealt with the computational hardness of truth maintenance efforts for general knowledge. KEE used an assumption-based approach (see NASA, TEXSYS) supporting multiple-world scenarios that was difficult to understand and apply.

The few remaining expert system shell companies were eventually forced to downsize and search for new markets and software paradigms, like case-based reasoning or universal database access. The maturation of Common Lisp saved many systems, such as ICAD, which found application in knowledge-based engineering. Other systems, such as Intellicorp's KEE, moved from Lisp to a C++ (variant) on the PC and helped establish object-oriented technology (including providing major support for the development of UML).

In 1981, the Japanese Ministry of International Trade and Industry set aside $850 million for the Fifth generation computer project. Their objectives were to write programs and build machines that could carry on conversations, translate languages, interpret pictures, and reason like human beings. By 1991, the impressive list of goals penned in 1981 had not been met. Indeed, some of them had not been met in 2001. As with other AI projects, expectations had run much higher than what was actually possible.

In 1983, in response to the fifth generation project, DARPA again began to fund AI research through the Strategic Computing Initiative (SCI). As originally proposed, the project would begin with practical, achievable goals, which even included strong AI as a long-term objective. The program was under the direction of the Information Processing Technology Office (IPTO) and was also directed at supercomputing and microelectronics. By 1985 it had spent $100 million and 92 projects were underway at 60 institutions, half in industry, half in universities and government labs. AI research was generously funded by the SCI.

Jack Schwarz, who ascended to the leadership of IPTO in 1987, dismissed expert systems as "clever programming" and cut funding to AI "deeply and brutally," "eviscerating" SCI. Schwarz felt that DARPA should focus its funding only on those technologies which showed the most promise. In his words, DARPA should "surf", rather than "dog paddle", and he felt strongly AI was not "the next wave". Insiders in the program cited problems in communication, organization and integration. A few projects survived the funding cuts, including a pilot's assistant and an autonomous land vehicle (which were never delivered) and the DART battle management system, which (as noted above) was successful.

Many researchers in AI today deliberately call their work by other names, such as informatics, machine learning, knowledge-based systems, business rules management, cognitive systems, intelligent systems, intelligent agents or computational intelligence, to indicate that their work emphasizes particular tools or is directed at a particular sub-problem. Although this may be partly because they consider their field to be fundamentally different from AI, it is also true that the new names help to procure funding by avoiding the stigma of false promises attached to the name "artificial intelligence."

Nick Bostrom explains: "A lot of cutting edge AI has filtered into general applications, often without being called AI because once something becomes useful enough and common enough it's not labeled AI anymore." Rodney Brooks adds: "there's this stupid myth out there that AI has failed, but AI is around you every second of the day."

Technologies developed by AI researchers have achieved commercial success in a number of domains, such as machine translation, data mining, industrial robotics, logistics, speech recognition, banking software, medical diagnosis and Google's search engine.

Fuzzy logic controllers have been developed for automatic gearboxes in automobiles: the 2006 Audi TT, VW Touareg and VW Caravelle feature the DSP transmission, which utilizes fuzzy logic, and a number of Škoda variants (such as the Škoda Fabia) also currently include a fuzzy-logic-based controller. Camera sensors widely utilize fuzzy logic to enable focus.

Heuristic search and data analytics are both technologies that have developed from the evolutionary computing and machine learning subdivision of the AI research community. Again, these techniques have been applied to a wide range of real-world problems with considerable commercial success.

In the case of heuristic search, ILOG has developed a large number of applications, including deriving job shop schedules for many manufacturing installations (http://findarticles.com/p/articles/mi_m0KJI/is_7_117/ai_n14863928). Many telecommunications companies also make use of this technology in the management of their workforces; for example, BT Group has deployed heuristic search in a scheduling application that provides the work schedules of 20,000 engineers.

Data analytics technology, utilizing algorithms for the automated formation of classifiers that were developed in the supervised machine learning community in the 1990s (for example, TDIDT, support vector machines, neural nets, IBL), is now used pervasively by companies for marketing survey targeting and discovery of trends and features in data sets.

As of 2007, DARPA is soliciting AI research proposals under a number of programs, including the Grand Challenge Program, Cognitive Technology Threat Warning System (CT2WS), "Human Assisted Neural Devices (SN07-43)", "Autonomous Real-Time Ground Ubiquitous Surveillance-Imaging System (ARGUS-IS)" and "Urban Reasoning and Geospatial Exploitation Technology (URGENT)".

Perhaps best known is DARPA's Grand Challenge Program, which has developed fully automated road vehicles that can successfully navigate real-world terrain in a fully autonomous fashion.

DARPA has also supported programs on the Semantic Web, with a great deal of emphasis on intelligent management of content and automated understanding. However, James Hendler, the manager of the DARPA program at the time, expressed some disappointment with the outcome of the program.

The EU-FP7 funding program provides financial support to researchers within the European Union. Currently it funds AI research under the Cognitive Systems: Interaction and Robotics Programme (€193m), the Digital Libraries and Content Programme (€203m) and the FET programme (€185m).

in that decade from both government and industry.

James Hendler, in 2008, observed that AI funding both in the EU and the US was being channeled more into applications and cross-breeding with traditional sciences, such as bioinformatics. This shift away from basic research is happening at the same time as there's a drive towards applications of, for example, the semantic web. Invoking the pipeline argument (see underlying causes), Hendler saw a parallel with the '80s winter and warned of a coming AI winter in the '10s.

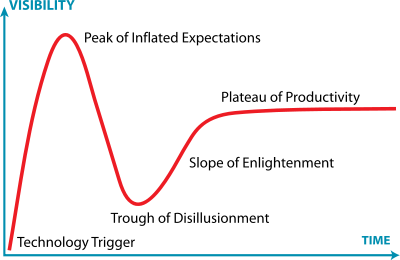

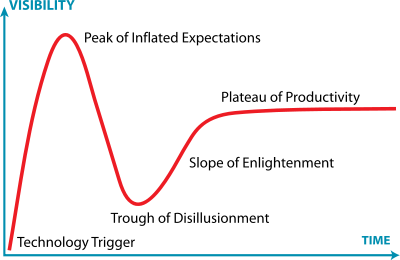

The AI winters can be partly understood as a sequence of over-inflated expectations and subsequent crash seen in stock markets and exemplified by the railway mania and dot-com bubble. The hype cycle concept for new technology looks at perception of technology in more detail. It describes a common pattern in development of new technology, where an event, typically a technological breakthrough, creates publicity which feeds on itself to create a "peak of inflated expectations" followed by a "trough of disillusionment" and later recovery and maturation of the technology. The key point is that since scientific and technological progress can't keep pace with the publicity-fueled increase in expectations among investors and other stakeholders, a crash must follow. AI technology seems to be no exception to this rule.

which involve lecturers from Philosophy to Mechanical Engineering. AI is therefore prone to the same problems other types of interdisciplinary research face. Funding is channeled through the established departments and during budget cuts, there will be a tendency to shield the "core contents" of each department, at the expense of interdisciplinary and less traditional research projects.

A version of the pipeline argument was put forward by James Hendler in 2008, claiming that the fall of expert systems in the late '80s was not due to an inherent and unavoidable brittleness of expert systems, but to funding cuts in basic research in the '70s. These expert systems advanced in the '80s through applied research and product development, but by the end of the decade, the pipeline had run dry and expert systems were unable to produce improvements that could have overcome the brittleness and secured further funding.

, with the Lisp machine makers being marginalized. Expert systems were carried over to the new desktop computers by, for instance, CLIPS, so the fall of the Lisp machine market and the fall of expert systems are strictly speaking two separate events. Still, the failure to adapt to such a change in the outside computing milieu is cited as one reason for the '80s AI winter.

History of artificial intelligence

The history of artificial intelligence began in antiquity, with myths, stories and rumors of artificial beings endowed with intelligence or consciousness by master craftsmen; as Pamela McCorduck writes, AI began with "an ancient wish to forge the gods."...

, an AI winter is a period of reduced funding and interest in artificial intelligence

Artificial intelligence

Artificial intelligence is the intelligence of machines and the branch of computer science that aims to create it. AI textbooks define the field as "the study and design of intelligent agents" where an intelligent agent is a system that perceives its environment and takes actions that maximize its...

research. The process of hype

Hype cycle

A hype cycle is a graphic representation of the maturity, adoption and social application of specific technologies. The term was coined by Gartner, Inc.-Rationale:...

, disappointment and funding cuts are common in many emerging technologies (consider the railway mania

Railway Mania

The Railway Mania was an instance of speculative frenzy in Britain in the 1840s. It followed a common pattern: as the price of railway shares increased, more and more money was poured in by speculators, until the inevitable collapse...

or the dot-com bubble

Dot-com bubble

The dot-com bubble was a speculative bubble covering roughly 1995–2000 during which stock markets in industrialized nations saw their equity value rise rapidly from growth in the more...

), but the problem has been particularly acute for AI. The pattern has occurred many times:

- 1966: the failure of machine translationMachine translationMachine translation, sometimes referred to by the abbreviation MT is a sub-field of computational linguistics that investigates the use of computer software to translate text or speech from one natural language to another.On a basic...

, - 1970: the abandonment of connectionismConnectionismConnectionism is a set of approaches in the fields of artificial intelligence, cognitive psychology, cognitive science, neuroscience and philosophy of mind, that models mental or behavioral phenomena as the emergent processes of interconnected networks of simple units...

, - 1971−75: DARPA's frustration with the Speech Understanding ResearchSpeech recognitionSpeech recognition converts spoken words to text. The term "voice recognition" is sometimes used to refer to recognition systems that must be trained to a particular speaker—as is the case for most desktop recognition software...

program at Carnegie Mellon UniversityCarnegie Mellon UniversityCarnegie Mellon University is a private research university in Pittsburgh, Pennsylvania, United States....

, - 1973: the large decrease in AI research in the United Kingdom in response to the Lighthill reportLighthill reportThe Lighthill report is the name commonly used for the paper "Artificial Intelligence: A General Survey" by James Lighthill, published in Artificial Intelligence: a paper symposium in 1973....

, - 1973−74: DARPA's cutbacks to academic AI research in general,

- 1987: the collapse of the Lisp machineLisp machineLisp machines were general-purpose computers designed to efficiently run Lisp as their main software language. In a sense, they were the first commercial single-user workstations...

market, - 1988: the cancellation of new spending on AI by the Strategic Computing InitiativeStrategic Computing InitiativeThe United States government's Strategic Computing Initiative funded research into advanced computer hardware and artificial intelligence from 1983 to 1993...

, - 1993: expert systems slowly reaching the bottom,

- 1990s: the quiet disappearance of the fifth-generation computer project's original goals,

The worst times for AI were 1974−80 and 1987−93. Sometimes one or the other of these periods (or some part of them) is referred to as "the" AI winter.

The term first appeared in 1984 as the topic of a public debate at the annual meeting of AAAI

Association for the Advancement of Artificial Intelligence

The Association for the Advancement of Artificial Intelligence or AAAI is an international, nonprofit, scientific society devoted to advancing the scientific understanding of the mechanisms underlying thought and intelligent behavior and their embodiment in machines...

(then called the "American Association of Artificial Intelligence"). It was coined by analogy with the relentless spiral of a nuclear winter

Nuclear winter

Nuclear winter is a predicted climatic effect of nuclear war. It has been theorized that severely cold weather and reduced sunlight for a period of months or even years could be caused by detonating large numbers of nuclear weapons, especially over flammable targets such as cities, where large...

. It is a chain reaction that begins with pessimism in the AI community, followed by pessimism in the press, followed by a severe cutback in funding, followed by the end of serious research. At the meeting, Roger Schank

Roger Schank

Roger Schank is an American artificial intelligence theorist, cognitive psychologist, learning scientist, educational reformer, and entrepreneur.-Academic career:...

and Marvin Minsky

Marvin Minsky

Marvin Lee Minsky is an American cognitive scientist in the field of artificial intelligence , co-founder of Massachusetts Institute of Technology's AI laboratory, and author of several texts on AI and philosophy.-Biography:...

—two leading AI researchers who had survived the "winter" of the 1970s—warned the business community that enthusiasm for AI had spiraled out of control in the '80s and that disappointment would certainly follow. Just three years later, the billion-dollar AI industry began to collapse.

The historical episodes known as AI winters are collapses only in the perception of AI by government bureaucrats and venture capitalists. Despite the rise and fall of AI's reputation, it has continued to develop new and successful technologies. AI researcher Rodney Brooks

Rodney Brooks

Rodney Allen Brooks is the former Panasonic professor of robotics at the Massachusetts Institute of Technology. Since 1986 he has authored a series of highly influential papers which have inaugurated a fundamental shift in artificial intelligence research...

would complain in 2002 that "there's this stupid myth out there that AI has failed, but AI is around you every second of the day." Ray Kurzweil agrees: "Many observers still think that the AI winter was the end of the story and that nothing since has come of the AI field. Yet today many thousands of AI applications are deeply embedded in the infrastructure of every industry." He adds unequivocally: "the AI winter is long since over."

Machine translation and the ALPAC report of 1966

During the Cold WarCold War

The Cold War was the continuing state from roughly 1946 to 1991 of political conflict, military tension, proxy wars, and economic competition between the Communist World—primarily the Soviet Union and its satellite states and allies—and the powers of the Western world, primarily the United States...

, the US government was particularly interested in the automatic, instant translation of Russian

Russian language

Russian is a Slavic language used primarily in Russia, Belarus, Uzbekistan, Kazakhstan, Tajikistan and Kyrgyzstan. It is an unofficial but widely spoken language in Ukraine, Moldova, Latvia, Turkmenistan and Estonia and, to a lesser extent, the other countries that were once constituent republics...

documents and scientific reports. The government aggressively supported efforts at machine translation

Machine translation

Machine translation, sometimes referred to by the abbreviation MT is a sub-field of computational linguistics that investigates the use of computer software to translate text or speech from one natural language to another.On a basic...

starting in 1954. At the outset, the researchers were optimistic. Noam Chomsky

Noam Chomsky

Avram Noam Chomsky is an American linguist, philosopher, cognitive scientist, and activist. He is an Institute Professor and Professor in the Department of Linguistics & Philosophy at MIT, where he has worked for over 50 years. Chomsky has been described as the "father of modern linguistics" and...

's new work in grammar

Grammar

In linguistics, grammar is the set of structural rules that govern the composition of clauses, phrases, and words in any given natural language. The term refers also to the study of such rules, and this field includes morphology, syntax, and phonology, often complemented by phonetics, semantics,...

was streamlining the translation process and there were "many predictions of imminent 'breakthroughs'".

However, researchers had underestimated the profound difficulty of disambiguation. In order to translate a sentence, a machine needed to have some idea what the sentence was about, otherwise it made ludicrous mistakes. An anecdotal example was "the spirit is willing but the flesh is weak." Translated back and forth with Russian, it became "the vodka is good but the meat is rotten." Similarly, "out of sight, out of mind" became "blind idiot." Later researchers would call this the commonsense knowledge problem.

By 1964, National Research Council

United States National Research Council

The National Research Council of the USA is the working arm of the United States National Academies, carrying out most of the studies done in their names.The National Academies include:* National Academy of Sciences...

had become concerned about the lack of progress and formed the Automatic Language Processing Advisory Committee (ALPAC

ALPAC

ALPAC was a committee of seven scientists led by John R. Pierce, established in 1964 by the U. S. Government in order to evaluate the progress in computational linguistics in general and machine translation in particular...

) to look into the problem. They concluded, in a famous 1966 report, that machine translation was more expensive, less accurate and slower than human translation. After spending some 20 million dollars, the NRC

United States National Research Council

The National Research Council of the USA is the working arm of the United States National Academies, carrying out most of the studies done in their names.The National Academies include:* National Academy of Sciences...

ended all support. Careers were destroyed and research ended.

Machine translation is still an open

Open problem

In science and mathematics, an open problem or an open question is a known problem that can be accurately stated, and has not yet been solved . Some questions remain unanswered for centuries before solutions are found...

research problem in the 21st century.

The abandonment of connectionism in 1969

- See also: Perceptrons (book) and Frank Rosenblatt

Some of the earliest work in AI used networks or circuits of connected units to simulate intelligent behavior. Examples of this kind of work, called "connectionism", include Walter Pitts and Warren McCulloch's first description of a neural network for logic and Marvin Minsky's work on the SNARC system. In the late '50s, most of these approaches were abandoned when researchers began to explore symbolic reasoning as the essence of intelligence, following the success of programs like the Logic Theorist and the General Problem Solver.

However, one type of connectionist work continued: the study of perceptrons, invented by Frank Rosenblatt, who kept the field alive with his salesmanship and the sheer force of his personality.

He optimistically predicted that the "perceptron may eventually be able to learn, make decisions, and translate languages."

Mainstream research into perceptrons came to an abrupt end in 1969, when Marvin Minsky and Seymour Papert published the book Perceptrons, which was perceived as outlining the limits of what perceptrons could do.

Connectionist approaches were abandoned for the next decade or so. While important work, such as Paul Werbos' discovery of backpropagation, continued in a limited way, major funding for connectionist projects was difficult to find in the 1970s and early '80s.

The "winter" of connectionist research came to an end in the middle '80s, when the work of John Hopfield, David Rumelhart and others revived large-scale interest in neural networks. Rosenblatt did not live to see this, however. He died in a boating accident shortly after Perceptrons was published.

The Lighthill report

In 1973, professor Sir James Lighthill was asked by Parliament to evaluate the state of AI research in the United Kingdom. His report, now called the Lighthill report, criticized the utter failure of AI to achieve its "grandiose objectives." He concluded that nothing being done in AI couldn't be done in other sciences. He specifically mentioned the problem of "combinatorial explosion" or "intractability", which implied that many of AI's most successful algorithms would grind to a halt on real world problems and were only suitable for solving "toy" versions. (John McCarthy would later write in response that "the combinatorial explosion problem has been recognized in AI from the beginning.")

The report was contested in a debate broadcast in the BBC "Controversy" series in 1973. The debate, "The general purpose robot is a mirage", held at the Royal Institution, pitted Lighthill against the team of Donald Michie, John McCarthy and Richard Gregory.

The report led to the complete dismantling of AI research in England. AI research continued in only a few top universities (Edinburgh, Essex and Sussex). This "created a bow-wave effect that led to funding cuts across Europe," writes James Hendler. Research would not revive on a large scale until 1983, when Alvey (a research project of the British Government) began to fund AI again from a war chest of £350 million in response to the Japanese Fifth Generation Project (see below). Alvey had a number of UK-only requirements which did not sit well internationally, especially with US partners, and lost Phase 2 funding.

DARPA's funding cuts of the early '70s

During the 1960s, the Defense Advanced Research Projects Agency (then known as "ARPA", now known as "DARPA") provided millions of dollars for AI research with almost no strings attached. DARPA's director in those years, J. C. R. Licklider, believed in "funding people, not projects" and allowed AI's leaders (such as Marvin Minsky, John McCarthy, Herbert Simon or Allen Newell) to spend it almost any way they liked.

This attitude changed after the passage of the Mansfield Amendment in 1969, which required DARPA to fund "mission-oriented direct research, rather than basic undirected research." Pure undirected research of the kind that had gone on in the '60s would no longer be funded by DARPA. Researchers now had to show that their work would soon produce some useful military technology. AI research proposals were held to a very high standard. The situation was not helped when the Lighthill report and DARPA's own study (the American Study Group) suggested that most AI research was unlikely to produce anything truly useful in the foreseeable future. DARPA's money was directed at specific projects with identifiable goals, such as autonomous tanks and battle management systems. By 1974, funding for AI projects was hard to find.

AI researcher Hans Moravec blamed the crisis on the unrealistic predictions of his colleagues: "Many researchers were caught up in a web of increasing exaggeration. Their initial promises to DARPA had been much too optimistic. Of course, what they delivered stopped considerably short of that. But they felt they couldn't in their next proposal promise less than in the first one, so they promised more." The result, Moravec claims, is that some of the staff at DARPA had lost patience with AI research. "It was literally phrased at DARPA that 'some of these people were going to be taught a lesson ...'" Moravec told Daniel Crevier.

While the autonomous tank project was a failure, the battle management system (the Dynamic Analysis and Replanning Tool) proved to be enormously successful, saving billions in the first Gulf War, repaying all of DARPA's investment in AI and justifying DARPA's pragmatic policy.

The SUR debacle

DARPA was deeply disappointed with researchers working on the Speech Understanding Research (SUR) program at Carnegie Mellon University. DARPA had hoped for, and felt it had been promised, a system that could respond to voice commands from a pilot. The SUR team had developed a system which could recognize spoken English, but only if the words were spoken in a particular order. DARPA felt it had been duped and, in 1974, it cancelled a three million dollar a year grant.

Many years later, successful commercial speech recognition systems would use the technology developed by the Carnegie Mellon team (such as hidden Markov models) and the market for speech recognition systems would reach $4 billion by 2001.

The collapse of the Lisp machine market in 1987

In the 1980s a form of AI program called an "expert system" was adopted by corporations around the world. The first commercial expert system was XCON, developed at Carnegie Mellon for Digital Equipment Corporation, and it was an enormous success: it was estimated to have saved the company 40 million dollars over just six years of operation. Corporations around the world began to develop and deploy expert systems and by 1985 they were spending over a billion dollars on AI, most of it on in-house AI departments. An industry grew up to support them, including software companies like Teknowledge and Intellicorp (KEE), and hardware companies like Symbolics and Lisp Machines Inc., which built specialized computers, called Lisp machines, that were optimized to process the programming language Lisp, the preferred language for AI.

In 1987 (three years after Minsky and Schank's prediction) the market for specialized AI hardware collapsed. Workstations by companies like Sun Microsystems offered a powerful alternative to Lisp machines, and companies like Lucid offered a Lisp environment for this new class of workstations. The performance of these general-purpose workstations became an increasingly difficult challenge for Lisp machines. Companies like Lucid and Franz LISP offered increasingly powerful versions of Lisp. For example, benchmarks were published showing workstations maintaining a performance advantage over Lisp machines. Later, desktop computers built by Apple and IBM would also offer a simpler and more popular architecture on which to run Lisp applications. By 1987 they had become more powerful than the more expensive Lisp machines. The desktop computers also had rule-based engines such as CLIPS available. These alternatives left consumers with no reason to buy an expensive machine specialized for running Lisp. An entire industry worth half a billion dollars was replaced in a single year.

Commercially, many Lisp machine companies failed, including Symbolics, Lisp Machines Inc. and Lucid Inc. Other companies, like Texas Instruments and Xerox, abandoned the field. However, a number of customer companies (that is, companies using systems written in Lisp and developed on Lisp machine platforms) continued to maintain systems. In some cases, this maintenance involved the assumption of the resulting support work.

The fall of expert systems

By the early '90s, the earliest successful expert systems, such as XCON, proved too expensive to maintain. They were difficult to update, they could not learn, they were "brittle" (i.e., they could make grotesque mistakes when given unusual inputs), and they fell prey to problems (such as the qualification problem) that had been identified years earlier in research in nonmonotonic logic. Expert systems proved useful, but only in a few special contexts. Another problem was the computational hardness of truth maintenance efforts for general knowledge. KEE used an assumption-based approach (see NASA, TEXSYS) supporting multiple-world scenarios that was difficult to understand and apply.

The few remaining expert system shell companies were eventually forced to downsize and search for new markets and software paradigms, like case-based reasoning or universal database access. The maturation of Common Lisp saved many systems such as ICAD, which found application in knowledge-based engineering. Other systems, such as Intellicorp's KEE, moved from Lisp to a C++ (variant) on the PC and helped establish object-oriented technology (including providing major support for the development of UML).

The fizzle of the fifth generation

In 1981, the Japanese Ministry of International Trade and Industry set aside $850 million for the Fifth generation computer project. Their objectives were to write programs and build machines that could carry on conversations, translate languages, interpret pictures, and reason like human beings. By 1991, the impressive list of goals penned in 1981 had not been met. Indeed, some of them had not been met in 2001. As with other AI projects, expectations had run much higher than what was actually possible.

Cutbacks at the Strategic Computing Initiative

In 1983, in response to the fifth generation project, DARPA again began to fund AI research through the Strategic Computing Initiative. As originally proposed, the project would begin with practical, achievable goals, which even included strong AI as a long-term objective. The program was under the direction of the Information Processing Technology Office (IPTO) and was also directed at supercomputing and microelectronics. By 1985 it had spent $100 million and 92 projects were underway at 60 institutions, half in industry, half in universities and government labs. AI research was generously funded by the SCI.

Jack Schwarz, who ascended to the leadership of IPTO in 1987, dismissed expert systems as "clever programming" and cut funding to AI "deeply and brutally," "eviscerating" SCI. Schwarz felt that DARPA should focus its funding only on those technologies which showed the most promise. In his words, DARPA should "surf", rather than "dog paddle", and he felt strongly that AI was not "the next wave". Insiders in the program cited problems in communication, organization and integration. A few projects survived the funding cuts, including a pilot's assistant and an autonomous land vehicle (which were never delivered) and the DART battle management system, which (as noted above) was successful.

The winter that wouldn't end

A survey of recent reports suggests that AI's reputation is still less than pristine:

- Alex Castro, quoted in The Economist, 7 June 2007: "[Investors] were put off by the term 'voice recognition' which, like 'artificial intelligence', is associated with systems that have all too often failed to live up to their promises."

- Patty Tascarella in Pittsburgh Business Times, 2006: "Some believe the word 'robotics' actually carries a stigma that hurts a company's chances at funding."

- John Markoff in the New York Times, 2005: "At its low point, some computer scientists and software engineers avoided the term artificial intelligence for fear of being viewed as wild-eyed dreamers."

AI under different names

Many researchers in AI today deliberately call their work by other names, such as informatics, machine learning, knowledge-based systems, business rules management, cognitive systems, intelligent systems, intelligent agents or computational intelligence, to indicate that their work emphasizes particular tools or is directed at a particular sub-problem. Although this may be partly because they consider their field to be fundamentally different from AI, it is also true that the new names help to procure funding by avoiding the stigma of false promises attached to the name "artificial intelligence."

AI behind the scenes

"Many observers still think that the AI winter was the end of the story and that nothing since has come of the AI field," writes Ray Kurzweil, "yet today many thousands of AI applications are deeply embedded in the infrastructure of every industry." In the late '90s and early 21st century, AI technology became widely used as elements of larger systems, but the field is rarely credited for these successes. Nick Bostrom explains: "A lot of cutting edge AI has filtered into general applications, often without being called AI because once something becomes useful enough and common enough it's not labeled AI anymore." Rodney Brooks adds: "there's this stupid myth out there that AI has failed, but AI is around you every second of the day."

Technologies developed by AI researchers have achieved commercial success in a number of domains, such as machine translation, data mining, industrial robotics, logistics, speech recognition, banking software, medical diagnosis and Google's search engine.

Fuzzy logic controllers have been developed for automatic gearboxes in automobiles: the 2006 Audi TT, VW Touareg and VW Caravelle feature the DSP transmission, which utilizes fuzzy logic, and a number of Škoda variants (such as the Škoda Fabia) also currently include a fuzzy-logic-based controller. Camera sensors widely utilize fuzzy logic to enable focus.

Heuristic search and data analytics are both technologies that have developed from the evolutionary computing and machine learning subdivision of the AI research community. Again, these techniques have been applied to a wide range of real world problems with considerable commercial success.

In the case of heuristic search, ILOG has developed a large number of applications, including deriving job shop schedules for many manufacturing installations (http://findarticles.com/p/articles/mi_m0KJI/is_7_117/ai_n14863928). Many telecommunications companies also make use of this technology in the management of their workforces; for example, BT Group has deployed heuristic search in a scheduling application that provides the work schedules of 20,000 engineers.

Data analytics technology, which utilizes algorithms for the automated formation of classifiers that were developed in the supervised machine learning community in the 1990s (for example, TDIDT, Support Vector Machines, Neural Nets, IBL), is now used pervasively by companies for marketing survey targeting and discovery of trends and features in data sets.

AI funding

Researchers and economists judge the status of an AI winter primarily by reviewing which AI projects are being funded, how much and by whom. Trends in funding are often set by major funding agencies in the developed world. Currently, DARPA and a civilian funding program called EU-FP7 provide much of the funding for AI research in the US and European Union.

As of 2007, DARPA is soliciting AI research proposals under a number of programs including The Grand Challenge Program, Cognitive Technology Threat Warning System (CT2WS), "Human Assisted Neural Devices (SN07-43)", "Autonomous Real-Time Ground Ubiquitous Surveillance-Imaging System (ARGUS-IS)" and "Urban Reasoning and Geospatial Exploitation Technology (URGENT)".

Perhaps best known is DARPA's Grand Challenge Program, which has developed fully automated road vehicles that can successfully navigate real-world terrain in a fully autonomous fashion.

DARPA has also supported programs on the Semantic Web with a great deal of emphasis on intelligent management of content and automated understanding. However, James Hendler, the manager of the DARPA program at the time, expressed some disappointment with the outcome of the program.

The EU-FP7 funding program provides financial support to researchers within the European Union. Currently it funds AI research under the Cognitive Systems: Interaction and Robotics Programme (€193m), the Digital Libraries and Content Programme (€203m) and the FET programme (€185m).

Fear of another winter

Concerns are sometimes raised that a new AI winter could be triggered by any overly ambitious or unrealistic promise by prominent AI scientists. For example, some researchers feared that the widely publicized promises in the early 1990s that Cog would show the intelligence of a human two-year-old might lead to an AI winter. In fact, the Cog project and the success of Deep Blue seem to have led to an increase of interest in strong AI in that decade from both government and industry.

James Hendler observed in 2008 that AI funding in both the EU and the US was being channeled more into applications and cross-breeding with traditional sciences, such as bioinformatics. This shift away from basic research is happening at the same time as there is a drive towards applications of, for example, the Semantic Web. Invoking the pipeline argument (see underlying causes), Hendler saw a parallel with the '80s winter and warned of a coming AI winter in the '10s.

Hope of another spring

There are also constant reports that another AI spring is imminent or has already occurred:

- Raj Reddy, in his presidential address to AAAI, 1988: "[T]he field is more exciting than ever. Our recent advances are significant and substantial. And the mythical AI winter may have turned into an AI spring. I see many flowers blooming."