Byte

The byte is a unit of digital information

in computing

and telecommunications that most commonly consists of eight bit

s. Historically, a byte was the number of bits used to encode a single character

of text in a computer and for this reason it is the basic addressable

element in many computer architecture

s.

The size of the byte has historically been hardware dependent and no definitive standards exist that mandate the size. The de facto standard

of eight bits is a convenient power of two

permitting the values 0 through 255 for one byte. Many types of applications use variables representable in eight or fewer bits, and processor designers optimize for this common usage. The popularity of major commercial computing architectures have aided in the ubiquitous acceptance of the 8-bit size.

The term octet

was defined to explicitly denote a sequence of 8 bits because of the ambiguity associated with the term byte.

in July 1956, during the early design phase for the IBM Stretch

computer.

It is a respelling of bite to avoid accidental mutation to bit.

Early computers used a variety of 4-bit binary coded decimal (BCD) representations and the 6-bit

codes for printable graphic patterns common in the U.S. Army (Fieldata

) and Navy. These representations included alphanumeric characters and special graphical symbols. These sets were expanded in 1963 to 7 bits of coding, called the American Standard Code for Information Interchange

(ASCII) as the Federal Information Processing Standard

which replaced the incompatible teleprinter codes in use by different branches of the U.S. government. ASCII included the distinction of upper and lower case alphabets and a set of control character

s to facilitate the transmission of written language as well as printing device functions, such as page and line feeds, and the physical or logical control of data flow over the transmission media. During the early 1960s, since with just only one bit more an eight bits allows two four-bit patterns to efficiently encode two digits with binary coded decimal, the eight-bit EBCDIC

(see EBCDIC

history) character encoding was later adopted and promulgated as a standard by the IBM in the System/360

.

In the early 1960s, AT&T introduced digital telephony

first on long-distance trunk lines. These used the 8-bit µ-law encoding. This large investment promised to reduce transmission costs for 8-bit data. The use of 8-bit codes for digital telephony also caused 8-bit data octets to be adopted as the basic data unit of the early Internet

.

The development of 8-bit

microprocessor

s in the 1970s popularized this storage size. Microprocessors such as the Intel 8008

, the direct predecessor of the 8080

and the 8086

, used in early personal computers, could also perform a small number of operations on four bits, such as the DAA (decimal adjust) instruction, and the auxiliary carry (AC/NA) flag, which were used to implement decimal arithmetic routines. These four-bit quantities are sometimes called nibble

s, and correspond to hexadecimal

digits.

The term octet

is used to unambiguously specify a size of eight bits, and is used extensively in protocol definitions, for example.

and the Metric Interchange Format as the upper-case character B, while other standards, such as the International Electrotechnical Commission

(IEC) standard IEC 60027

, appear silent on the subject.

In the International System of Units

(SI), B is the symbol of the bel

, a unit of logarithmic power ratios named after Alexander Graham Bell

. The usage of B for byte therefore conflicts with this definition. It is also not consistent with the SI convention that only units named after persons should be capitalized. However, there is little danger of confusion because the bel is a rarely used unit. It is used primarily in its decadic fraction, the decibel

(dB), for signal strength

and sound pressure level measurements, while a unit for one tenth of a byte, i.e. the decibyte, is never used.

The unit symbol kB is commonly used for kilobyte

, but may be confused with the common meaning of kb for kilobit

. IEEE 1541 specifies the lower case character b as the symbol for bit

; however, the IEC 60027 and Metric-Interchange-Format specify bit (e.g., Mbit for megabit) for the symbol, a sufficient disambiguation from byte.

The lowercase letter o for octet

is a commonly used symbol in several non-English languages (e.g., French

and Romanian

), and is also used with metric prefixes (for example, ko and Mo)

Today the harmonized ISO/IEC 80000-13:2008 – Quantities and units — Part 13: Information science and technology

standard cancels and replaces subclauses 3.8 and 3.9 of IEC 60027-2:2005, namely those related to Information theory and Prefixes for binary multiples.

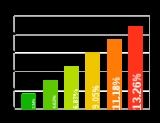

There has been considerable confusion about the meanings of SI (or metric) prefixes

used with the unit byte, especially concerning prefixes such as kilo (k or K) and mega (M) as shown in the chart Prefixes for bit and byte. Since computer memory is designed with binary logic, multiples are expressed in powers of 2

, rather than 10. The software and computer industries often use binary estimates of the SI-prefixed quantities, while producers of computer storage devices prefer the SI values. This is the reason for specifying computer hard drive capacities of, say, 100 GB, when it contains 93 GiB of storage space.

While the numerical difference between the decimal and binary interpretations is small for the prefixes kilo and mega, it grows to over 20% for prefix yotta, illustrated in the linear-log graph (at right) of difference versus storage size.

in certain programming language

s. The C

and C++

programming languages, for example, define byte as an "addressable unit of data storage large enough to hold any member of the basic character set of the execution environment" (clause 3.6 of the C standard). The C standard requires that the

primitive

In data transmission systems a byte is defined as a contiguous sequence of binary bits in a serial data stream, such as in modem or satellite communications, which is the smallest meaningful unit of data. These bytes might include start bits, stop bits, or parity bits, and thus could vary from 7 to 12 bits to contain a single 7-bit ASCII code.

Units of information

In computing and telecommunications, a unit of information is the capacity of some standard data storage system or communication channel, used to measure the capacities of other systems and channels...

in computing

Computing

Computing is usually defined as the activity of using and improving computer hardware and software. It is the computer-specific part of information technology...

and telecommunications that most commonly consists of eight bit

Bit

A bit is the basic unit of information in computing and telecommunications; it is the amount of information stored by a digital device or other physical system that exists in one of two possible distinct states...

s. Historically, a byte was the number of bits used to encode a single character

Character (computing)

In computer and machine-based telecommunications terminology, a character is a unit of information that roughly corresponds to a grapheme, grapheme-like unit, or symbol, such as in an alphabet or syllabary in the written form of a natural language....

of text in a computer and for this reason it is the basic addressable

Address space

In computing, an address space defines a range of discrete addresses, each of which may correspond to a network host, peripheral device, disk sector, a memory cell or other logical or physical entity.- Overview :...

element in many computer architecture

Computer architecture

In computer science and engineering, computer architecture is the practical art of selecting and interconnecting hardware components to create computers that meet functional, performance and cost goals and the formal modelling of those systems....

s.

The size of the byte has historically been hardware dependent and no definitive standards exist that mandate the size. The de facto standard

De facto standard

A de facto standard is a custom, convention, product, or system that has achieved a dominant position by public acceptance or market forces...

of eight bits is a convenient power of two

Power of two

In mathematics, a power of two means a number of the form 2n where n is an integer, i.e. the result of exponentiation with as base the number two and as exponent the integer n....

permitting the values 0 through 255 for one byte. Many types of applications use variables representable in eight or fewer bits, and processor designers optimize for this common usage. The popularity of major commercial computing architectures have aided in the ubiquitous acceptance of the 8-bit size.

The term octet

Octet (computing)

An octet is a unit of digital information in computing and telecommunications that consists of eight bits. The term is often used when the term byte might be ambiguous, as there is no standard for the size of the byte.-Overview:...

was defined to explicitly denote a sequence of 8 bits because of the ambiguity associated with the term byte.
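The relationship between byte width and value range mentioned above can be sketched in a few lines of Python. This is only an illustration: the function name `value_range` is hypothetical, and the widths in the loop are arbitrary examples rather than a list of historical machines.

```python
def value_range(bits):
    """Return (lowest, highest) unsigned value that fits in `bits` bits."""
    if bits <= 0:
        raise ValueError("bits must be a positive integer")
    # n bits give 2**n distinct patterns, numbered 0 .. 2**n - 1.
    return 0, (1 << bits) - 1


# Illustrative widths only; an octet is the 8-bit case.
for width in (6, 7, 8, 9):
    low, high = value_range(width)
    print(f"{width}-bit byte: {low}..{high} ({high + 1} distinct values)")
```

For the eight-bit case this gives the range 0 through 255 stated in the text.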

History

The term byte was coined by Dr. Werner Buchholz in July 1956, during the early design phase for the IBM Stretch computer.

It is a respelling of bite to avoid accidental mutation to bit.

Early computers used a variety of 4-bit binary-coded decimal (BCD) representations and the 6-bit codes for printable graphic patterns common in the U.S. Army (Fieldata) and Navy. These representations included alphanumeric characters and special graphical symbols. These sets were expanded in 1963 to 7 bits of coding, called the American Standard Code for Information Interchange (ASCII), which as a Federal Information Processing Standard replaced the incompatible teleprinter codes in use by different branches of the U.S. government. ASCII included the distinction of upper and lower case alphabets and a set of control characters to facilitate the transmission of written language as well as printing device functions, such as page and line feeds, and the physical or logical control of data flow over the transmission media. Because eight bits, only one bit more than the seven of ASCII, can hold two four-bit patterns and so efficiently encode two binary-coded decimal digits, the eight-bit EBCDIC character encoding (see EBCDIC history) was adopted during the early 1960s and later promulgated as a standard by IBM in the System/360.
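The point about two four-bit patterns fitting in one eight-bit byte can be illustrated with a minimal packed-BCD sketch. The helper names `pack_bcd` and `unpack_bcd` are hypothetical, and placing the more significant digit in the upper four bits is an assumption made for the example, not a claim about any particular machine.

```python
def pack_bcd(tens, ones):
    """Pack two decimal digits into one 8-bit value, four bits per digit."""
    if not (0 <= tens <= 9 and 0 <= ones <= 9):
        raise ValueError("each digit must be in the range 0..9")
    # Assumed layout: high four bits hold the tens digit, low four the ones.
    return (tens << 4) | ones


def unpack_bcd(byte):
    """Split an 8-bit packed-BCD value back into its two decimal digits."""
    if not 0 <= byte <= 0xFF:
        raise ValueError("value does not fit in eight bits")
    return (byte >> 4) & 0x0F, byte & 0x0F


packed = pack_bcd(4, 2)
print(f"{packed:08b}")     # bit pattern of the packed digits 4 and 2
print(unpack_bcd(packed))  # the two digits recovered from the byte
```

A 6-bit or 7-bit unit would leave bits unused or split a digit across units, which is the inefficiency the eight-bit size avoids.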

In the early 1960s, AT&T introduced digital telephony, first on long-distance trunk lines. These used the 8-bit µ-law encoding. This large investment promised to reduce transmission costs for 8-bit data. The use of 8-bit codes for digital telephony also caused 8-bit data octets to be adopted as the basic data unit of the early Internet.

The development of 8-bit microprocessors in the 1970s popularized this storage size. Microprocessors such as the Intel 8008, the direct predecessor of the 8080 and the 8086 used in early personal computers, could also perform a small number of operations on four bits, supported by the DAA (decimal adjust) instruction and the auxiliary carry (AC/NA) flag, which were used to implement decimal arithmetic routines. These four-bit quantities are sometimes called nibbles, and correspond to hexadecimal digits.
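The correspondence between nibbles and hexadecimal digits can be shown with a short sketch. The function name `split_nibbles` is hypothetical and the sample value 0xA7 is arbitrary.

```python
def split_nibbles(byte):
    """Return the (high, low) four-bit halves of an 8-bit value."""
    if not 0 <= byte <= 0xFF:
        raise ValueError("value does not fit in eight bits")
    return byte >> 4, byte & 0x0F


value = 0xA7
high, low = split_nibbles(value)
# Each nibble is in 0..15, so it prints as exactly one hexadecimal digit.
print(f"{high:X} {low:X}")
print(f"{value:02X}")
```

Writing the two nibbles side by side reproduces the two-digit hexadecimal form of the byte, which is why hexadecimal is a common notation for byte values.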

The term octet is used to unambiguously specify a size of eight bits, and is used extensively, for example, in protocol definitions.

Unit symbol

The unit symbol for the byte is specified in IEEE 1541 and the Metric Interchange Format as the upper-case character B, while other standards, such as the International Electrotechnical Commission (IEC) standard IEC 60027, appear silent on the subject.

In the International System of Units (SI), B is the symbol of the bel, a unit of logarithmic power ratios named after Alexander Graham Bell. The usage of B for byte therefore conflicts with this definition. It is also not consistent with the SI convention that only units named after persons should be capitalized. However, there is little danger of confusion because the bel is a rarely used unit. It is used primarily in its decadic fraction, the decibel (dB), for signal strength and sound pressure level measurements, while a unit for one tenth of a byte, i.e. the decibyte, is never used.

The unit symbol kB is commonly used for kilobyte, but may be confused with the common meaning of kb for kilobit. IEEE 1541 specifies the lower case character b as the symbol for bit; however, IEC 60027 and the Metric Interchange Format specify bit (e.g., Mbit for megabit) for the symbol, a sufficient disambiguation from byte.

The lowercase letter o for octet is a commonly used symbol in several non-English languages (e.g., French and Romanian), and is also used with metric prefixes (for example, ko and Mo).

Today the harmonized standard ISO/IEC 80000-13:2008, Quantities and units, Part 13: Information science and technology, cancels and replaces subclauses 3.8 and 3.9 of IEC 60027-2:2005, namely those related to information theory and prefixes for binary multiples.

Unit multiples

There has been considerable confusion about the meanings of SI (or metric) prefixes used with the unit byte, especially concerning prefixes such as kilo (k or K) and mega (M) as shown in the chart Prefixes for bit and byte. Since computer memory is designed with binary logic, multiples are expressed in powers of 2, rather than 10. The software and computer industries often use binary estimates of the SI-prefixed quantities, while producers of computer storage devices prefer the SI values. This is the reason a computer hard drive may be specified with a capacity of, say, 100 GB when it contains about 93 GiB of storage space.

While the numerical difference between the decimal and binary interpretations is small for the prefixes kilo and mega, it grows to over 20% for the prefix yotta, as illustrated in the linear-log graph (at right) of difference versus storage size.
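The figures in this section can be checked with a little arithmetic. The sketch below assumes the usual pairing of each decimal prefix with the corresponding power of 1024 (kilo with 2^10, mega with 2^20, and so on); the names `relative_difference` and `gb_to_gib` are hypothetical.

```python
PREFIXES = ["kilo", "mega", "giga", "tera", "peta", "exa", "zetta", "yotta"]


def relative_difference(n):
    """Excess of the binary multiple 1024**n over the decimal 1000**n."""
    decimal = 1000 ** n
    binary = 1024 ** n
    return (binary - decimal) / decimal


def gb_to_gib(gigabytes):
    """Convert decimal gigabytes (10**9 bytes) to gibibytes (2**30 bytes)."""
    return gigabytes * 10**9 / 2**30


for n, name in enumerate(PREFIXES, start=1):
    print(f"{name:>5}: {relative_difference(n):.1%}")

print(f"100 GB = {gb_to_gib(100):.2f} GiB")
```

The difference is 2.4% at kilo and passes 20% at yotta, and 100 GB comes out at roughly 93 GiB, matching the hard drive example.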

Common uses

The byte is also defined as a data type in certain programming languages. The C and C++ programming languages, for example, define byte as an "addressable unit of data storage large enough to hold any member of the basic character set of the execution environment" (clause 3.6 of the C standard). The C standard requires that the char integral data type be capable of holding at least 256 different values and be represented by at least 8 bits (clause 5.2.4.2.1). Various implementations of C and C++ reserve 8, 9, 16, 32, or 36 bits for the storage of a byte. The actual number of bits in a particular implementation is given by the macro CHAR_BIT, defined in the limits.h header. Java's primitive byte data type is always defined as consisting of 8 bits and being a signed data type, holding values from −128 to 127.

In data transmission systems a byte is defined as a contiguous sequence of binary bits in a serial data stream, such as in modem or satellite communications, which is the smallest meaningful unit of data. These bytes might include start bits, stop bits, or parity bits, and thus could vary from 7 to 12 bits to contain a single 7-bit ASCII code.
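Returning to the programming-language definitions in this section, the same eight bits can be read either as an unsigned value (0 to 255) or as a signed value (−128 to 127, the range given for the Java byte type). The sketch below assumes two's-complement representation for the signed reading; the helper names `as_signed_8` and `as_unsigned_8` are hypothetical.

```python
def as_signed_8(value):
    """Reinterpret an unsigned 8-bit pattern (0..255) as a signed value."""
    if not 0 <= value <= 0xFF:
        raise ValueError("value does not fit in eight bits")
    # Assumes two's complement: patterns with the top bit set are negative.
    return value - 256 if value >= 128 else value


def as_unsigned_8(value):
    """Reinterpret a signed 8-bit value (-128..127) as an unsigned pattern."""
    if not -128 <= value <= 127:
        raise ValueError("value is outside the signed 8-bit range")
    return value & 0xFF


for pattern in (0x00, 0x7F, 0x80, 0xFF):
    print(f"0x{pattern:02X}: unsigned {pattern:3d}, signed {as_signed_8(pattern):4d}")
```

Python's built-in `int.from_bytes(..., signed=True)` performs the same reinterpretation and can serve as a cross-check.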