Cache algorithms


In computing, cache algorithms (also frequently called replacement algorithms or replacement policies) are optimizing instructions, or algorithms, that a computer program or a hardware-maintained structure can follow to manage a cache of information stored on the computer. When the cache is full, the algorithm must choose which items to discard to make room for the new ones.

The "hit rate" of a cache describes how often a searched-for item is actually found in the cache.

More efficient replacement policies keep track of more usage information in order to improve the hit rate (for a given cache size).

The "latency" of a cache describes how long after requesting a desired item the cache can return that item (when there is a hit).

Faster replacement strategies typically keep track of less usage information (or, in the case of a direct-mapped cache, no information) to reduce the time required to update that information.

Each replacement strategy is a compromise between hit rate and latency.



Belady's Algorithm

The most efficient caching algorithm would always discard the information that will not be needed for the longest time in the future. This optimal result is referred to as Belady's optimal algorithm or the clairvoyant algorithm. Since it is generally impossible to predict how far in the future information will be needed, this is generally not implementable in practice. The practical minimum can be calculated only after experimentation, by replaying a recorded sequence of accesses, and the effectiveness of the actually chosen cache algorithm can then be compared against it.
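Because the optimal policy needs the whole future access sequence, it can only be evaluated offline on a recorded trace. The following is a minimal sketch of such an offline computation; the function names `belady_hits` and `_next_use` are hypothetical, and the quadratic search for the next use is chosen for clarity rather than speed.

```python
from typing import Hashable, Sequence


def _next_use(trace: Sequence[Hashable], start: int, item: Hashable) -> float:
    """Index of the next reference to item at or after start, or infinity."""
    for j in range(start, len(trace)):
        if trace[j] == item:
            return j
    return float("inf")


def belady_hits(trace: Sequence[Hashable], capacity: int) -> int:
    """Count hits when the cache always evicts the item needed furthest in the future."""
    if capacity < 1:
        raise ValueError("capacity must be at least 1")
    cache = set()
    hits = 0
    for i, item in enumerate(trace):
        if item in cache:
            hits += 1
            continue
        if len(cache) == capacity:
            # Clairvoyant choice: it looks at the rest of the trace, hence offline only.
            victim = max(cache, key=lambda resident: _next_use(trace, i + 1, resident))
            cache.remove(victim)
        cache.add(item)
    return hits


trace = [1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5]
print(belady_hits(trace, 3))  # 5 hits, i.e. 7 misses out of 12 references
```

Running a practical policy over the same trace and comparing its hit count with this number gives the comparison described above.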

Least Recently Used (LRU): discards the least recently used items first. This algorithm requires keeping track of what was used when, which is expensive if one wants to make sure the algorithm always discards the least recently used item. General implementations of this technique require keeping "age bits" for cache lines and tracking the least recently used cache line based on those age bits. In such an implementation, every time a cache line is used, the age of all other cache lines changes. LRU is actually a family of caching algorithms with members including 2Q by Theodore Johnson and Dennis Shasha, and LRU/K by Pat O'Neil, Betty O'Neil and Gerhard Weikum.
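In software, exact LRU is often sketched with an insertion-ordered map rather than per-line age bits. The sketch below assumes Python's `collections.OrderedDict`; the class name `LRUCache` is hypothetical, and a `None` return stands for a miss.

```python
from collections import OrderedDict
from typing import Hashable, Optional


class LRUCache:
    """Evicts the least recently used key once capacity is exceeded."""

    def __init__(self, capacity: int) -> None:
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self.capacity = capacity
        # Keys are ordered from least (front) to most (back) recently used.
        self._data: OrderedDict = OrderedDict()

    def get(self, key: Hashable) -> Optional[object]:
        if key not in self._data:
            return None  # miss
        self._data.move_to_end(key)  # hit: key becomes most recently used
        return self._data[key]

    def put(self, key: Hashable, value: object) -> None:
        if key in self._data:
            self._data.move_to_end(key)
        self._data[key] = value
        if len(self._data) > self.capacity:
            self._data.popitem(last=False)  # drop the least recently used entry


cache = LRUCache(2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")     # "a" is now more recent than "b"
cache.put("c", 3)  # evicts "b"
```

The ordering plays the role of the age bits. Instead of updating an age for every line, each access moves one key to the back, so the front is always the LRU entry.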

Most Recently Used (MRU): in contrast to LRU, discards the most recently used items first. In findings presented at the 11th VLDB conference, Chou and DeWitt noted that "When a file is being repeatedly scanned in a [Looping Sequential] reference pattern, MRU is the best replacement algorithm." Subsequently, other researchers presenting at the 22nd VLDB conference noted that for random access patterns and repeated scans over large datasets (sometimes known as cyclic access patterns), MRU cache algorithms have more hits than LRU due to their tendency to retain older data. MRU algorithms are most useful in situations where the older an item is, the more likely it is to be accessed.
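The looping-scan effect can be reproduced with a small hit-count simulation. The sketch below runs LRU and MRU over a loop that is one item larger than the cache. The function `simulate` and the trace are hypothetical illustrations, not taken from the cited studies.

```python
from typing import Hashable, List, Sequence


def simulate(trace: Sequence[Hashable], capacity: int, evict_mru: bool) -> int:
    """Count hits for LRU (evict_mru=False) or MRU (evict_mru=True) replacement."""
    if capacity < 1:
        raise ValueError("capacity must be at least 1")
    recency: List[Hashable] = []  # least recently used first, most recent last
    hits = 0
    for item in trace:
        if item in recency:
            hits += 1
            recency.remove(item)
        elif len(recency) >= capacity:
            # MRU discards the newest entry; LRU discards the oldest one.
            recency.pop(-1 if evict_mru else 0)
        recency.append(item)
    return hits


looping_scan = list(range(4)) * 3  # 0,1,2,3,0,1,2,3,0,1,2,3
print(simulate(looping_scan, 3, evict_mru=False))  # 0 (LRU)
print(simulate(looping_scan, 3, evict_mru=True))   # 6 (MRU)
```

LRU scores no hits here because it always evicts exactly the item the loop will request next. MRU keeps older items that the scan is about to revisit.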

Pseudo-LRU (PLRU): For caches with large associativity (generally more than 4 ways), the implementation cost of LRU becomes prohibitive. If a scheme that almost always discards one of the least recently used items is sufficient, the PLRU algorithm can be used, which needs only one bit per cache item to work.
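One common PLRU variant, often called bit-PLRU, keeps exactly one "recently used" bit per line. The sketch below models a single cache set under that assumption. The class name `BitPLRUSet` is hypothetical, and real hardware would implement the same rule in logic rather than lists.

```python
from typing import List, Optional


class BitPLRUSet:
    """One cache set managed by bit-PLRU: a single 'recently used' bit per way."""

    def __init__(self, ways: int) -> None:
        if ways < 2:
            raise ValueError("bit-PLRU needs at least 2 ways")
        self.tags: List[Optional[str]] = [None] * ways
        self.used: List[int] = [0] * ways

    def _mark_used(self, way: int) -> None:
        self.used[way] = 1
        if all(self.used):
            # Every bit is set: clear all bits except the one just accessed.
            self.used = [0] * len(self.used)
            self.used[way] = 1

    def access(self, tag: str) -> bool:
        """Return True on a hit; on a miss, replace the first way whose bit is 0."""
        if tag in self.tags:
            self._mark_used(self.tags.index(tag))
            return True
        victim = self.used.index(0)  # at least one bit is always clear
        self.tags[victim] = tag
        self._mark_used(victim)
        return False


s = BitPLRUSet(4)
for t in "ABCD":
    s.access(t)  # fills the set; the fourth access resets the bits
s.access("A")    # hit
s.access("E")    # miss: evicts "B", the first line whose bit is clear
print(s.tags)    # ['A', 'E', 'C', 'D']
```

Here the approximation happens to pick the true LRU line ("B"). In general it only guarantees that the victim was not among the most recently touched lines.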

Random Replacement

Random Replacement (RR): randomly selects a candidate item and discards it to make space when necessary. This algorithm does not require keeping any information about the access history. For its simplicity, it has been used in ARM processors. It admits efficient stochastic simulation.
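A minimal sketch of random replacement, plus a Monte Carlo estimate of its expected hit rate, which illustrates the stochastic simulation mentioned above. The names `RandomReplacementCache` and `estimate_hit_rate` are hypothetical. The sketch assumes Python's `random.Random` as the source of randomness, whereas a hardware cache would pick a random way index instead.

```python
import random
from typing import Dict, Hashable, Optional, Sequence


class RandomReplacementCache:
    """Evicts a uniformly random resident key; keeps no access history."""

    def __init__(self, capacity: int, seed: Optional[int] = None) -> None:
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self.capacity = capacity
        self._rng = random.Random(seed)
        self._data: Dict[Hashable, object] = {}

    def get(self, key: Hashable) -> Optional[object]:
        return self._data.get(key)  # hits need no bookkeeping at all

    def put(self, key: Hashable, value: object) -> None:
        if key not in self._data and len(self._data) >= self.capacity:
            victim = self._rng.choice(list(self._data))
            del self._data[victim]
        self._data[key] = value


def estimate_hit_rate(trace: Sequence[Hashable], capacity: int,
                      trials: int = 200, seed: int = 0) -> float:
    """Monte Carlo estimate of the expected hit rate over independent random runs."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        cache = RandomReplacementCache(capacity, seed=rng.randrange(2**32))
        for key in trace:
            if cache.get(key) is None:
                cache.put(key, True)  # miss: insert, possibly evicting at random
            else:
                hits += 1
    return hits / (trials * len(trace))


print(estimate_hit_rate(list(range(4)) * 3, capacity=3))
```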

Segmented LRU

Segmented LRU (SLRU): An SLRU cache is divided into two segments: a probationary segment and a protected segment. Lines in each segment are ordered from the most to the least recently accessed. Data from misses is added to the cache at the most recently accessed end of the probationary segment. Hits are removed from wherever they currently reside and added to the most recently accessed end of the protected segment. Lines in the protected segment have thus been accessed at least twice. The protected segment is finite, so migration of a line from the probationary segment to the protected segment may force the migration of the LRU line in the protected segment to the most recently used (MRU) end of the probationary segment, giving this line another chance to be accessed before being replaced. The size limit on the protected segment is an SLRU parameter that varies according to the I/O workload patterns. Whenever data must be discarded from the cache, lines are obtained from the LRU end of the probationary segment.
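The SLRU rules above can be sketched as a key-only simulation. The class name `SLRUCache` and the parameter names `capacity` (total lines) and `protected_size` (the protected segment's limit) are hypothetical. The sketch assumes that 0 < `protected_size` < `capacity`, so the probationary segment always has a line to discard.

```python
from collections import OrderedDict
from typing import Hashable


class SLRUCache:
    """Segmented LRU with a probationary and a protected segment (keys only)."""

    def __init__(self, capacity: int, protected_size: int) -> None:
        if not 0 < protected_size < capacity:
            raise ValueError("need 0 < protected_size < capacity")
        self.capacity = capacity
        self.protected_size = protected_size
        # Each segment is ordered from least (front) to most (back) recently accessed.
        self.probationary: OrderedDict = OrderedDict()
        self.protected: OrderedDict = OrderedDict()

    def access(self, key: Hashable) -> bool:
        """Reference key; return True on a hit."""
        if key in self.probationary or key in self.protected:
            # Hit: move the line to the MRU end of the protected segment.
            self.probationary.pop(key, None)
            self.protected.pop(key, None)
            self.protected[key] = True
            if len(self.protected) > self.protected_size:
                # Demote the protected LRU line to the probationary MRU end.
                demoted, _ = self.protected.popitem(last=False)
                self.probationary[demoted] = True
            return True
        # Miss: insert at the MRU end of the probationary segment.
        self.probationary[key] = True
        if len(self.probationary) + len(self.protected) > self.capacity:
            self.probationary.popitem(last=False)  # discard the probationary LRU line
        return False


cache = SLRUCache(capacity=3, protected_size=1)
for key in ["a", "b", "a", "c", "d"]:
    cache.access(key)
print(list(cache.probationary), list(cache.protected))  # ['c', 'd'] ['a']
```

In this trace "b" is discarded even though "a" was inserted earlier, because the second access to "a" moved it into the protected segment.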

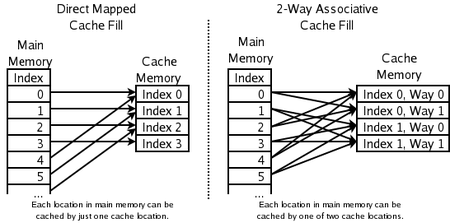

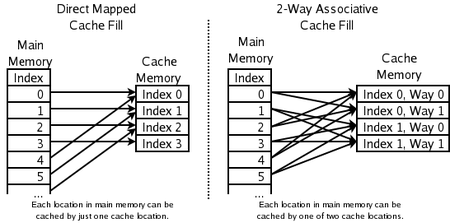

2-way set associative: for high-speed CPU caches where even PLRU is too slow. The address of a new item is used to calculate one of two possible locations in the cache where it is allowed to go. The LRU of the two is discarded. This requires one bit per pair of cache lines, to indicate which of the two was the least recently used.
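A minimal sketch of this scheme follows. It assumes that addresses are already block (line) addresses and that the set is chosen as the address modulo the number of sets. Real hardware takes the index and tag from bit fields of the address. The class name `TwoWaySetAssociativeCache` is hypothetical.

```python
from typing import List, Optional


class TwoWaySetAssociativeCache:
    """2-way set-associative cache with one LRU bit per set (pair of lines)."""

    def __init__(self, num_sets: int) -> None:
        self.num_sets = num_sets
        self.lines: List[List[Optional[int]]] = [[None, None] for _ in range(num_sets)]
        # lru[s] is the way (0 or 1) holding the least recently used line of set s.
        self.lru: List[int] = [0] * num_sets

    def access(self, address: int) -> bool:
        s = address % self.num_sets    # the set: the two allowed locations
        tag = address // self.num_sets
        ways = self.lines[s]
        for way in (0, 1):
            if ways[way] == tag:
                self.lru[s] = 1 - way  # the other line is now the LRU one
                return True
        victim = self.lru[s]           # discard the LRU of the two
        ways[victim] = tag
        self.lru[s] = 1 - victim
        return False


cache = TwoWaySetAssociativeCache(num_sets=4)
print([cache.access(a) for a in [0, 4, 0, 8, 0, 4]])
# [False, False, True, False, True, False]
```

Addresses 0, 4 and 8 all map to set 0. Address 8 replaces 4, which is the less recently used of the pair, so 0 keeps hitting.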

Direct-mapped cache

Direct-mapped cache: for the highest-speed CPU caches where even 2-way set associative caches are too slow. The address of the new item is used to calculate the one location in the cache where it is allowed to go. Whatever was there before is discarded.
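A direct-mapped cache needs no replacement state at all, as the sketch below shows. Like any illustration here, it assumes block addresses and a modulo index. The class name `DirectMappedCache` is hypothetical.

```python
from typing import List, Optional


class DirectMappedCache:
    """Each block address maps to exactly one line; no usage information is kept."""

    def __init__(self, num_lines: int) -> None:
        self.num_lines = num_lines
        self.tags: List[Optional[int]] = [None] * num_lines

    def access(self, address: int) -> bool:
        index = address % self.num_lines  # the single allowed location
        tag = address // self.num_lines
        if self.tags[index] == tag:
            return True
        self.tags[index] = tag            # whatever was there before is discarded
        return False


cache = DirectMappedCache(num_lines=4)
print([cache.access(a) for a in [0, 4, 0, 4, 1, 1]])
# [False, False, False, False, False, True]
```

Addresses 0 and 4 compete for the same line and keep evicting each other, even though the rest of the cache is empty. That is the price paid for the fastest possible lookup.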

Least Frequently Used (LFU): LFU counts how often an item is needed. Those that are used least often are discarded first.
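A minimal LFU sketch keeps a use counter per key and evicts the key with the smallest count. The class name `LFUCache` is hypothetical. The linear scan for the victim is for clarity only. Ties go to the key inserted earliest, because Python dicts preserve insertion order. That tie-break is an assumption of this sketch, not part of LFU itself.

```python
from collections import Counter
from typing import Dict, Hashable, Optional


class LFUCache:
    """Evicts the resident key with the fewest recorded uses."""

    def __init__(self, capacity: int) -> None:
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self.capacity = capacity
        self._data: Dict[Hashable, object] = {}
        self._uses: Counter = Counter()

    def get(self, key: Hashable) -> Optional[object]:
        if key not in self._data:
            return None  # miss
        self._uses[key] += 1
        return self._data[key]

    def put(self, key: Hashable, value: object) -> None:
        if key not in self._data and len(self._data) >= self.capacity:
            victim = min(self._data, key=lambda k: self._uses[k])
            del self._data[victim]
            del self._uses[victim]
        self._data[key] = value
        self._uses[key] += 1


cache = LFUCache(2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")
cache.get("a")     # "a" now has 3 uses, "b" has 1
cache.put("c", 3)  # evicts "b"
```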

Adaptive Replacement Cache

Adaptive Replacement Cache (ARC): constantly balances between LRU and LFU to improve the combined result. See also Clock with Adaptive Replacement (CAR).
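The balancing can be sketched by following the published ARC pseudocode of Megiddo and Modha, as we understand it. List T1 holds keys seen once recently (the recency side), and T2 holds keys seen at least twice (the frequency side). The "ghost" lists B1 and B2 remember keys recently evicted from T1 and T2. A hit in a ghost list shifts the adaptive target size `p` of T1 toward the side that would have helped. This is a key-only simulation, and the class and method names are hypothetical.

```python
from collections import OrderedDict
from typing import Hashable


class ARC:
    """Adaptive Replacement Cache tracking keys only (c = capacity)."""

    def __init__(self, capacity: int) -> None:
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self.c = capacity
        self.p = 0  # adaptive target size for T1
        self.t1, self.t2 = OrderedDict(), OrderedDict()  # cached keys, LRU first
        self.b1, self.b2 = OrderedDict(), OrderedDict()  # ghost keys, LRU first

    def _replace(self, key: Hashable) -> None:
        # Evict from T1 if it exceeds its target, otherwise from T2.
        if self.t1 and (len(self.t1) > self.p or (key in self.b2 and len(self.t1) == self.p)):
            old, _ = self.t1.popitem(last=False)
            self.b1[old] = True
        else:
            old, _ = self.t2.popitem(last=False)
            self.b2[old] = True

    def access(self, key: Hashable) -> bool:
        """Reference key; return True on a cache hit."""
        if key in self.t1 or key in self.t2:  # hit: promote to frequency side
            self.t1.pop(key, None)
            self.t2.pop(key, None)
            self.t2[key] = True
            return True
        if key in self.b1:  # ghost hit in B1: favour recency (grow p)
            self.p = min(self.c, self.p + max(len(self.b2) / len(self.b1), 1))
            self._replace(key)
            del self.b1[key]
            self.t2[key] = True
            return False
        if key in self.b2:  # ghost hit in B2: favour frequency (shrink p)
            self.p = max(0, self.p - max(len(self.b1) / len(self.b2), 1))
            self._replace(key)
            del self.b2[key]
            self.t2[key] = True
            return False
        # Complete miss: make room, then insert at the MRU end of T1.
        l1 = len(self.t1) + len(self.b1)
        total = l1 + len(self.t2) + len(self.b2)
        if l1 == self.c:
            if len(self.t1) < self.c:
                self.b1.popitem(last=False)
                self._replace(key)
            else:
                self.t1.popitem(last=False)
        elif total >= self.c:
            if total == 2 * self.c:
                self.b2.popitem(last=False)
            self._replace(key)
        self.t1[key] = True
        return False


arc = ARC(2)
print([arc.access(k) for k in [1, 2, 1, 3, 2, 1, 2]])
# [False, False, True, False, False, False, True]
```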

Multi Queue Caching Algorithm

Multi Queue (MQ) caching algorithm: (by Zhou, Philbin, and Li).

Other things to consider:

- Items with different cost: keep items that are expensive to obtain, e.g. those that take a long time to get.

- Items taking up more cache: If items have different sizes, the cache may want to discard a large item to store several smaller ones.

- Items that expire with time: Some caches keep information that expires (e.g. a news cache, a DNS cache, or a web browser cache). The computer may discard items because they are expired. Depending on the size of the cache, no further caching algorithm to discard items may be necessary.
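The expiry case can be sketched as a cache that discards entries by age alone, with no capacity-driven replacement. The class name `TTLCache` and the injectable `clock` parameter are hypothetical. By default the sketch uses `time.monotonic`, so wall-clock adjustments do not affect expiry.

```python
import time
from typing import Callable, Dict, Hashable, Optional, Tuple


class TTLCache:
    """Discards entries once they are older than a fixed time-to-live."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl_seconds
        self._clock = clock  # injectable so the expiry logic can be tested
        self._data: Dict[Hashable, Tuple[float, object]] = {}

    def __len__(self) -> int:
        return len(self._data)

    def put(self, key: Hashable, value: object) -> None:
        self._data[key] = (self._clock() + self.ttl, value)

    def get(self, key: Hashable) -> Optional[object]:
        entry = self._data.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self._clock() >= expires_at:
            del self._data[key]  # expired: discard lazily on access
            return None
        return value

    def purge_expired(self) -> None:
        """Eagerly drop every expired entry (e.g. from a periodic task)."""
        now = self._clock()
        expired = [k for k, (expires_at, _) in self._data.items() if now >= expires_at]
        for key in expired:
            del self._data[key]


now = [0.0]  # fake clock for the example
cache = TTLCache(ttl_seconds=10, clock=lambda: now[0])
cache.put("front-page", "<html>...</html>")
now[0] = 5.0
print(cache.get("front-page") is not None)  # True: still fresh
now[0] = 10.0
print(cache.get("front-page") is None)      # True: expired and discarded
```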

Various algorithms also exist to maintain cache coherency. This applies only to situations where multiple independent caches are used for the same data (for example, multiple database servers updating a single shared data file).

See also

- Cache

- Cache-oblivious algorithm

- CPU cache

- Page replacement algorithm

- Locality of reference