Color image pipeline


An image pipeline or video pipeline is a term used to describe the components that are typically used between an image source (such as a camera, a scanner, or the rendering engine in a computer game) and an image renderer (such as a television set, a computer screen, a computer printer or a cinema screen), or for performing any intermediate digital image processing consisting of two or more separate processing blocks. An image/video pipeline may be implemented as computer software, in a digital signal processor, on an FPGA, or as a fixed-function ASIC. In addition, analog circuits can be used to perform many of the same functions.

Typical components include image sensor corrections, noise reduction, image scaling, gamma correction, image enhancement, colorspace conversion, chroma subsampling, framerate conversion, image compression/video compression, and computer data storage/data transmission.
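A few of these components can be chained to show how a pipeline feeds each stage's output into the next. The following is a minimal sketch, not a reference implementation. It assumes a pure power-law gamma of 2.2, full-range Y'CbCr with BT.601 luma weights, 4:2:0 subsampling by plain 2x2 averaging, and even image dimensions. All function names are hypothetical.

```python
from functools import reduce
from typing import Any, Callable, List, Sequence, Tuple

Pixel = Tuple[float, float, float]
Image = List[List[Pixel]]
Plane = List[List[float]]


def gamma_encode(img: Image, gamma: float = 2.2) -> Image:
    # Pure power-law encoding (assumption); standards such as sRGB or BT.709
    # additionally use a short linear segment near black.
    return [[tuple(c ** (1.0 / gamma) for c in px) for px in row] for row in img]


def rgb_to_ycbcr(img: Image) -> Image:
    # BT.601 luma weights, full range, Cb/Cr centred on 0 (assumption).
    out: Image = []
    for row in img:
        new_row = []
        for r, g, b in row:
            y = 0.299 * r + 0.587 * g + 0.114 * b
            new_row.append((y, (b - y) / 1.772, (r - y) / 1.402))
        out.append(new_row)
    return out


def subsample_420(img: Image) -> Tuple[Plane, Plane, Plane]:
    # Keep full-resolution luma; average each chroma channel over 2x2 blocks.
    # Assumes even width and height.
    h, w = len(img), len(img[0])
    luma = [[px[0] for px in row] for row in img]

    def block_average(ch: int) -> Plane:
        return [
            [
                (img[y][x][ch] + img[y][x + 1][ch]
                 + img[y + 1][x][ch] + img[y + 1][x + 1][ch]) / 4.0
                for x in range(0, w, 2)
            ]
            for y in range(0, h, 2)
        ]

    return luma, block_average(1), block_average(2)


def run_pipeline(data: Any, stages: Sequence[Callable[[Any], Any]]) -> Any:
    # Feed the output of each stage into the next, in the given order.
    return reduce(lambda acc, stage: stage(acc), stages, data)


# Usage: a 2x4 mid-grey linear RGB image.
grey = [[(0.5, 0.5, 0.5)] * 4 for _ in range(2)]
y_plane, cb_plane, cr_plane = run_pipeline(grey, [gamma_encode, rgb_to_ycbcr, subsample_420])
```

Because the stages are just an ordered list, reordering or swapping components is a one-line change. This matters because, as discussed below, the order is rarely arbitrary.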

Typical goals of an imaging pipeline may be perceptually pleasing end results, colorimetric precision, a high degree of flexibility, low cost/low CPU utilization/long battery life, or reduction in bandwidth/file size.
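The bandwidth and file-size goal can be made concrete with back-of-the-envelope arithmetic for uncompressed frames. The sketch below counts samples per 2x2 pixel block for common chroma subsampling schemes. It assumes 1920x1080 frames, 8 bits per sample, and no blanking or padding. The helper names are hypothetical.

```python
# Samples stored per 2x2 block of pixels (4 luma samples plus chroma).
SAMPLES_PER_2X2_BLOCK = {
    "4:4:4": 12,  # 4 Y + 4 Cb + 4 Cr
    "4:2:2": 8,   # 4 Y + 2 Cb + 2 Cr (chroma halved horizontally)
    "4:2:0": 6,   # 4 Y + 1 Cb + 1 Cr (chroma halved both ways)
}


def raw_frame_bytes(width: int, height: int, scheme: str, bits_per_sample: int = 8) -> int:
    # Integer arithmetic: pixels * samples-per-block / 4 pixels-per-block * bits / 8.
    return width * height * SAMPLES_PER_2X2_BLOCK[scheme] * bits_per_sample // (4 * 8)


def raw_bits_per_second(width: int, height: int, scheme: str, fps: int,
                        bits_per_sample: int = 8) -> int:
    # Frame rate scales the data rate linearly.
    return raw_frame_bytes(width, height, scheme, bits_per_sample) * 8 * fps


for scheme in SAMPLES_PER_2X2_BLOCK:
    print(scheme, raw_frame_bytes(1920, 1080, scheme))
# 4:4:4 6220800
# 4:2:2 4147200
# 4:2:0 3110400
```

Under these assumptions, 4:2:0 halves the raw data relative to 4:4:4 before any compression is applied. At 30 frames per second, this is still about 746 Mbit/s uncompressed.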

Some functions may be algorithmically linear. In exact arithmetic, such elements can often be connected in any order without changing the end result; for example, linear, shift-invariant filters commute. Because digital computers use finite-precision approximations of numbers, this does not hold exactly in practice. Other elements may be non-linear or time-variant. In both cases, there are often only one or a few orderings of components that make sense for optimum precision as well as minimum hardware cost/CPU load.
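The effect of finite precision on ordering can be shown with two hypothetical fixed-point gain stages on 8-bit integers: a multiply by 3 and a divide by 2 implemented as a right shift. In exact arithmetic, both orders give 1.5x. With integer truncation, they do not. This is an illustrative sketch; the stage names are assumptions, not components of any particular pipeline.

```python
def halve(x: int) -> int:
    # Hypothetical fixed-point stage: divide by 2 with a right shift (truncates).
    return x >> 1


def triple(x: int) -> int:
    # Hypothetical fixed-point stage: multiply by 3 (exact for integers).
    return x * 3


def max_error(stages, inputs=range(256)) -> float:
    """Largest deviation from the exact result 1.5 * x over all 8-bit inputs."""
    worst = 0.0
    for x in inputs:
        y = x
        for stage in stages:
            y = stage(y)
        worst = max(worst, abs(y - 1.5 * x))
    return worst


halve_first = max_error([halve, triple])   # 1.5: the truncated bit is then multiplied by 3
triple_first = max_error([triple, halve])  # 0.5: truncation happens only once, at the end
mismatches = sum(1 for x in range(256) if triple(halve(x)) != halve(triple(x)))  # 128 (every odd x)
```

The more precise order needs a wider intermediate value, since 3 * 255 = 765 requires 10 bits. This illustrates the trade-off between precision and hardware cost.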

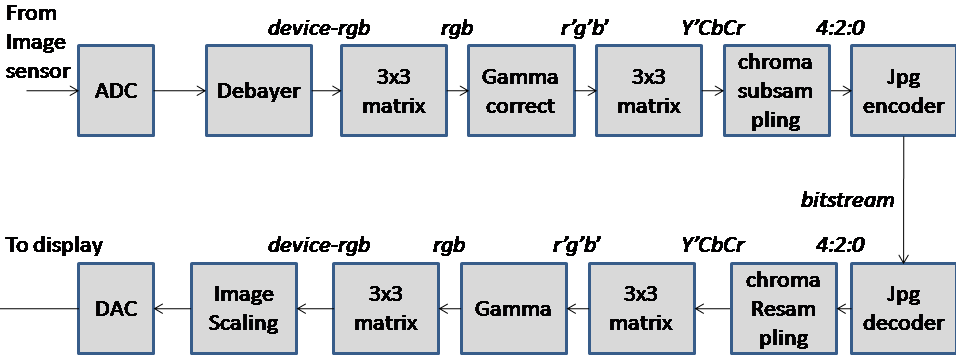

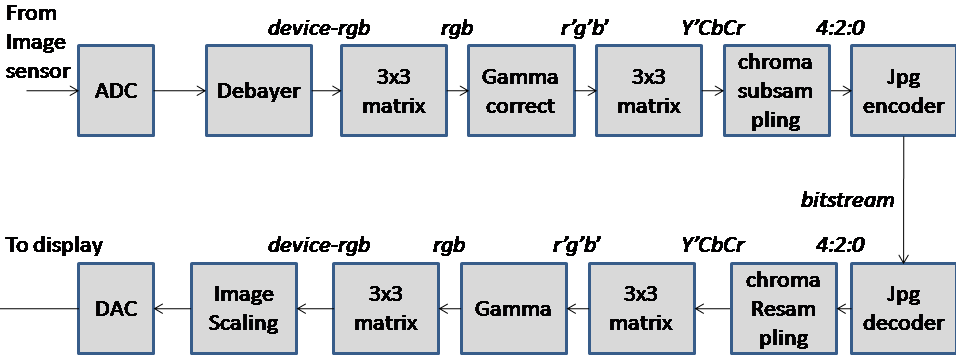

The figure shows a simplified, typical use of two imaging pipelines. The upper half shows components that might be found in a digital camera. The lower half shows components that might be used in an image viewing application on a computer for displaying the images produced by the camera.

Note that operations mimicking physical, linear behaviour, such as image scaling, are ideally carried out on the left-hand side, working on linear RGB signals. Operations that are meant to appear "perceptually uniform", such as lossy image compression, should instead be carried out on the right-hand side, working on gamma-corrected R'G'B' or Y'CbCr signals.
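The difference between scaling linear and gamma-corrected signals shows up even in a 2:1 box downscale of alternating black and white pixels. This sketch assumes the sRGB transfer function (IEC 61966-2-1) as the gamma encoding and uses values in [0, 1]. The function names are hypothetical.

```python
from typing import List


def srgb_to_linear(c: float) -> float:
    # sRGB decoding (IEC 61966-2-1), inputs in [0, 1].
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def linear_to_srgb(v: float) -> float:
    # Inverse of srgb_to_linear.
    return 12.92 * v if v <= 0.0031308 else 1.055 * v ** (1 / 2.4) - 0.055


def downscale_2x(encoded: List[float], in_linear_light: bool) -> List[float]:
    """2:1 box downscale of one row of sRGB-encoded values."""
    out = []
    for a, b in zip(encoded[0::2], encoded[1::2]):
        if in_linear_light:
            # Decode to linear light, average, re-encode.
            out.append(linear_to_srgb((srgb_to_linear(a) + srgb_to_linear(b)) / 2))
        else:
            # Average the gamma-corrected values directly.
            out.append((a + b) / 2)
    return out


stripes = [0.0, 1.0, 0.0, 1.0]  # alternating black/white pixels
print(downscale_2x(stripes, in_linear_light=False))  # [0.5, 0.5]
print(downscale_2x(stripes, in_linear_light=True))   # approx. [0.735, 0.735]
```

The stripes emit half the light of white, and the linear-light result preserves that. The naive encoded average of 0.5 decodes to only about 21% of white, so the downscaled area looks too dark.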
