Distributed memory

In computer science, distributed memory refers to a multiprocessor computer system in which each processor has its own private memory. Computational tasks can only operate on local data, and if remote data is required, the computational task must communicate with one or more remote processors. In contrast, a shared memory multiprocessor offers a single memory space used by all processors. Processors do not have to be aware of where data resides, except that there may be performance penalties and that race conditions must be avoided.
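The difference between local and remote access can be illustrated with a small sketch. It is a single-process emulation, not a real multi-machine program: the `Node` class, the `remote_read` helper and the `("get", key, reply_queue)` message format are all hypothetical names chosen for this example, and Python queues stand in for the interconnect.

```python
import queue
import threading


class Node:
    """A toy node: private data plus an inbox for incoming messages."""

    def __init__(self, rank, local_data):
        self.rank = rank
        self.local = dict(local_data)  # private memory, touched only by this node
        self.inbox = queue.Queue()     # stands in for the interconnect

    def serve_one_request(self):
        # Wait for one ("get", key, reply_queue) message and answer it
        # from this node's own local memory.
        op, key, reply_to = self.inbox.get()
        assert op == "get"
        reply_to.put(self.local[key])


def remote_read(owner, key):
    """Fetch `key` from another node by an explicit request/reply exchange."""
    reply = queue.Queue()
    owner.inbox.put(("get", key, reply))
    return reply.get(timeout=5)


def demo():
    node0 = Node(0, {"a": 1})
    node1 = Node(1, {"b": 2})
    server = threading.Thread(target=node1.serve_one_request)
    server.start()
    # node0 reads "a" directly, but "b" lives in node1's memory,
    # so it has to be requested with a message.
    total = node0.local["a"] + remote_read(node1, "b")
    server.join()
    return total
```

Calling `demo()` adds one local value and one value obtained by message exchange. In a shared memory system both reads would look the same to the program.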

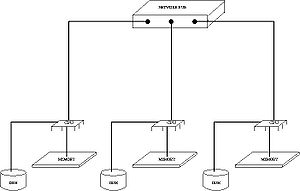

Architecture

In a distributed memory system there is typically a processor, a memory, and some form of interconnection that allows programs on each processor to interact with each other. The interconnect can be organised with point-to-point links, or separate hardware can provide a switching network. The network topology is a key factor in determining how the multiprocessor machine scales. The links between nodes can be implemented using a standard network protocol (for example Ethernet), using bespoke network links (used, for example, in the Transputer), or using dual-ported memories.
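To make the effect of topology on scaling concrete, the sketch below counts the point-to-point links and the worst-case hop count (diameter) for three textbook topologies. The choice of topologies and the function names are assumptions made for illustration; the text above does not single out any particular layout.

```python
def link_count(topology, n):
    """Number of bidirectional point-to-point links for n nodes."""
    if topology == "full":
        return n * (n - 1) // 2          # every pair of nodes is linked
    if topology == "ring":
        return n                         # assumes n >= 3
    if topology == "hypercube":
        d = n.bit_length() - 1           # dimension, requires n == 2**d
        if 1 << d != n:
            raise ValueError("hypercube needs a power-of-two node count")
        return d * n // 2                # each node has d links, each shared by 2 nodes
    raise ValueError(f"unknown topology: {topology}")


def diameter(topology, n):
    """Worst-case number of hops between any two nodes."""
    if topology == "full":
        return 1
    if topology == "ring":
        return n // 2
    if topology == "hypercube":
        d = n.bit_length() - 1
        if 1 << d != n:
            raise ValueError("hypercube needs a power-of-two node count")
        return d
    raise ValueError(f"unknown topology: {topology}")
```

For 16 nodes, a fully connected network needs 120 links with a diameter of 1, a ring needs 16 links with a diameter of 8, and a hypercube needs 32 links with a diameter of 4. The trade-off between wiring cost and communication distance is one reason the topology affects how far a machine can grow.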

Programming distributed memory machines

The key issue in programming distributed memory systems is how to distribute the data over the memories. Depending on the problem solved, the data can be distributed statically, or it can be moved through the nodes. Data can be moved on demand, or data can be pushed to the new nodes in advance.

As an example, if a problem can be described as a pipeline where data X is processed successively through functions F, G, H, etc. (the result is H(G(F(X)))), then this can be expressed as a distributed memory problem: the data is transmitted first to the node that computes F, which passes its result on to a second node that computes G, which in turn passes its result to a third node that computes H. This is also known as systolic computation.
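The pipeline arrangement can be sketched as follows. This is an emulation in one process, with each stage run by a thread and FIFO queues standing in for the links between nodes; `run_pipeline`, `stage`, and the concrete bodies of `F`, `G` and `H` are hypothetical and chosen only to make the example runnable.

```python
import queue
import threading

_DONE = object()  # sentinel marking the end of the data stream


def stage(func, inbox, outbox):
    """One pipeline node: receive an item, apply func, send the result on."""
    while True:
        item = inbox.get()
        if item is _DONE:
            outbox.put(_DONE)  # propagate shutdown to the next node
            return
        outbox.put(func(item))


def run_pipeline(funcs, inputs):
    """Stream inputs through one node per function, in order."""
    channels = [queue.Queue() for _ in range(len(funcs) + 1)]
    workers = [
        threading.Thread(target=stage, args=(f, channels[i], channels[i + 1]))
        for i, f in enumerate(funcs)
    ]
    for w in workers:
        w.start()
    for x in inputs:
        channels[0].put(x)
    channels[0].put(_DONE)

    results = []
    while True:
        item = channels[-1].get()
        if item is _DONE:
            break
        results.append(item)
    for w in workers:
        w.join()
    return results


# Placeholder stage functions, assumed for illustration only.
def F(x):
    return x + 1


def G(x):
    return x * 2


def H(x):
    return x - 3
```

`run_pipeline([F, G, H], [1, 2, 3])` yields H(G(F(x))) for each input. Because every stage holds only the item it is currently processing, the stages can work on different items at the same time once the pipeline is full.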

Data can be kept statically in nodes if most computations happen locally, and only changes on edges have to be reported to other nodes. An example of this is simulation where data is modeled using a grid, and each node simulates a small part of the larger grid. On every iteration, nodes inform all neighboring nodes of the new edge data.
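The edge exchange described above can be sketched for a one-dimensional grid. The three-point averaging rule, the fixed boundary cells and all function names are assumptions made for this example, and the "nodes" are plain lists processed in one program rather than separate machines.

```python
def step_global(grid):
    """Reference: one smoothing step on the whole grid, boundary cells fixed."""
    new = list(grid)
    for i in range(1, len(grid) - 1):
        new[i] = (grid[i - 1] + grid[i] + grid[i + 1]) / 3
    return new


def split(grid, parts):
    """Static distribution into contiguous equal chunks (assumes divisibility)."""
    size = len(grid) // parts
    return [grid[k * size:(k + 1) * size] for k in range(parts)]


def step_distributed(chunks):
    """One step in which each node sees its own chunk plus two received edge values."""
    # Exchange phase: every node sends its edge cells to its neighbours.
    # None marks the outer boundary of the global grid (no neighbour there).
    left_ghost = [None] + [c[-1] for c in chunks[:-1]]
    right_ghost = [c[0] for c in chunks[1:]] + [None]

    # Compute phase: purely local work on the chunk padded with ghost cells.
    new_chunks = []
    for chunk, lg, rg in zip(chunks, left_ghost, right_ghost):
        padded = [lg] + chunk + [rg]
        new = []
        for i in range(1, len(padded) - 1):
            if padded[i - 1] is None or padded[i + 1] is None:
                new.append(padded[i])  # global boundary cell stays fixed
            else:
                new.append((padded[i - 1] + padded[i] + padded[i + 1]) / 3)
        new_chunks.append(new)
    return new_chunks
```

Concatenating the chunks returned by `step_distributed(split(grid, 4))` gives the same values as `step_global(grid)`, even though each node exchanged only two cells per iteration with its neighbours.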

Distributed shared memory

In distributed shared memory, each node of a cluster has access to a large shared memory in addition to its own limited, non-shared private memory.

Shared memory versus distributed memory versus distributed shared memory

The advantage of (distributed) shared memory is that it offers a unified address space in which all data can be found. The advantage of distributed memory is that it excludes race conditions, and that it forces the programmer to think about data distribution.

The advantage of distributed (shared) memory is that it is easier to design a machine that scales with the algorithm.

Distributed shared memory hides the mechanism of communication, but it does not hide the latency of communication.
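A toy model may help show what is hidden and what is not. In the sketch below, the `ToyDSM` class, its block-wise address layout and the `remote_accesses` counter are all hypothetical. It ignores caching and coherence, which a real distributed shared memory system would have to handle, and only illustrates that the caller uses one global address for every access while some accesses still have to cross the interconnect.

```python
class ToyDSM:
    """Unified address space laid out block-wise over per-node memories."""

    def __init__(self, num_nodes, words_per_node):
        self.words_per_node = words_per_node
        self.memories = [[0] * words_per_node for _ in range(num_nodes)]
        self.remote_accesses = 0  # accesses that would cross the interconnect

    def _locate(self, addr):
        # Global address -> (owning node, offset in that node's memory).
        return divmod(addr, self.words_per_node)

    def write(self, requester, addr, value):
        owner, offset = self._locate(addr)
        if owner != requester:
            self.remote_accesses += 1
        self.memories[owner][offset] = value

    def read(self, requester, addr):
        owner, offset = self._locate(addr)
        if owner != requester:
            self.remote_accesses += 1
        return self.memories[owner][offset]
```

With `dsm = ToyDSM(4, 100)`, node 0 can call `dsm.write(0, 5, 11)` and `dsm.write(0, 250, 22)` in exactly the same way, but only the second call increments `dsm.remote_accesses`, because address 250 belongs to node 2. The interface is uniform while the cost is not.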