Thread (computer science)

Computer science

Computer science or computing science is the study of the theoretical foundations of information and computation and of practical techniques for their implementation and application in computer systems...

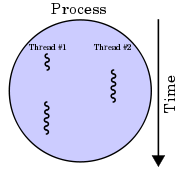

, a thread of execution is the smallest unit of processing that can be scheduled

Scheduling (computing)

In computer science, a scheduling is the method by which threads, processes or data flows are given access to system resources . This is usually done to load balance a system effectively or achieve a target quality of service...

by an operating system

Operating system

An operating system is a set of programs that manage computer hardware resources and provide common services for application software. The operating system is the most important type of system software in a computer system...

. The implementation of threads and process

Process (computing)

In computing, a process is an instance of a computer program that is being executed. It contains the program code and its current activity. Depending on the operating system , a process may be made up of multiple threads of execution that execute instructions concurrently.A computer program is a...

es differs from one operating system

Operating system

An operating system is a set of programs that manage computer hardware resources and provide common services for application software. The operating system is the most important type of system software in a computer system...

to another, but in most cases, a thread is contained inside a process. Multiple threads can exist within the same process and share resources such as memory

Shared memory

In computing, shared memory is memory that may be simultaneously accessed by multiple programs with an intent to provide communication among them or avoid redundant copies. Depending on context, programs may run on a single processor or on multiple separate processors...

, while different process

Process (computing)

In computing, a process is an instance of a computer program that is being executed. It contains the program code and its current activity. Depending on the operating system , a process may be made up of multiple threads of execution that execute instructions concurrently.A computer program is a...

es do not share these resources. In particular, the threads of a process share the latter's instructions (its code) and its context (the values that its variables reference at any given moment). To give an analogy, multiple threads in a process are like multiple cooks reading off the same cook book and following its instructions, not necessarily from the same page.
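The sharing of memory between threads of one process can be illustrated with a minimal sketch, here in Python using the standard `threading` module. The names `cookbook_notes` and `cook` are hypothetical and chosen only to echo the analogy above; the sketch shows several threads running the same code while writing to one shared list.

```python
import threading

# Shared state: every thread in the process sees the same list object.
cookbook_notes = []

def cook(name, page):
    # Each thread runs the same function (the same "cook book")
    # but works from its own arguments (its own "page").
    cookbook_notes.append((name, page))

threads = [
    threading.Thread(target=cook, args=(f"cook-{i}", i * 10))
    for i in range(3)
]
for t in threads:
    t.start()
for t in threads:
    t.join()  # wait for every cook to finish

# The main thread observes the writes made by the other threads.
print(sorted(cookbook_notes))
```

Separate processes running the same function would each append to their own private copy of the list, unless they used an explicit sharing mechanism.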

On a single processor, multithreading generally occurs by time-division multiplexing (as in multitasking): the processor switches between different threads. This context switching generally happens frequently enough that the user perceives the threads or tasks as running at the same time. On a multiprocessor or multi-core system, the threads or tasks will actually run at the same time, with each processor or core running a particular thread or task.

Many modern operating systems directly support both time-sliced and multiprocessor threading with a process scheduler. The kernel of an operating system allows programmers to manipulate threads via the system call interface. Some implementations are called a kernel thread, whereas a lightweight process (LWP) is a specific type of kernel thread that shares the same state and information.

Programs can have user-space threads when threading with timers, signals, or other methods to interrupt their own execution, performing a sort of ad-hoc time-slicing.

How threads differ from processes

Threads differ from traditional multitasking operating system processes in that:

- processes are typically independent, while threads exist as subsets of a process

- processes carry considerably more state information than threads, whereas multiple threads within a process share process state as well as memory and other resources

- processes have separate address spaces, whereas threads share their address space

- processes interact only through system-provided inter-process communication mechanisms

- context switching between threads in the same process is typically faster than context switching between processes

Systems like Windows NT and OS/2 are said to have "cheap" threads and "expensive" processes; in other operating systems there is not so great a difference, except for the cost of an address space switch, which implies a TLB flush.

Multithreading

Multithreading as a widespread programming and execution model allows multiple threads to exist within the context of a single process. These threads share the process's resources but are able to execute independently. The threaded programming model provides developers with a useful abstraction of concurrent execution. However, perhaps the most interesting application of the technology is when it is applied to a single process to enable parallel execution on a multiprocessor system.

This advantage of a multithreaded program allows it to operate faster on computer systems that have multiple CPUs, CPUs with multiple cores, or across a cluster of machines, because the threads of the program naturally lend themselves to truly concurrent execution. In such a case, the programmer needs to be careful to avoid race conditions and other non-intuitive behaviors. In order for data to be correctly manipulated, threads will often need to rendezvous in time in order to process the data in the correct order. Threads may also require mutually exclusive operations (often implemented using semaphores) in order to prevent common data from being simultaneously modified, or read while in the process of being modified. Careless use of such primitives can lead to deadlocks.
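As a hedged illustration of mutual exclusion with a semaphore, the following Python sketch uses a binary semaphore from the standard `threading` module to guard a shared counter. The names `counter`, `guard`, and `add_many` are hypothetical; the point is only that the read-modify-write step is never executed by two threads at once.

```python
import threading

counter = 0
guard = threading.Semaphore(1)  # binary semaphore: at most one holder at a time

def add_many(times):
    global counter
    for _ in range(times):
        with guard:       # acquire; released automatically on leaving the block
            counter += 1  # read-modify-write, kept exclusive by the semaphore

workers = [threading.Thread(target=add_many, args=(10_000,)) for _ in range(4)]
for w in workers:
    w.start()
for w in workers:
    w.join()

print(counter)  # 40000
```

Without the semaphore, increments from different threads could interleave and some updates could be lost, which is the kind of race condition described above.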

Another use of multithreading, applicable even for single-CPU systems, is the ability for an application to remain responsive to input. In a single-threaded program, if the main execution thread blocks on a long-running task, the entire application can appear to freeze. By moving such long-running tasks to a worker thread that runs concurrently with the main execution thread, it is possible for the application to remain responsive to user input while executing tasks in the background. On the other hand, in most cases multithreading is not the only way to keep a program responsive; non-blocking I/O and/or Unix signals can be used to achieve the same result.
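The worker-thread pattern can be sketched as follows in Python. This is an illustrative outline, not a real GUI program: `long_running_task` is a hypothetical stand-in that simply waits on an event, and the list `handled` stands in for user input processed by the main thread while the worker is still busy.

```python
import queue
import threading

def long_running_task(release, results):
    # Stand-in for slow work: the worker blocks here, not the main thread.
    release.wait()
    results.put("done")

release = threading.Event()
results = queue.Queue()
worker = threading.Thread(target=long_running_task, args=(release, results))
worker.start()

# The main thread stays free to handle "input" while the worker is busy.
handled = [f"handled input {i}" for i in range(3)]
still_working = worker.is_alive()  # the worker has not finished yet

release.set()            # let the simulated work complete
worker.join()
outcome = results.get()  # collect the background result
```

The queue is used to hand the result back to the main thread, which avoids sharing a mutable variable without synchronization.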

Operating systems schedule threads in one of two ways:

- Preemptive multithreading is generally considered the superior approach, as it allows the operating system to determine when a context switch should occur. The disadvantage to preemptive multithreading is that the system may make a context switch at an inappropriate time, causing lock convoy, priority inversion or other negative effects which may be avoided by cooperative multithreading.

- Cooperative multithreading, on the other hand, relies on the threads themselves to relinquish control once they are at a stopping point. This can create problems if a thread is waiting for a resource to become available.

Until the late 1990s, CPUs in desktop computers did not have much support for multithreading, because switching between threads was generally already quicker than a full process context switch. Processors in embedded systems, which have higher requirements for real-time behaviors, might support multithreading by decreasing the thread-switch time, perhaps by allocating a dedicated register file for each thread instead of saving/restoring a common register file. The idea of executing instructions from multiple threads simultaneously, known as simultaneous multithreading, gained attention in the late 1990s and reached desktops with Intel's Pentium 4 processor under the name Hyper-Threading. It was dropped from the Intel Core and Core 2 architectures, but was later reinstated in the Core i7 architecture.

Critics of multithreading contend that increasing the use of threads has significant drawbacks:

"Although threads seem to be a small step from sequential computation, in fact, they represent a huge step. They discard the most essential and appealing properties of sequential computation: understandability, predictability, and determinism. Threads, as a model of computation, are wildly nondeterministic, and the job of the programmer becomes one of pruning that nondeterminism." -- The Problem with Threads, Edward A. Lee, UC Berkeley, 2006

Processes, kernel threads, user threads, and fibers

A process is the "heaviest" unit of kernel scheduling. Processes own resources allocated by the operating system. Resources include memory, file handles, sockets, device handles, and windows. Processes do not share address spaces or file resources except through explicit methods such as inheriting file handles or shared memory segments, or mapping the same file in a shared way. Processes are typically preemptively multitasked.

A kernel thread is the "lightest" unit of kernel scheduling. At least one kernel thread exists within each process. If multiple kernel threads can exist within a process, then they share the same memory and file resources. Kernel threads are preemptively multitasked if the operating system's process scheduler is preemptive. Kernel threads do not own resources except for a stack, a copy of the registers including the program counter, and thread-local storage (if any). The kernel can assign one thread to each logical core in a system (a processor that supports hardware multithreading presents multiple logical cores per physical core, while one that does not presents a single logical core per physical core), and can swap out threads that get blocked. However, kernel threads take much longer than user threads to be swapped.
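Thread-local storage can be illustrated with Python's `threading.local`, which gives each thread its own private set of attributes on an otherwise shared object. The sketch below is only an approximation of the concept at the language level; the names `tls`, `seen`, and `work` are hypothetical.

```python
import threading

tls = threading.local()       # each thread sees its own attributes on this object
seen = {}                     # shared dict, used only to collect results
seen_lock = threading.Lock()  # protects the shared dict

def work(value):
    tls.value = value  # stored in this thread's private slot
    with seen_lock:
        seen[threading.current_thread().name] = tls.value

threads = [
    threading.Thread(target=work, args=(i,), name=f"t{i}") for i in range(3)
]
for t in threads:
    t.start()
for t in threads:
    t.join()

# The main thread never assigned tls.value, so it has no such attribute.
main_has_value = hasattr(tls, "value")
```

Each worker reads back exactly the value it stored, even though all of them use the same name `tls.value`.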

Threads are sometimes implemented in userspace libraries, and are then called user threads. The kernel is not aware of them, so they are managed and scheduled in userspace. Some implementations base their user threads on top of several kernel threads to benefit from multi-processor machines (M:N model). In this article the term "thread" (without kernel or user qualifier) defaults to referring to kernel threads. User threads as implemented by virtual machines are also called green threads. User threads are generally fast to create and manage, but cannot take advantage of multithreading or multiprocessing, and they get blocked if all of their associated kernel threads get blocked, even if there are some user threads that are ready to run.

Fibers are an even lighter unit of scheduling which are cooperatively scheduled: a running fiber must explicitly "yield" to allow another fiber to run, which makes their implementation much easier than kernel or user threads. A fiber can be scheduled to run in any thread in the same process. This permits applications to gain performance improvements by managing scheduling themselves, instead of relying on the kernel scheduler (which may not be tuned for the application). Parallel programming environments such as OpenMP typically implement their tasks through fibers.
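Cooperative scheduling with explicit yields can be sketched using Python generators. Generators are not operating-system fibers; they are used here only as an analogy, and the names `fiber` and `run_round_robin` are hypothetical. Each generator runs until it yields, at which point a small round-robin scheduler picks the next one.

```python
from collections import deque

def fiber(name, steps, log):
    for i in range(steps):
        log.append(f"{name}:{i}")
        yield  # explicitly hand control back to the scheduler

def run_round_robin(fibers):
    ready = deque(fibers)
    while ready:
        current = ready.popleft()
        try:
            next(current)      # run until the fiber's next yield
        except StopIteration:
            continue           # fiber finished; drop it from the queue
        ready.append(current)  # fiber may have more work; requeue it

log = []
run_round_robin([fiber("a", 2, log), fiber("b", 3, log)])
print(log)  # ['a:0', 'b:0', 'a:1', 'b:1', 'b:2']
```

Because control changes hands only at a `yield`, a fiber that never yields would prevent all others from running, which is the main hazard of cooperative scheduling.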

Concurrency and data structures

Threads in the same process share the same address space. This allows concurrently running code to couple tightly and conveniently exchange data without the overhead or complexity of IPC. When shared between threads, however, even simple data structures become prone to race hazards if they require more than one CPU instruction to update: two threads may end up attempting to update the data structure at the same time and find it unexpectedly changing underfoot. Bugs caused by race hazards can be very difficult to reproduce and isolate.

To prevent this, threading APIs offer synchronization primitives such as mutexes to lock data structures against concurrent access. On uniprocessor systems, a thread running into a locked mutex must sleep and hence trigger a context switch. On multi-processor systems, the thread may instead poll the mutex in a spinlock. Both of these may sap performance and force processors in SMP systems to contend for the memory bus, especially if the granularity of the locking is fine.
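A minimal sketch of locking a data structure, assuming Python's `threading.Lock` as the mutex, is shown below. The `accounts` dictionary and the `transfer` function are hypothetical; the update takes two separate steps, and the lock makes them appear as a single step to other threads. The last lines show a non-blocking attempt, which is the basic operation a polling (spinning) thread would repeat.

```python
import threading

accounts = {"a": 1000, "b": 1000}
accounts_lock = threading.Lock()  # one coarse-grained mutex for the whole dict

def transfer(src, dst, amount, times):
    for _ in range(times):
        with accounts_lock:
            # Two separate updates; the lock keeps other threads from
            # observing or modifying the dict between them.
            accounts[src] -= amount
            accounts[dst] += amount

t1 = threading.Thread(target=transfer, args=("a", "b", 1, 5000))
t2 = threading.Thread(target=transfer, args=("b", "a", 1, 5000))
t1.start()
t2.start()
t1.join()
t2.join()

total = accounts["a"] + accounts["b"]  # invariant: money is neither created nor lost

with accounts_lock:
    # A non-blocking attempt fails while the mutex is already held.
    polled_while_held = accounts_lock.acquire(blocking=False)
```

A single lock for the whole dictionary is coarse-grained; one lock per account would be finer-grained but would require a consistent acquisition order to avoid deadlock.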

I/O and scheduling

User thread or fiber implementations are typically entirely in userspace. As a result, context switching between user threads or fibers within the same process is extremely efficient because it does not require any interaction with the kernel at all: a context switch can be performed by locally saving the CPU registers used by the currently executing user thread or fiber and then loading the registers required by the user thread or fiber to be executed. Since scheduling occurs in userspace, the scheduling policy can be more easily tailored to the requirements of the program's workload.

However, the use of blocking system calls in user threads (as opposed to kernel threads) or fibers can be problematic. If a user thread or a fiber performs a system call that blocks, the other user threads and fibers in the process are unable to run until the system call returns. A typical example of this problem is when performing I/O: most programs are written to perform I/O synchronously. When an I/O operation is initiated, a system call is made, and does not return until the I/O operation has been completed. In the intervening period, the entire process is "blocked" by the kernel and cannot run, which starves other user threads and fibers in the same process from executing.

A common solution to this problem is providing an I/O API that implements a synchronous interface by using non-blocking I/O internally, and scheduling another user thread or fiber while the I/O operation is in progress. Similar solutions can be provided for other blocking system calls. Alternatively, the program can be written to avoid the use of synchronous I/O or other blocking system calls.
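Python's `asyncio` module follows a similar idea and can serve as a rough illustration: tasks are scheduled in userspace, and awaiting an operation suspends only the current task so that another can run. In the sketch below, `asyncio.sleep` is an assumed stand-in for a non-blocking I/O operation, and the function names are hypothetical.

```python
import asyncio

async def read_record(log):
    log.append("reader: start")
    # Stand-in for non-blocking I/O: awaiting suspends only this task.
    await asyncio.sleep(0.01)
    log.append("reader: done")
    return "record"

async def compute(log):
    # Runs while the reader is waiting for its "I/O" to complete.
    log.append("compute: start")
    log.append("compute: done")
    return 42

async def main():
    log = []
    results = await asyncio.gather(read_record(log), compute(log))
    return log, results

log, results = asyncio.run(main())
print(log)
```

The reader's code still reads top to bottom like synchronous I/O, yet the other task is not starved while the reader waits.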

SunOS 4.x implemented "light-weight processes" or LWPs. NetBSD 2.x+ and DragonFly BSD implement LWPs as kernel threads (1:1 model). SunOS 5.2 through SunOS 5.8, as well as NetBSD 2 to NetBSD 4, implemented a two-level model, multiplexing one or more user-level threads on each kernel thread (M:N model). SunOS 5.9 and later, as well as NetBSD 5, eliminated user threads support, returning to a 1:1 model (http://www.sun.com/software/whitepapers/solaris9/multithread.pdf). FreeBSD 5 implemented the M:N model. FreeBSD 6 supported both 1:1 and M:N; users could choose which one should be used with a given program using /etc/libmap.conf. Starting with FreeBSD 7, 1:1 became the default. FreeBSD 8 no longer supports the M:N model.

The use of kernel threads simplifies user code by moving some of the most complex aspects of threading into the kernel. The program doesn't need to schedule threads or explicitly yield the processor. User code can be written in a familiar procedural style, including calls to blocking APIs, without starving other threads. However, kernel threading may force a context switch between threads at any time, and thus expose race hazards and concurrency bugs that would otherwise lie latent. On SMP systems, this is further exacerbated because kernel threads may literally execute concurrently on separate processors.

1:1 (Kernel-level threading)

Threads created by the user are in 1:1 correspondence with schedulable entities in the kernel. This is the simplest possible threading implementation. Win32 used this approach from the start. On Linux, the usual C library implements this approach (via the NPTL or the older LinuxThreads). The same approach is used by Solaris, NetBSD and FreeBSD.
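As an illustration of the 1:1 correspondence, the sketch below starts several threads and asks each for the identifier the kernel assigned to it. It assumes CPython 3.8 or later on a platform where `threading.get_native_id()` is available; the helper name `collect_native_ids` is hypothetical.

```python
import threading

def collect_native_ids(n_threads):
    """Start n_threads threads and return the kernel thread ID each one reports."""
    ids = []
    ids_lock = threading.Lock()
    # The barrier keeps every thread alive until all have recorded their ID,
    # so the kernel cannot recycle the ID of a thread that has already exited.
    barrier = threading.Barrier(n_threads)

    def worker():
        with ids_lock:
            ids.append(threading.get_native_id())
        barrier.wait()

    threads = [threading.Thread(target=worker) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return ids

if __name__ == "__main__":
    print(collect_native_ids(4))
```

Each user-created thread reports a distinct kernel identifier, which is what a 1:1 model implies; the actual values are platform dependent.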

N:1 (User-level threading)

An N:1 model implies that all application-level threads map to a single kernel-level scheduled entity; the kernel has no knowledge of the application threads. With this approach, context switching can be done very quickly and, in addition, it can be implemented even on simple kernels which do not support threading. One of the major drawbacks, however, is that it cannot benefit from hardware acceleration on multi-threaded processors or multi-processor computers: there is never more than one thread being scheduled at the same time. It is used by GNU Portable Threads.
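A user-level scheduler of this kind can be sketched with Python generators. This is an illustrative toy, not how GNU Portable Threads is implemented; the names `task`, `run_round_robin` and `demo` are hypothetical. Every "thread" runs on the single kernel thread that calls the scheduler, and a switch is an ordinary function return rather than a system call.

```python
from collections import deque

def task(name, steps, log):
    """A user-level thread: does one unit of work, then yields voluntarily."""
    for i in range(steps):
        log.append(f"{name}:{i}")
        yield  # cooperative switch point; the kernel is not involved

def run_round_robin(tasks):
    """Resume each task in turn until all of them have finished."""
    ready = deque(tasks)
    while ready:
        current = ready.popleft()
        try:
            next(current)          # run the task up to its next yield
        except StopIteration:
            continue               # task finished; drop it
        ready.append(current)      # still runnable; back of the queue

def demo():
    log = []
    run_round_robin([task("A", 2, log), task("B", 3, log)])
    return log

if __name__ == "__main__":
    print(demo())  # ['A:0', 'B:0', 'A:1', 'B:1', 'B:2']
```

Because only one kernel entity exists, a task that blocks or never yields stalls every other task, and the tasks never run in parallel.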

M:N (Hybrid threading)

M:N maps some M number of application threads onto some N number of kernel entities, or "virtual processors." This is a compromise between kernel-level ("1:1") and user-level ("N:1") threading. In general, "M:N" threading systems are more complex to implement than either kernel or user threads, because changes to both kernel and user-space code are required. In the M:N implementation, the threading library is responsible for scheduling user threads on the available schedulable entities; this makes context switching of threads very fast, as it avoids system calls. However, this increases complexity and the likelihood of priority inversion, as well as suboptimal scheduling without extensive (and expensive) coordination between the userland scheduler and the kernel scheduler.
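The division of labour in a hybrid model can be sketched as a library-level scheduler that multiplexes many user-level tasks onto a smaller pool of kernel threads. The code below is a toy under stated assumptions: user-level threads are modelled as Python generators, and `user_task`, `run_hybrid` and `kernel_thread` are hypothetical names. A real hybrid implementation also needs kernel cooperation (for example to cope with blocking system calls), which this sketch does not attempt.

```python
import queue
import threading

def user_task(task_id, steps):
    """A user-level thread; each yield returns control to the library scheduler."""
    for step in range(steps):
        yield (task_id, step)

def run_hybrid(n_user_threads, n_kernel_threads, steps=3):
    """Multiplex generator tasks onto a fixed pool of kernel threads."""
    trace = []                       # (task_id, step, kernel thread ident)
    if n_user_threads == 0:
        return trace
    ready = queue.Queue()            # run queue shared by all kernel threads
    for task_id in range(n_user_threads):
        ready.put(user_task(task_id, steps))
    remaining = n_user_threads
    state_lock = threading.Lock()    # protects trace and remaining

    def kernel_thread():
        nonlocal remaining
        while True:
            task = ready.get()
            if task is None:         # sentinel: all user tasks have finished
                return
            try:
                item = next(task)    # run the task up to its next yield
            except StopIteration:
                with state_lock:
                    remaining -= 1
                    if remaining == 0:
                        for _ in range(n_kernel_threads):
                            ready.put(None)
            else:
                with state_lock:
                    trace.append((*item, threading.get_ident()))
                ready.put(task)      # any kernel thread may resume it later

    pool = [threading.Thread(target=kernel_thread) for _ in range(n_kernel_threads)]
    for t in pool:
        t.start()
    for t in pool:
        t.join()
    return trace

if __name__ == "__main__":
    for entry in run_hybrid(6, 2):
        print(entry)
```

A task is only ever in the run queue while it is suspended, so no two kernel threads resume the same task at once. Which kernel thread runs which step is decided by the library and the kernel scheduler together, so the interleaving differs from run to run.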

Implementations

There are many different and incompatible implementations of threading. These include both kernel-level and user-level implementations. However, they often follow more or less closely the POSIX Threads interface.

Kernel-level implementation examples

- Light Weight Kernel Threads (LWKT) in various BSDs

- M:N threading

- Native POSIX Thread Library (NPTL) for Linux, an implementation of the POSIX Threads (pthreads) standard

- Apple Multiprocessing Services version 2.0 and later, which uses the built-in nanokernel in Mac OS 8.6 and later, which was modified to support it

- Microsoft Windows from Windows 95 and Windows NT onwards

User-level implementation examples

- GNU Portable Threads

- FSU Pthreads

- Apple Inc.'s Thread Manager

- REALbasic (includes an API for cooperative threading)

- Netscape Portable Runtime (includes a user-space fibers implementation)

Hybrid implementation examples

- Scheduler activations used by the NetBSD native POSIX threads library implementation (an M:N model as opposed to a 1:1 kernel or userspace implementation model)

- Marcel from the PM2 project

- The OS for the Tera/Cray MTA

- Microsoft Windows 7

Fiber implementation examples

Fibers can be implemented without operating system support, although some operating systems or libraries provide explicit support for them.

- Win32 supplies a fiber API (Windows NT 3.51 SP3 and later)

- Ruby as Green threads

Programming Language Support

Many programming languages support threading in some capacity. Many implementations of C and C++ do not provide direct support for threading on their own, but provide access to the native threading APIs provided by the operating system. Some higher-level (and usually cross-platform) programming languages such as Java, Python, and .NET expose threading to the developer while abstracting the platform-specific differences in threading implementations in the runtime. A number of other programming languages also try to abstract the concept of concurrency and threading from the developer altogether (Cilk, OpenMP, MPI). Some languages are designed for parallelism (Ateji PX, CUDA).

A few interpreted programming languages such as Ruby and (the CPython implementation of) Python support threading, but have a limitation that is known as a Global Interpreter Lock (GIL). The GIL is a mutual exclusion lock held by the interpreter that can prevent the interpreter from concurrently interpreting the application's code on two or more threads at the same time, which effectively limits concurrency on multi-core systems (mostly for processor-bound threads, and not much for network-bound ones).
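The effect on processor-bound threads can be observed with a small experiment. The sketch below is an assumption-laden illustration: `count_primes`, `run_sequential`, `run_threaded` and `timed` are hypothetical helper names, and the workload size is arbitrary. It runs the same CPU-bound function first sequentially and then on several threads and reports both wall-clock times.

```python
import threading
import time

def count_primes(limit):
    """Deliberately CPU-bound: naive count of the primes below limit."""
    total = 0
    for n in range(2, limit):
        if all(n % d for d in range(2, int(n ** 0.5) + 1)):
            total += 1
    return total

def run_sequential(limit, repeats):
    """Run the workload repeats times, one after another."""
    return [count_primes(limit) for _ in range(repeats)]

def run_threaded(limit, repeats):
    """Run the same workload on repeats threads at once."""
    results = [None] * repeats

    def worker(slot):
        results[slot] = count_primes(limit)

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(repeats)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results

def timed(fn, *args):
    """Return fn(*args) together with the elapsed wall-clock seconds."""
    start = time.perf_counter()
    value = fn(*args)
    return value, time.perf_counter() - start

if __name__ == "__main__":
    _, seq_time = timed(run_sequential, 20_000, 4)
    _, thr_time = timed(run_threaded, 20_000, 4)
    print(f"sequential: {seq_time:.2f}s  threaded: {thr_time:.2f}s")
```

On an interpreter with a GIL the threaded run is typically no faster than the sequential one, even on a multi-core machine; the exact timings depend on the hardware and the interpreter build, and an interpreter without a GIL may behave differently.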

Event-driven programming hardware description languages like Verilog have a different threading model which supports extremely large numbers of threads (for modeling hardware).

See also

- Win32 Thread Information Block

- Hardware: Multithreading (computer hardware), Multi-core (computing), Simultaneous multithreading

- Theory: Communicating sequential processes, Computer multitasking, Message passing

- Problems: Thread safety, Priority inversion

- Techniques: Protothreads, Thread pool pattern, Lock-free and wait-free algorithms

- System Calls: clone (Linux system call)

External links

- Answers to frequently asked questions for comp.programming.threads

- What makes multi-threaded programming hard?

- Article "Query by Slice, Parallel Execute, and Join: A Thread Pool Pattern in Java" by Binildas C. A.

- Article "The Free Lunch Is Over: A Fundamental Turn Toward Concurrency in Software" by Herb Sutter

- Article "The Problem with Threads" by Edward Lee

- Concepts of Multithreading

- ConTest - A Tool for Testing Multithreaded Java Applications by IBM

- Debugging and Optimizing Multithreaded OpenMP Programs

- Multithreading in the Solaris Operating Environment

- Parallel computing community

- POSIX threads explained by Daniel Robbins

- The C10K problem