Fault-tolerant computer systems

Types of fault tolerance

Most fault-tolerant computer systems are designed to be able to handle several possible failures, including hardware-related faults such as hard disk failures, input or output device failures, or other temporary or permanent failures; software bugs and errors; interface errors between the hardware and software, including driver failures; operator errors, such as erroneous keystrokes, bad command sequences, or installing unexpected software; and physical damage or other flaws introduced to the system from an outside source.

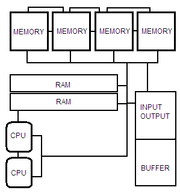

Hardware fault-tolerance is the most common application of these systems, designed to prevent failures due to hardware components. Typically, components have multiple backups and are separated into smaller "segments" that act to contain a fault, and extra redundancy is built into all physical connectors, power supplies, fans, and so on. Special software and instrumentation packages are designed to detect failures. One such technique is fault masking, which ignores faults by seamlessly preparing a backup component to execute an instruction as soon as it is sent. It uses a kind of voting protocol: if the main component and its backups do not give the same results, the flawed output is ignored.

Software fault-tolerance is based more on nullifying programming errors using real-time redundancy, or static "emergency" subprograms that fill in for programs that crash. There are many ways to conduct such fault-regulation, depending on the application and the available hardware.
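
As a minimal sketch of the static "emergency" subprogram idea, the wrapper below runs a primary routine and substitutes a simpler fallback if the primary crashes. The function names (run_with_fallback, primary_average, emergency_average) are hypothetical and chosen only for illustration; real systems use considerably more elaborate schemes.

```python
from typing import Callable, List, TypeVar

T = TypeVar("T")


def run_with_fallback(primary: Callable[[], T], fallback: Callable[[], T]) -> T:
    """Run the primary routine; if it raises, run the fallback instead."""
    try:
        return primary()
    except Exception:
        # The primary program "crashed"; the emergency routine fills in.
        return fallback()


def primary_average() -> float:
    # Hypothetical primary routine with a deliberate bug:
    # an empty input list causes a ZeroDivisionError.
    readings: List[float] = []
    return sum(readings) / len(readings)


def emergency_average() -> float:
    # Hypothetical static fallback: return a safe default value.
    return 0.0


result = run_with_fallback(primary_average, emergency_average)
print(result)  # 0.0
```

The fallback here is deliberately trivial; the point is only that a crash in the primary routine does not stop the caller from receiving a usable value.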

History

The first known fault-tolerant computer was SAPO, built in 1951 in Czechoslovakia by Antonín Svoboda. Its basic design was magnetic drums connected via relays, with a voting method of memory error detection. Several other machines were developed along this line, mostly for military use. Eventually, they separated into three distinct categories: machines that would last a long time without any maintenance, such as the ones used on NASA space probes and satellites; computers that were very dependable but required constant monitoring, such as those used to monitor and control nuclear power plants or supercollider experiments; and finally, computers with a high amount of runtime which would be under heavy use, such as many of the supercomputers used by insurance companies for their probability monitoring.

Most of the development in so-called LLNM (Long Life, No Maintenance) computing was done by NASA during the 1960s, in preparation for Project Apollo and other research aspects. NASA's first machine went into a space observatory, and its second attempt, the JSTAR computer, was used in Voyager. This computer had a backup of memory arrays to use memory recovery methods, and thus it was called the JPL Self-Testing-And-Repairing computer. It could detect its own errors and fix them or bring up redundant modules as needed. The computer is still working today.

Hyper-dependable computers were pioneered mostly by aircraft manufacturers, nuclear power companies, and the railroad industry in the USA. These needed computers with massive amounts of uptime that would fail gracefully enough with a fault to allow continued operation, while relying on the fact that the computer output would be constantly monitored by humans to detect faults. Again, IBM developed the first computer of this kind for NASA for guidance of Saturn V rockets, but later on BNSF, Unisys, and General Electric built their own.

In general, the early efforts at fault-tolerant designs were focused mainly on internal diagnosis, where a fault would indicate something was failing and a worker could replace it. SAPO, for instance, had a method by which faulty memory drums would emit a noise before failure. Later efforts showed that, to be fully effective, the system had to be self-diagnosing and self-repairing: isolating a fault and then switching in a redundant backup while signaling the need for repair. This is known as N-model redundancy, where faults trigger automatic fail-safes and a warning to the operator, and it is still the most common form of level one fault-tolerant design in use today.
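
The isolate, switch over, and warn sequence described above can be sketched roughly as follows. This is an illustrative toy, not a description of any historical machine: the modules are plain functions, a fault is simulated as a raised exception, and the names (run_with_spares, broken_doubler, good_doubler) are assumptions made for the example.

```python
from typing import Callable, List, Sequence, Tuple


def run_with_spares(
    modules: Sequence[Callable[[int], int]], x: int
) -> Tuple[int, List[str]]:
    """Try each redundant module in order.

    Returns the first successful result together with the warnings
    that would be shown to the operator.
    """
    warnings: List[str] = []
    for index, module in enumerate(modules):
        try:
            return module(x), warnings
        except Exception as exc:
            # Isolate the faulty module and record a repair request,
            # then fall through to the next redundant backup.
            warnings.append(f"module {index} failed ({exc}); switching to backup")
    raise RuntimeError("all redundant modules failed")


def broken_doubler(x: int) -> int:
    # Hypothetical faulty component.
    raise OSError("simulated hardware fault")


def good_doubler(x: int) -> int:
    # Hypothetical healthy backup component.
    return 2 * x


value, alerts = run_with_spares([broken_doubler, good_doubler], 21)
print(value)   # 42
print(alerts)  # one warning naming module 0
```

Operation continues with the backup's result, while the warning list stands in for the alert that tells a human operator a repair is needed.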

Voting was another early method, as discussed above: multiple redundant backups operate constantly and check each other's results. If, for example, four components reported an answer of 5 and one component reported an answer of 6, the other four would "vote" that the fifth component was faulty and have it taken out of service. This is called M out of N majority voting.
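
The four-against-one example can be written as a short sketch. The function name majority_vote and the threshold parameter m are assumptions for illustration; the sketch assumes a non-empty list of outputs and simply reports which components disagree with the winning value.

```python
from collections import Counter
from typing import Hashable, List, Sequence, Tuple


def majority_vote(outputs: Sequence[Hashable], m: int) -> Tuple[Hashable, List[int]]:
    """Return the value reported by at least m components, plus the
    indices of the components that disagreed with it."""
    value, count = Counter(outputs).most_common(1)[0]
    if count < m:
        raise ValueError("no value reached the required m votes")
    faulty = [i for i, out in enumerate(outputs) if out != value]
    return value, faulty


# Four components report 5 and the fifth reports 6.
answer, out_of_service = majority_vote([5, 5, 5, 5, 6], m=3)
print(answer)          # 5
print(out_of_service)  # [4]
```

Here the fifth component (index 4) is flagged for removal from service, while the agreed answer of 5 is passed on as the system's output.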

Historically, the trend has been to move away from N-model redundancy and toward M out of N voting, because systems have grown more complex and it has become harder to ensure that the transition from a fault-negative to a fault-positive state does not disrupt operations.

Fault tolerance verification and validation

The most important requirement of design in a fault-tolerant computer system is making sure it actually meets its requirements for reliability. This is done by using various failure models to simulate various failures, and analyzing how well the system reacts. These statistical models are very complex, involving probability curves and specific fault rates, latency curves, error rates, and the like. The most commonly used models are HARP, SAVE, and SHARPE in the USA, and SURF or LASS in Europe.
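
The models named above are far more elaborate than anything that fits in a few lines, and the sketch below is not taken from any of them. As a toy illustration of the kind of calculation involved, it assumes that all components fail independently and share the same reliability r, and computes the probability that at least m of n components are working (a binomial sum). The function name m_out_of_n_reliability is hypothetical.

```python
from math import comb


def m_out_of_n_reliability(m: int, n: int, r: float) -> float:
    """Probability that at least m of n independent components work,
    where each component works with probability r."""
    return sum(comb(n, k) * r**k * (1 - r) ** (n - k) for k in range(m, n + 1))


single = 0.9  # assumed reliability of one component
two_of_three = m_out_of_n_reliability(2, 3, single)
print(f"{two_of_three:.3f}")  # 0.972
```

Under these simplifying assumptions, a 2 out of 3 arrangement of components that each work 90% of the time works about 97.2% of the time; real analyses must also account for effects such as fault latency and correlated failures.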

Fault tolerance research

Research into the kinds of tolerances needed for critical systems involves a large amount of interdisciplinary work. The more complex the system, the more carefully all possible interactions have to be considered and prepared for. Considering the importance of high-value systems in transport, utilities and the military, the field of topics that touch on research is very wide: it ranges from such obvious subjects as software modeling and reliability, or hardware design, to arcane elements such as stochastic models, graph theory, formal or exclusionary logic, parallel processing, remote data transmission, and more.

See also

- Fault-tolerant system

- Resilience (network)

- List of system quality attributes

- Immunity-aware programming