Immunity Aware Programming


When writing firmware for an embedded system, immunity-aware programming refers to programming techniques which improve the tolerance of transient errors in the program counter or other modules of a program that would otherwise lead to failure. Transient errors are typically caused by single event upsets, insufficient power, or by strong electromagnetic signals transmitted by some other "source" device.

Immunity-aware programming is an example of defensive programming and EMC-aware programming. Although most of these techniques apply to the software in the "victim" device to make it more reliable, a few of these techniques apply to software in the "source" device to make it emit less unwanted noise.

can inexpensively improve the electromagnetic compatibility

of an embedded system

.

Embedded systems firmware is usually not considered to be a source of radio frequency interference. Radio emissions are often caused by harmonic

frequencies of the system clock and switching currents. The pulses on these wires can have fast rise and fall times, causing their wires to act as radio transmitters. This effect is increased by badly-designed printed circuit boards. These effects are reduced by using microcontroller output drivers with slower rise times, or by turning off system components.

The microcontroller is easy to control. It is also susceptible to faults from radio frequency interference. Therefore, making the microcontroller's software resist such errors can cheaply improve the system's tolerance for electromagnetic interference by reducing the need for hardware alterations.

microcontroller

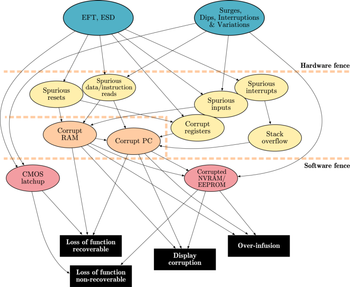

s have specific weak spots which can be strengthened by software that works against electromagnetic interference. Failure mode and effects analysis

of a system and its requirements is often required. Electromagnetic compatibility issues can easily be added to such an analysis.

. This watchdog should be external to the microcontroller, and immune to any plausible electromagnetic interference. It should reset the power supply, briefly switching it off. The watchdog period should be half or less of the time and power required to burn out the microcontroller. The power supply design should be well-grounded and decoupled using capacitors and inductors close to the microcontroller; some typical values are 100uF and 0.1uF in parallel.

Low power can cause serious malfunctions in most microcontrollers. For the CPU to successfully decode and execute instructions, the supplied voltage must not drop below the minimum voltage level. When the supplied voltage drops below this level, the CPU may start to execute some instructions incorrectly. The result is unexpected activity on the internal data and control lines. This activity may cause:

Brownout

detection solves most of those problems in most systems by causing the system to shut down when mains power is unreliable. One typical system retriggers a timer each time that the AC main voltage exceeds 90% of its rated voltage. If the timer expires, it interrupts the microcontroller, which then shuts down its system. Many systems also measure the power supply voltages, to guard against slow power supply degradation.

, and are thus very susceptible to transient disturbances. According to Ohm's law

, high impedance causes high voltage differences. They also are very sensitive to short circuit

from moisture or dust.

One typical failure is when the oscillators' stability is affected. This can cause it to stop, or change its period. The normal system hedges are to have an auxiliary oscillator using some cheap, robust scheme such as a ring of inverters or a resistor-capacitor one-shot timer. After a reset (perhaps caused by a software watchdog), the system may default to these, only switching in the sensitive crystal oscillator once timing measurements have proven it to be stable. It is also common in high-reliability systems to measure the clock frequency by comparing it to an external standard, usually a communications clock, the power line, or a resistor-capacitor timer.

Bursts of electromagnetic interference

can shorten clock periods or cause runt pulses that lead to incorrect data access or command execution. The result is wrong memory content or program pointers. The standard method of overcoming this in hardware is to use an on-chip phase locked loop to generate the microcontroller's actual clock signal. Software

can periodically verify data structures and read critical ports using voting, distributing the reads in time or space.

can actually destroy ports or cause malfunctions.

Most microcontrollers' pins are high impedance inputs or mixed inputs and outputs. High impedance input pins are sensitive to noise, and can register false levels if not properly terminated. Pins that are not terminated inside an IC need resistors attached. These have to be connected to ground or supply, ensuring a known logic state.

The Motor Industry Software Reliability Association

identifies the required steps in case of an error as follows:

(s).

Many processors, such as the Motorola 680x0, feature a hardware trap upon encountering an illegal instruction. A correct instruction, defined in the trap vector, is executed, rather than the random one. Traps can handle a larger range errors than function tokens and NOP slides. Supplementary to illegal instructions, hardware traps securely handle memory access violations, overflows, or a division by zero.

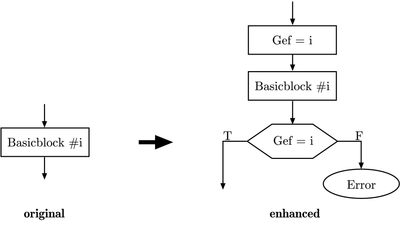

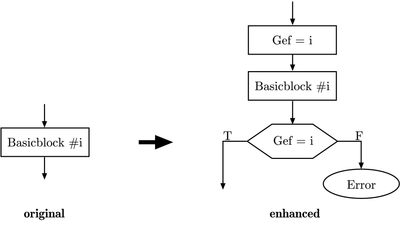

Improved noise immunity can be achieved by execution flow control known as token passing

Improved noise immunity can be achieved by execution flow control known as token passing

. The figure to the right shows the functional principle schematically. This method deals with program flow errors caused by the instruction pointers.

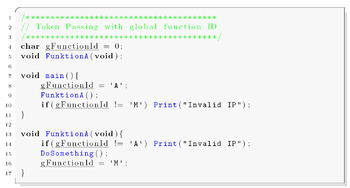

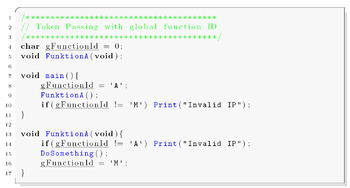

The implementation is simple and efficient. Every function is tagged with a unique function ID. When the function is called, the function ID is saved in a global variable. The function is only executed if the function ID in the global variable and the ID of the function match. If the IDs do not match, an instruction pointer error has occurred, and specific corrective actions can be taken. A sample implementation of token passing using a global variable programmed in C is stated in the following source listing.

The implementation of function tokens increases the program code size by 10 to 20%, and slows down the performance. To improve the implementation, instead of global variables like above, the function ID can be passed as an argument within the functions header as shown in the code sample below.

If an instruction pointer error occurs during the execution and a program points to a memory segment filled with NOP instructions, inevitably an error occurred and is recognized.

Two methods of implementing NOP-Fills are applicable.

When using the CodevisionAVR C compiler, NOP fills can be implemented easily. The chip programmer offers the feature of editing the program flash and eeprom to fill it with a specific value. Using an Atmel

ATMega16, no jump to reset address 0x00 needs to be implemented, as the overflow of the instruction pointer automatically sets its value to 0x00. Unfortunately, resets due to overflow are not equivalent to intentional reset. During the intended reset, all necessary MC registers are reset by hardware, which is not done by a jump to 0x00. So this method will not be applied in the following tests.

These disturbances can be prevented as following:

1. Cyclic update of the most important registers

2. Multiple read of input registers

Another elementary requirement of digital systems is the faultless transmission of data. Communication with other components can be the weak point and a source of errors of a system. A well-thought-out transmission protocol is very important. The techniques described below can also be applied to data transmitted, hence increasing transmission reliability.

A cyclic redundancy check (CRC) is a type of hash function used to produce a checksum, which is a small integer computed from a large block of data, such as network traffic or computer files. CRCs are calculated before and after transmission or duplication, and compared to confirm that they are equal. With a suitably chosen generator polynomial, a CRC detects all one- or two-bit errors, all errors with an odd number of flipped bits, all burst errors if the burst is smaller than the CRC, and most of the wider burst errors.

Parity checks can be applied to single characters (VRC, vertical redundancy check), resulting in an additional parity bit, or to a block of data (LRC, longitudinal redundancy check), issuing a block check character. Both methods can be implemented rather easily by using an XOR operation. The trade-off is that fewer errors can be detected than with a CRC. Parity checks only detect odd numbers of flipped bits; even numbers of bit errors stay undetected. A possible improvement is the usage of both VRC and LRC, called double parity or optimal rectangular code (ORC).

Some microcontrollers feature a hardware CRC unit.
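Where no hardware CRC unit is available, the checks described above can be computed in software. The following is a minimal sketch in C, not a reference implementation: the polynomial 0x07 with initial value 0 is an assumption chosen for illustration, and the function names `crc8`, `parity_bit` and `lrc` are hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

/* Bitwise CRC-8 over a buffer.
   Assumed parameters: polynomial x^8 + x^2 + x + 1 (0x07), initial value 0,
   no bit reflection, no final XOR. */
uint8_t crc8(const uint8_t *data, size_t len)
{
    uint8_t crc = 0x00;
    for (size_t i = 0; i < len; i++) {
        crc ^= data[i];
        for (int bit = 0; bit < 8; bit++) {
            if (crc & 0x80u) {
                crc = (uint8_t)((crc << 1) ^ 0x07u);
            } else {
                crc = (uint8_t)(crc << 1);
            }
        }
    }
    return crc;
}

/* VRC: even-parity bit of a single byte (1 if the byte has an odd number of set bits). */
uint8_t parity_bit(uint8_t byte)
{
    byte ^= (uint8_t)(byte >> 4);
    byte ^= (uint8_t)(byte >> 2);
    byte ^= (uint8_t)(byte >> 1);
    return (uint8_t)(byte & 1u);
}

/* LRC: block check character formed by XOR-ing all bytes of the block. */
uint8_t lrc(const uint8_t *data, size_t len)
{
    uint8_t acc = 0;
    for (size_t i = 0; i < len; i++) {
        acc ^= data[i];
    }
    return acc;
}
```

The sender would append the check value to the block, and the receiver would recompute it and compare. Two flipped bits in the same bit position of different bytes leave the LRC unchanged, which is the weakness of parity noted above; the CRC still changes in that case.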

When the data is read out, the two sets of data are compared. A disturbance is detected if the two data sets are not equal. An error can be reported. If both sets of data are corrupted, a significant error can be reported and the system can react accordingly.
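The comparison on read-out might look like the following sketch. It assumes the simplest possible scheme, two plain copies of a 16-bit value; the type `dup_u16` and the functions `dup_write` and `dup_read` are hypothetical names introduced for illustration.

```c
#include <stdbool.h>
#include <stdint.h>

/* A variable stored twice. On real hardware the two copies would ideally
   live in different memory regions (an assumption, not shown here). */
typedef struct {
    volatile uint16_t primary;
    volatile uint16_t shadow;
} dup_u16;

/* Every write updates both copies. */
void dup_write(dup_u16 *v, uint16_t value)
{
    v->primary = value;
    v->shadow = value;
}

/* Every read compares the copies. Returns false if a disturbance is detected;
   *out is only written when the copies agree. */
bool dup_read(const dup_u16 *v, uint16_t *out)
{
    uint16_t a = v->primary;
    uint16_t b = v->shadow;
    if (a != b) {
        return false;
    }
    *out = a;
    return true;
}
```

With only two copies, a mismatch shows that one copy is corrupted but not which one, so the caller has to decide how to report the error or recover.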

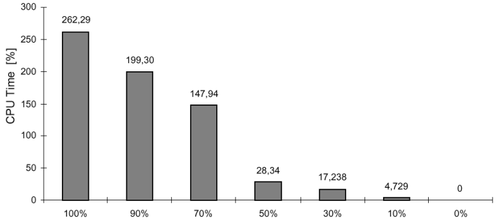

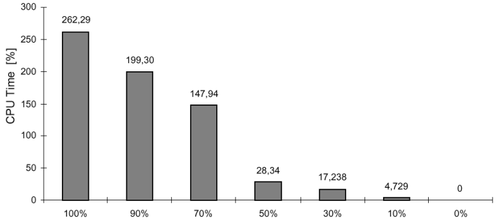

In most cases, safety-critical applications have strict constraints in terms of memory occupation and system performance. Duplicating the whole set of variables and introducing a consistency check before every read operation is the optimum choice from the fault coverage point of view, because it allows an extremely high percentage of faults to be covered by this software redundancy technique. On the other hand, by duplicating a lower percentage of variables one can trade off the obtained fault coverage against the CPU time overhead.

The experimental result shows that duplicating only 50% of the variables is enough to cover 85% of faults with a CPU time overhead of just 28%.


Attention should also be paid to the implementation of the consistency check, as it is usually carried out after each read operation or at the end of each variable's life period. Carefully implementing this check can minimize the CPU time and code size for this application.

As the detection of errors in data is achieved through duplicating all variables and adding consistency checks after every read operation, special considerations have to be applied according to the procedure interfaces. Parameters passed to procedures, as well as return values, are considered to be variables. Hence, every procedure parameter is duplicated, as well as the return values. A procedure is still called only once, but it returns two results, which must hold the same value. The source listing to the right shows a sample implementation of function parameter duplication.
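As a rough illustration of the procedure interface described above, the sketch below passes every parameter twice and returns two results. It is an assumption-laden example rather than the article's listing: the function `scale`, the struct `dup_result` and the helper `result_consistent` are hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>

/* Both results of a duplicated procedure call. */
typedef struct {
    int32_t r0;
    int32_t r1;
} dup_result;

/* Hypothetical procedure: each parameter arrives twice (x0/x1, k0/k1),
   and each result is computed from its own set of copies. */
dup_result scale(int32_t x0, int32_t x1, int32_t k0, int32_t k1)
{
    dup_result res;
    res.r0 = x0 * k0;
    res.r1 = x1 * k1;
    return res;
}

/* Consistency check performed by the caller after the single call. */
bool result_consistent(dup_result res)
{
    return res.r0 == res.r1;
}
```

The procedure is called once; the caller uses the result only if `result_consistent` holds.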

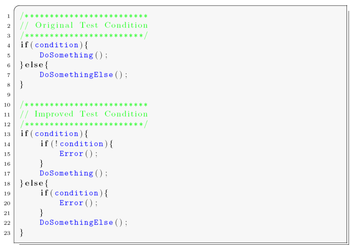

Duplicating a test is one of the most robust methods that exist for generic soft error detection. A drawback is that no strict assumption can be made about the cause of the errors (EMI, ESD, etc.) or about the type of errors to expect (errors affecting the control flow, errors affecting data, etc.). What is known is that erroneous bit changes occur in data bytes while they are stored in memory, cache, or a register, or transmitted on a bus. These data bytes could be operation codes (instructions), memory addresses, or data. Thus, this method is able to detect a wide range of faults, and is not limited to a specific fault model. Using this method, memory use increases about four times, and execution time is about 2.5 times as long as for the same program without test duplication. The source listing to the right shows a sample implementation of the duplication of test conditions.
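One way to picture test duplication is the sketch below. It assumes the tested variable is itself duplicated, so that the cross-check inside each branch uses the second copy; the function `control_step`, its parameters and the `action` values are hypothetical and only serve the illustration.

```c
#include <stdint.h>

typedef enum { ACT_HEAT_ON, ACT_HEAT_OFF, ACT_FAULT } action;

/* temp0 and temp1 are two copies of the same reading (an assumption of this sketch).
   The test is made on temp0 and cross-checked on temp1 inside both branches. */
action control_step(int16_t temp0, int16_t temp1, int16_t setpoint)
{
    if (temp0 < setpoint) {
        /* Cross-check in the "true" branch: the duplicate must agree. */
        if (!(temp1 < setpoint)) {
            return ACT_FAULT;
        }
        return ACT_HEAT_ON;
    } else {
        /* Cross-check in the "false" branch. */
        if (temp1 < setpoint) {
            return ACT_FAULT;
        }
        return ACT_HEAT_OFF;
    }
}
```

A disagreement between the two evaluations means that either the data or the control flow was disturbed between them, so the function reports a fault instead of acting.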

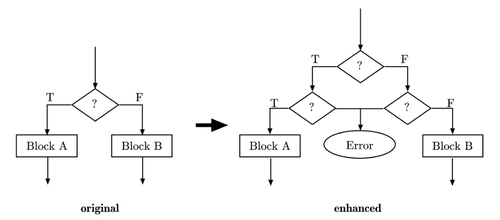

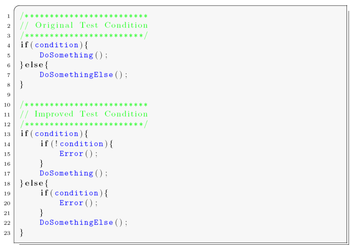

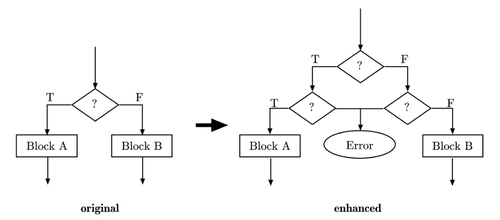

Compared to test duplication, where one condition is cross-checked, with branching duplication the condition is duplicated.


For every conditional test in the program, the condition and the resulting jump should be reevaluated, as shown in the figure. The jump is executed only if the condition is met again; otherwise an error has occurred.

What is the benefit of duplicating data, tests, and branches if the calculated result itself is incorrect? One solution is to duplicate an instruction entirely, but to implement the copies differently. Two different programs with the same functionality, but with different sets of data and different implementations, are executed. Their outputs are compared, and must be equal. This method covers not just bit-flips or processor faults but also programming errors (bugs). If it is intended especially to handle hardware (CPU) faults, the software can be implemented using different parts of the hardware; for example, one implementation uses a hardware multiply and the other implementation multiplies by shifting and adding. This causes a significant overhead (more than a factor of two for the size of the code). On the other hand, the results are outstandingly accurate.
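The multiplication example can be sketched as follows. This is an illustrative sketch under the assumption of 16-bit operands and a 32-bit result; `mul_hw`, `mul_shift_add` and `mul_checked` are hypothetical names, and whether `mul_hw` really uses a hardware multiplier depends on the target and the compiler.

```c
#include <stdbool.h>
#include <stdint.h>

/* First implementation: the compiler's multiply (a hardware multiplier where one exists). */
uint32_t mul_hw(uint16_t a, uint16_t b)
{
    return (uint32_t)a * b;
}

/* Second implementation: classic shift-and-add, using only shifts and the adder. */
uint32_t mul_shift_add(uint16_t a, uint16_t b)
{
    uint32_t acc = 0;
    uint32_t addend = a;
    while (b != 0) {
        if (b & 1u) {
            acc += addend;
        }
        addend <<= 1;
        b >>= 1;
    }
    return acc;
}

/* Run both implementations and accept the result only if they agree. */
bool mul_checked(uint16_t a, uint16_t b, uint32_t *out)
{
    uint32_t r1 = mul_hw(a, b);
    uint32_t r2 = mul_shift_add(a, b);
    if (r1 != r2) {
        return false;
    }
    *out = r1;
    return true;
}
```

The cost is visible directly: both code size and execution time grow, in exchange for a check that also catches a fault confined to one of the two hardware paths.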

Try to reduce the number of edge-triggered interrupts. If interrupts can be triggered only by a level, this helps to ensure that noise on an interrupt pin will not cause an undesired operation. It must be kept in mind that level-triggered interrupts can lead to repeated interrupts as long as the level stays high. This characteristic must be considered in the implementation; repeated unwanted interrupts must be disabled in the ISR. If level triggering is not possible, then a software check of the pin immediately on entry to the edge-triggered interrupt routine, to determine whether the level is correct, should suffice.

For all unused interrupts, an error-handling routine has to be implemented to keep the system in a defined state after an unintended interrupt.
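Both recommendations can be sketched in a host-testable form. The variable `sim_int_pin` stands in for the real input pin register, and the handler names and counters are hypothetical; on a real target the routines would be installed in the interrupt vector table using the compiler's own ISR syntax.

```c
#include <stdint.h>

/* Stand-in for the interrupt pin level (assumption: 1 = active). */
volatile uint8_t sim_int_pin = 0;

volatile uint8_t event_count = 0;          /* genuine events handled */
volatile uint8_t spurious_count = 0;       /* edges rejected as noise */
volatile uint8_t unexpected_irq_count = 0; /* hits on unused vectors */

/* Edge-triggered ISR: re-read the pin on entry and ignore the interrupt
   if the level is not the one a genuine edge would have left behind. */
void on_rising_edge_isr(void)
{
    if (sim_int_pin == 0) {
        spurious_count++;
        return;
    }
    event_count++;
}

/* Common handler for every unused interrupt vector: record the event and
   return, so the system stays in a defined state. */
void unused_irq_handler(void)
{
    unexpected_irq_count++;
}
```

In a real system the unused-vector handler might also log the vector number or request a controlled reset; that choice depends on the application.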

Unintentional resets disturb the correct program execution, and are not acceptable for extensive applications or safety-critical systems.

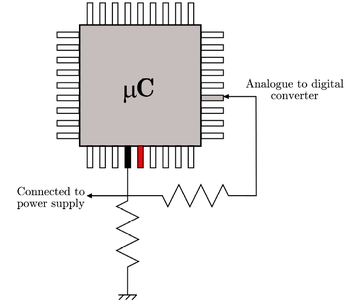

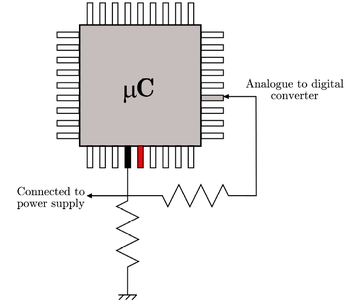

This method is a combination of hard- and software implementations. It proposes a simple circuit to detect an electromagnetic interference using the device's own resources. Most microcontrollers, like the ATMega16, integrate analog to digital converters (ADCs), which could be used to detect unusual power supply fluctuations caused by interferences.


When interference is detected by the software, the microcontroller could enter a safe state while waiting for the disturbance to pass. During this safe state, no critical operations are allowed. The graphic presents how interference detection can be performed. This technique can easily be used with any microcontroller that features an A/D converter.
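The software side of such a detector might be structured as in the sketch below. All numbers are assumptions chosen for illustration (a nominal ADC reading of 512, a tolerance of 20 counts, three quiet samples before leaving the safe state), and the names `supply_disturbed`, `monitor` and `monitor_step` are hypothetical; the ADC sample is passed in as a parameter so the logic can be exercised without hardware.

```c
#include <stdbool.h>
#include <stdint.h>

#define ADC_NOMINAL   512u  /* assumed reading for an undisturbed supply */
#define ADC_TOLERANCE 20u   /* assumed allowed deviation in ADC counts */
#define QUIET_SAMPLES 3u    /* assumed number of clean samples before resuming */

typedef enum { RUN_NORMAL, RUN_SAFE } run_mode;

typedef struct {
    run_mode mode;
    uint8_t quiet; /* consecutive clean samples seen while in the safe state */
} monitor;

/* True if the sample deviates from the nominal value by more than the tolerance. */
bool supply_disturbed(uint16_t sample)
{
    uint16_t diff = (sample > ADC_NOMINAL) ? (uint16_t)(sample - ADC_NOMINAL)
                                           : (uint16_t)(ADC_NOMINAL - sample);
    return diff > ADC_TOLERANCE;
}

/* Called once per ADC sample: enter the safe state on a disturbance and
   leave it only after several consecutive clean samples. */
void monitor_step(monitor *m, uint16_t sample)
{
    if (supply_disturbed(sample)) {
        m->mode = RUN_SAFE;
        m->quiet = 0;
    } else if (m->mode == RUN_SAFE) {
        m->quiet++;
        if (m->quiet >= QUIET_SAMPLES) {
            m->mode = RUN_NORMAL;
        }
    }
}
```

Critical operations would then be guarded by a check that `mode` is `RUN_NORMAL`.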

A watchdog timer is an independent parallel component which detects software errors and hardware errors reliably.

The software informs the watchdog at regular intervals that it is still working properly. If the watchdog is not informed, it means that the software is not working as specified any more. Then the watchdog resets the system to a defined state. During the reset, the device is not able to process data and does not react to calls.

As the strategy to reset the watchdog timer is very important, two requirements have to be attended:

Simply activating the watchdog and resetting its timer at regular intervals does not make optimal use of a watchdog. For best results, the refresh cycle of the timer must be set as short as possible and the refresh must be called from the main function, so that a reset can be performed before damage is caused or an error occurs. If a microcontroller does not have an internal watchdog, similar functionality can be implemented by using a timer interrupt or an external device.
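A timer-interrupt-based substitute, refreshed only from the main loop, could be sketched as follows. The timeout of five ticks, the two task flags, and all function names are assumptions made for illustration; on real hardware `wdt_tick` would run in a timer ISR and `reset_requested` would trigger an actual reset.

```c
#include <stdint.h>

#define WDT_TIMEOUT_TICKS 5u        /* assumed timeout in timer ticks */
#define TASK_A 0x01u                /* hypothetical task identifiers */
#define TASK_B 0x02u
#define ALL_TASKS (TASK_A | TASK_B)

volatile uint8_t wdt_counter = WDT_TIMEOUT_TICKS;
volatile uint8_t reset_requested = 0;
volatile uint8_t alive_flags = 0;

/* Refresh the watchdog (restart its countdown). */
void wdt_kick(void)
{
    wdt_counter = WDT_TIMEOUT_TICKS;
}

/* Called from the timer interrupt: count down and request a reset at zero. */
void wdt_tick(void)
{
    if (wdt_counter > 0) {
        wdt_counter--;
        if (wdt_counter == 0) {
            reset_requested = 1;
        }
    }
}

/* Each task reports that it has completed one healthy cycle. */
void task_checkin(uint8_t task_id)
{
    alive_flags |= task_id;
}

/* Called from the main loop only: refresh the watchdog if, and only if,
   every task has checked in since the last refresh. */
void main_loop_service(void)
{
    if (alive_flags == ALL_TASKS) {
        alive_flags = 0;
        wdt_kick();
    }
}
```

Refreshing from a single place in the main loop, rather than from an interrupt, means a hung main loop cannot keep the watchdog alive by accident.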

A brown-out detection circuit monitors the VCC level during operation by comparing it to a fixed trigger level. When VCC drops below the trigger level, the brown-out reset is immediately activated. When VCC rises again, the MCU is restarted after a certain delay.


Task and objectives

Microcontrollers' firmware can inexpensively improve the electromagnetic compatibility of an embedded system.

Embedded systems firmware is usually not considered to be a source of radio frequency interference. Radio emissions are often caused by harmonic frequencies of the system clock and switching currents. The pulses on these wires can have fast rise and fall times, causing their wires to act as radio transmitters. This effect is increased by badly-designed printed circuit boards. These effects are reduced by using microcontroller output drivers with slower rise times, or by turning off system components.

The microcontroller is easy to control. It is also susceptible to faults from radio frequency interference. Therefore, making the microcontroller's software resist such errors can cheaply improve the system's tolerance for electromagnetic interference by reducing the need for hardware alterations.

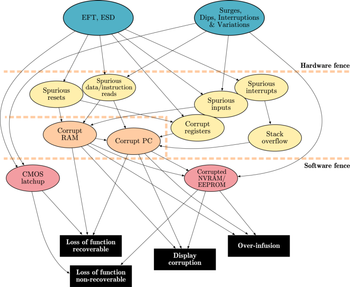

Possible interferences of microcontroller-based systems

CMOS microcontrollers have specific weak spots which can be strengthened by software that works against electromagnetic interference. Failure mode and effects analysis of a system and its requirements is often required. Electromagnetic compatibility issues can easily be added to such an analysis.

Power supply

Slow changes of power supply voltage do not cause significant disturbances, but rapid changes can cause unpredictable faults. If a voltage exceeds parameters in the controller's data sheet by 150 percent, it can cause the input port or the output port to get hung in one state, known as CMOS latch-up. Without internal current control, latch-up causes the microcontroller to burn out. The standard solution is a mix of software and hardware changes. Most embedded systems have a software watchdog timer. This watchdog should be external to the microcontroller, and immune to any plausible electromagnetic interference. It should reset the power supply, briefly switching it off. The watchdog period should be half or less of the time and power required to burn out the microcontroller. The power supply design should be well-grounded and decoupled using capacitors and inductors close to the microcontroller; some typical values are 100 µF and 0.1 µF in parallel.

Low power can cause serious malfunctions in most microcontrollers. For the CPU to successfully decode and execute instructions, the supplied voltage must not drop below the minimum voltage level. When the supplied voltage drops below this level, the CPU may start to execute some instructions incorrectly. The result is unexpected activity on the internal data and control lines. This activity may cause:

- CPU register corruption

- I/O register corruption

- I/O pin random toggling

- SRAM corruption

- EEPROM corruption

Brownout detection solves most of those problems in most systems by causing the system to shut down when mains power is unreliable. One typical system retriggers a timer each time that the AC main voltage exceeds 90% of its rated voltage. If the timer expires, it interrupts the microcontroller, which then shuts down its system. Many systems also measure the power supply voltages, to guard against slow power supply degradation.

The oscillator

The input ports of CMOS oscillators have high impedances, and are thus very susceptible to transient disturbances. According to Ohm's law, even a small induced current through a high impedance produces a large voltage difference. These inputs are also very sensitive to short circuits from moisture or dust.

One typical failure is a loss of oscillator stability, which can cause the oscillator to stop or to change its period. The usual safeguard is an auxiliary oscillator using some cheap, robust scheme such as a ring of inverters or a resistor-capacitor one-shot timer. After a reset (perhaps caused by a software watchdog), the system may default to the auxiliary oscillator, only switching in the sensitive crystal oscillator once timing measurements have proven it to be stable. It is also common in high-reliability systems to measure the clock frequency by comparing it to an external standard, usually a communications clock, the power line, or a resistor-capacitor timer.

Bursts of electromagnetic interference can shorten clock periods or cause runt pulses that lead to incorrect data access or command execution. The result is wrong memory content or program pointers. The standard method of overcoming this in hardware is to use an on-chip phase locked loop to generate the microcontroller's actual clock signal. Software can periodically verify data structures and read critical ports using voting, distributing the reads in time or space.
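Reading a critical port with voting can be sketched as a bitwise two-out-of-three majority over three reads spread in time. In the sketch below the variable `sim_port_in` stands in for the real memory-mapped input register, and `read_port_once`, `vote3` and `read_port_voted` are hypothetical names; a real implementation might also insert a delay between the reads.

```c
#include <stdint.h>

/* Stand-in for a memory-mapped input port (assumption for host testing). */
volatile uint8_t sim_port_in = 0;

/* One raw read of the port. */
static uint8_t read_port_once(void)
{
    return sim_port_in;
}

/* Bitwise majority: each result bit is set if at least two samples have it set. */
uint8_t vote3(uint8_t a, uint8_t b, uint8_t c)
{
    return (uint8_t)((a & b) | (a & c) | (b & c));
}

/* Read the port three times and return the majority value. */
uint8_t read_port_voted(void)
{
    uint8_t s1 = read_port_once();
    uint8_t s2 = read_port_once();
    uint8_t s3 = read_port_once();
    return vote3(s1, s2, s3);
}
```

A single disturbed sample is outvoted by the other two; a disturbance that lasts across two of the three reads is not corrected.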

Input/Output ports

Input/Output ports (including address lines and data lines) connected by long lines or external peripherals are the antennae that permit disturbances to have effects. Electromagnetic interference can lead to incorrect data and addresses on these lines. Strong fluctuations can cause the computer to misread I/O registers or even stop communication with these ports. Electrostatic discharge can actually destroy ports or cause malfunctions.

Most microcontrollers' pins are high impedance inputs or mixed inputs and outputs. High impedance input pins are sensitive to noise, and can register false levels if not properly terminated. Pins that are not terminated inside an IC need resistors attached. These have to be connected to ground or supply, ensuring a known logic state.

Corrective actions

An analysis of possible errors before correction is very important. The cause must be determined so that the problem can be fixed. The Motor Industry Software Reliability Association identifies the required steps in case of an error as follows:

- Inform or warn the user

- Store the faulty data until a defined reset can be carried out

- Keep the system in a defined state until the error can be corrected

Instruction pointer (IP) error management

A disturbed instruction pointer can lead to serious errors, such as an undefined jump to an arbitrary point in the memory, where illegal instructions are read. The state of the system will be undefined. IP errors can be handled by use of software-based solutions such as function tokens and NOP slides.

Many processors, such as the Motorola 680x0, feature a hardware trap upon encountering an illegal instruction. A correct instruction, defined in the trap vector, is executed, rather than the random one. Traps can handle a larger range of errors than function tokens and NOP slides. In addition to illegal instructions, hardware traps securely handle memory access violations, overflows, or a division by zero.

Token passing (function token)

Improved noise immunity can be achieved by execution flow control known as token passing. The figure to the right shows the functional principle schematically. This method deals with program flow errors caused by the instruction pointers.

The implementation is simple and efficient. Every function is tagged with a unique function ID. When the function is called, the function ID is saved in a global variable. The function is only executed if the function ID in the global variable and the ID of the function match. If the IDs do not match, an instruction pointer error has occurred, and specific corrective actions can be taken. A sample implementation of token passing using a global variable programmed in C is stated in the following source listing.
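A minimal sketch of the global-variable variant is shown below. It is an illustration written for this text, not the original listing: the function `motor_update`, its ID value, the wrapper `call_motor_update` and the counters are hypothetical, and clearing the token after the check is an added design choice.

```c
#include <stdint.h>

#define ID_MOTOR_UPDATE 0x3Cu  /* hypothetical unique function ID */

volatile uint8_t g_token = 0;          /* global token, set by the caller */
volatile uint8_t g_ip_error_count = 0; /* detected instruction pointer errors */
volatile uint8_t g_motor_updates = 0;  /* stands in for the function's real work */

/* Placeholder corrective action; a real system might reset or enter a safe state. */
static void ip_error_handler(void)
{
    g_ip_error_count++;
}

/* The protected function only runs if the global token carries its own ID. */
void motor_update(void)
{
    if (g_token != ID_MOTOR_UPDATE) {
        ip_error_handler();  /* entered without a matching token */
        return;
    }
    g_token = 0;             /* consume the token so it cannot be reused */
    g_motor_updates++;
}

/* A legitimate call saves the callee's ID in the global token first. */
void call_motor_update(void)
{
    g_token = ID_MOTOR_UPDATE;
    motor_update();
}
```

If a disturbed instruction pointer lands inside `motor_update` without passing through a legitimate call site, the token does not match and the error handler runs instead of the function body.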

The implementation of function tokens increases the program code size by 10 to 20% and reduces performance. To improve the implementation, the function ID can be passed as an argument in the function's header instead of through a global variable, as shown in the code sample below.
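The argument-based variant might be sketched like this. The function `set_valve`, its ID and the status type are hypothetical names chosen for illustration.

```c
#include <stdint.h>

#define ID_SET_VALVE 0x5Au  /* hypothetical unique function ID */

typedef enum { CALL_OK = 0, CALL_IP_ERROR = 1 } call_status;

static uint8_t valve_position = 0;

/* The token travels in the parameter list; the body runs only on a match. */
call_status set_valve(uint8_t token, uint8_t position)
{
    if (token != ID_SET_VALVE) {
        return CALL_IP_ERROR;  /* reached without a legitimate call */
    }
    valve_position = position;
    return CALL_OK;
}

/* Accessor used to observe the effect of set_valve. */
uint8_t get_valve(void)
{
    return valve_position;
}
```

A legitimate caller writes `set_valve(ID_SET_VALVE, 40)`; any other token value leaves the valve position untouched and reports an error to the caller.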

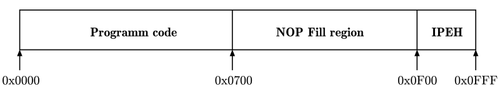

NOP slide

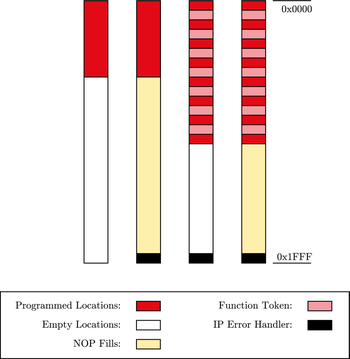

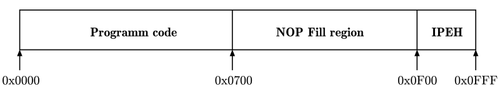

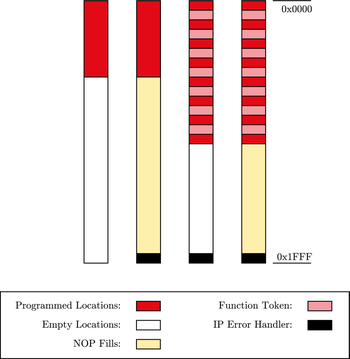

With NOP-Fills, the reliability of a system in case of a disturbed instruction pointer can be improved in some cases. The entire program memory that is not used by the program code is filled with No-Operation (NOP) instructions. In machine code a NOP instruction is often represented by 0x00 (e.g. Intel 8051, ATMega16 etc.). The system is kept in a defined state. At the end of the physical program memory, an instruction pointer error handler (IPEH, IP-Error-Handler) has to be implemented. In some cases this can be a simple reset. If an instruction pointer error occurs during execution and the program counter points to a memory segment filled with NOP instructions, an error has inevitably occurred and is recognized.

Two methods of implementing NOP-Fills are applicable.

- In the first method, the unused physical memory is set to 0x00 manually by search and replace in the (HEX) program file. The drawback of this method is that this has to be done after every compilation.

- The second method is to include a corresponding number of NOP assembler directives directly in the program code.
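The first method can be automated as a post-build step instead of a manual search and replace. The sketch below works on a raw binary image held in a buffer and assumes that NOP encodes as 0x00 and that the error handler stub is supplied as a byte sequence; `nop_fill` and its parameters are hypothetical, and parsing an actual HEX file is left out.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define NOP_OPCODE 0x00u  /* assumption: NOP encodes as 0x00 on the target */

/*
 * Fill image[used .. size) with NOPs and place the handler stub at the
 * very end of the image. Returns 0 on success, -1 if the stub does not fit.
 */
static int nop_fill(uint8_t *image, size_t size, size_t used,
                    const uint8_t *handler, size_t handler_len)
{
    if (used > size || handler_len > size - used) {
        return -1;
    }
    /* Unused region between program code and handler becomes a NOP slide. */
    memset(image + used, NOP_OPCODE, size - used - handler_len);
    if (handler_len > 0) {
        memcpy(image + size - handler_len, handler, handler_len);
    }
    return 0;
}
```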

When using the CodevisionAVR C compiler, NOP fills can be implemented easily. The chip programmer offers the feature of editing the program flash and EEPROM to fill them with a specific value. On an Atmel ATMega16, no jump to the reset address 0x00 needs to be implemented, as the overflow of the instruction pointer automatically sets its value to 0x00. Unfortunately, a reset due to overflow is not equivalent to an intentional reset. During an intended reset, all necessary microcontroller registers are reset by hardware, which is not done by a jump to 0x00. So this method will not be applied in the following tests.

I/O register errors

Microcontroller architecture requires the I/O leads to be placed at the outer edge of the silicon die. Thus I/O contacts are strongly affected by transient disturbances on their way to the silicon core, and I/O registers are among the most vulnerable parts of the microcontroller. Wrongly read I/O registers may lead to an incorrect system state. The most serious errors can occur at the reset port and interrupt input ports. Disturbed data direction registers (DDR) may inhibit writing to the bus. These disturbances can be countered as follows:

1. Cyclic update of the most important registers

- Cyclically rewriting the most important registers and the data direction registers at the shortest possible intervals reduces errors: a wrongly set bit is corrected before it can have negative effects.

2. Multiple read of input registers

- A further method of filtering disturbances is to read input registers multiple times. The values read are then checked for consistency. If the values are consistent, they can be considered valid. Defining a valid value range and/or calculating a mean value can improve the results for some applications.

- Side effect: increased activity

- A drawback is the increased activity due to permanent updates and readouts of peripherals. This activity may cause additional emissions and failures.

- External interrupt ports; stack overflow

- External interrupts are triggered by falling/rising edges or high/low potential at the interrupt port, leading to an interrupt request (IRQ) in the controller. Hardware interrupts are divided into maskable interrupts and non-maskable interrupts (NMI). The triggering of maskable interrupts can be stopped in some time-critical functions. If an interrupt is called, the current instruction pointer (IP) is saved on the stack, and the stack pointer (SP) is decremented. The address of the interrupt service routine (ISR) is read from the interrupt vector table and loaded to the IP register, and the ISR is executed as a consequence.

- If disturbances generate interrupts faster than they can be processed, the stack grows until all memory is used, and data on the stack or other data might be overwritten. A defensive software strategy is to watch the stack pointer (SP) so that the stack is stopped from growing beyond a defined address. The value of the stack pointer can be checked at the start of the interrupt service routine. If the SP points to an address outside the defined stack limits, a reset can be executed.
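The register refresh, the multiple-read filter, and the stack-limit check from the list above can be sketched in portable C as follows. All names are hypothetical; registers are modelled as `volatile` bytes, and the stack check assumes a stack that grows downward between two known addresses. On real hardware the register addresses and the way the stack pointer is read are device-specific.

```c
#include <stdbool.h>
#include <stdint.h>

/* Rewrite a register from its shadow copy; report whether it had drifted. */
static bool refresh_register(volatile uint8_t *reg, uint8_t shadow)
{
    bool was_corrupted = (*reg != shadow);
    *reg = shadow;            /* always rewrite, even if it looked correct */
    return was_corrupted;
}

/* Read an input several times; accept the value only if all reads agree. */
static bool read_input_filtered(const volatile uint8_t *reg, unsigned reads,
                                uint8_t *value)
{
    uint8_t first = *reg;
    for (unsigned i = 1; i < reads; i++) {
        if (*reg != first) {
            return false;     /* inconsistent reads: treat as disturbed */
        }
    }
    *value = first;
    return true;
}

/* Intended for the start of an ISR: is SP still inside the stack area? */
static bool stack_pointer_ok(uintptr_t sp, uintptr_t stack_limit,
                             uintptr_t stack_top)
{
    return sp >= stack_limit && sp <= stack_top;
}
```

When `stack_pointer_ok` returns false, the ISR would trigger the reset described above instead of continuing.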

Data redundancy

In systems without error detection and correction units, the reliability of the system can be improved by providing protection through software. Protecting the entire memory (code and data) in software may not be practical, as it causes an unacceptable amount of overhead, but software protection is a low-cost solution for selected code segments.

Another elementary requirement of digital systems is the faultless transmission of data. Communication with other components can be a weak point and a source of errors in a system, so a well-thought-out transmission protocol is very important. The techniques described below can also be applied to transmitted data, increasing transmission reliability.

Cyclic redundancy and parity check

A cyclic redundancy check (CRC) is a type of hash function used to produce a checksum, which is a small integer computed from a large block of data, such as network traffic or computer files. CRCs are calculated before and after transmission or duplication, and compared to confirm that they are equal. With a suitable generator polynomial, a CRC detects all one- and two-bit errors, all errors with an odd number of flipped bits, all burst errors shorter than the CRC width, and most longer burst errors.

Parity checks can be applied to single characters (VRC, vertical redundancy check), resulting in an additional parity bit, or to a block of data (LRC, longitudinal redundancy check), yielding a block check character. Both methods can be implemented rather easily using an XOR operation. The trade-off is that fewer errors can be detected than with a CRC: parity checks only detect odd numbers of flipped bits, while even numbers of bit errors stay undetected. A possible improvement is the use of both VRC and LRC, called double parity or optimal rectangular code (ORC).

Some microcontrollers feature a hardware CRC unit.
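Where no hardware unit is available, the checks can be computed in software. The sketch below uses one common parameter set (CRC-16 with polynomial 0x1021 and initial value 0xFFFF, often called CRC-16/CCITT-FALSE) as an assumption; the article does not prescribe a particular polynomial. The parity helpers show the XOR-based VRC and LRC.

```c
#include <stddef.h>
#include <stdint.h>

/* Bitwise CRC-16, polynomial 0x1021, initial value 0xFFFF, MSB first. */
static uint16_t crc16_ccitt(const uint8_t *data, size_t len)
{
    uint16_t crc = 0xFFFFu;
    for (size_t i = 0; i < len; i++) {
        crc ^= (uint16_t)((uint16_t)data[i] << 8);
        for (int bit = 0; bit < 8; bit++) {
            if (crc & 0x8000u) {
                crc = (uint16_t)((crc << 1) ^ 0x1021u);
            } else {
                crc = (uint16_t)(crc << 1);
            }
        }
    }
    return crc;
}

/* VRC: even-parity bit of a single byte (1 if the byte has odd weight). */
static uint8_t parity_bit(uint8_t b)
{
    b ^= (uint8_t)(b >> 4);
    b ^= (uint8_t)(b >> 2);
    b ^= (uint8_t)(b >> 1);
    return (uint8_t)(b & 1u);
}

/* LRC: block check character, the XOR of all bytes in the block. */
static uint8_t lrc_xor(const uint8_t *data, size_t len)
{
    uint8_t lrc = 0;
    for (size_t i = 0; i < len; i++) {
        lrc ^= data[i];
    }
    return lrc;
}
```

Flipping the same bit position in two bytes of a block leaves the LRC unchanged but changes the CRC, which illustrates the trade-off between the two methods.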

Different kinds of duplication

A specific method of data redundancy is duplication, which can be applied in several ways, as described in the following:

- Data duplication

- To cope with corruption of data, multiple copies of important registers and variables can be stored. Consistency checks between memory locations storing the same values, or voting techniques, can then be performed when accessing the data.

- Two different modifications to the source code need to be implemented.

- The first one corresponds to duplicating some or all of the program variables to introduce data redundancy, and modifying all the operators to manage the introduced replica of the variables.

- The second modification introduces consistency checks in the control flow, so that consistency between the two copies of each variable is verified.

When the data is read, the two copies are compared. If they differ, a disturbance has been detected and an error can be reported. If both copies are corrupted, a significant error can be reported and the system can react accordingly.

In most cases, safety-critical applications have strict constraints in terms of memory occupation and system performance. Duplicating the whole set of variables and introducing a consistency check before every read operation is the optimum choice from the fault coverage point of view, since it allows an extremely high percentage of faults to be covered by this software redundancy technique. On the other hand, by duplicating a lower percentage of variables, one can trade fault coverage against CPU time overhead.

Attention should also be paid to the implementation of the consistency check, as it is usually carried out after each read operation or at the end of each variable's life period. Carefully implementing this check can minimize the CPU time and code size for this application.
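A minimal sketch of data duplication with a consistency check on read, plus a bitwise majority vote for the case where three copies are kept. The type and function names (`dup_u16`, `dup_write`, `dup_read`, `vote3`) are illustrative and not taken from the source.

```c
#include <stdbool.h>
#include <stdint.h>

/* A variable stored twice; both copies must agree when it is read. */
typedef struct {
    volatile uint16_t a;
    volatile uint16_t b;
} dup_u16;

static void dup_write(dup_u16 *v, uint16_t x)
{
    v->a = x;
    v->b = x;
}

/* Returns false if the copies disagree, i.e. a disturbance was detected. */
static bool dup_read(const dup_u16 *v, uint16_t *out)
{
    uint16_t a = v->a;
    uint16_t b = v->b;
    if (a != b) {
        return false;
    }
    *out = a;
    return true;
}

/* With three copies, a bitwise majority vote can also correct the error. */
static uint16_t vote3(uint16_t a, uint16_t b, uint16_t c)
{
    return (uint16_t)((a & b) | (a & c) | (b & c));
}
```

Two copies only detect a corruption; the three-copy vote masks any error confined to one copy, at the cost of more memory.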

- Function parameter duplication

As the detection of errors in data is achieved by duplicating all variables and adding consistency checks after every read operation, special consideration has to be given to procedure interfaces. Parameters passed to procedures, as well as return values, are considered to be variables. Hence, every procedure parameter is duplicated, as are the return values. A procedure is still called only once, but it returns two results, which must hold the same value. The source listing to the right shows a sample implementation of function parameter duplication.
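One possible shape for such an interface in C is sketched below: each parameter arrives twice, and the two results are handed back through two output pointers. `scale_dup` and its parameters are hypothetical. An optimizing compiler may merge the duplicated computation, so real implementations typically need `volatile` or compiler-specific measures.

```c
#include <stdbool.h>
#include <stdint.h>

/*
 * Multiply x by k with duplicated parameters and duplicated results.
 * Returns false if the copies of any parameter or of the result disagree.
 */
static bool scale_dup(int32_t x0, int32_t x1, int32_t k0, int32_t k1,
                      int32_t *r0, int32_t *r1)
{
    if (x0 != x1 || k0 != k1) {
        return false;         /* a parameter was corrupted in transit */
    }
    *r0 = x0 * k0;            /* result from the first set of copies */
    *r1 = x1 * k1;            /* result from the second set of copies */
    return *r0 == *r1;        /* both results must hold the same value */
}
```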

- Test duplication

Duplicating a test is one of the most robust methods for generic soft error detection. The method makes no strict assumption about the cause of the errors (EMI, ESD, etc.) or about the type of errors to expect (errors affecting the control flow, errors affecting data, etc.). It only assumes erroneous bit changes in bytes stored in memory, cache, or registers, or transmitted on a bus; these bytes may be operation codes (instructions), memory addresses, or data. Thus, the method is able to detect a wide range of faults and is not limited to a specific fault model. Its cost is high: memory use increases about fourfold, and execution time is about 2.5 times as long as for the same program without test duplication. The source listing to the right shows a sample implementation of the duplication of test conditions.

- Branching duplication

For every conditional test in the program, the condition and the resulting jump should be reevaluated, as shown in the figure. The jump is executed only if the condition is met again; otherwise an error has occurred.
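Test and branch duplication can be sketched as follows: the condition is evaluated again inside each branch, and a disagreement between the two evaluations is treated as a fault. The names `limit_checked`, `fault_detected`, and `dup_faults` are hypothetical; the operand is read through a `volatile` pointer so that the compiler does not remove the second evaluation.

```c
static unsigned dup_faults = 0;   /* number of detected inconsistencies */

/* Placeholder reaction (assumption: a real system might reset instead). */
static void fault_detected(void)
{
    dup_faults++;
}

/* Clamp *value to limit, re-checking the condition inside each branch. */
static int limit_checked(const volatile int *value, int limit)
{
    if (*value > limit) {
        if (!(*value > limit)) {  /* duplicated test on the taken branch */
            fault_detected();
        }
        return limit;
    } else {
        if (*value > limit) {     /* duplicated test on the other branch */
            fault_detected();
        }
        return *value;
    }
}
```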

- Instruction duplication and diversity in implementation

What is the benefit of duplicating data, tests, and branches if the calculated result itself is incorrect? One solution is to duplicate a computation entirely, but implement the copies differently. Two different programs with the same functionality, but with different sets of data and different implementations, are executed; their outputs are compared and must be equal. This method covers not just bit flips or processor faults but also programming errors (bugs). If the intent is specifically to handle hardware (CPU) faults, the software can be implemented using different parts of the hardware; for example, one implementation uses a hardware multiply and the other multiplies by shifting and adding. This causes a significant overhead (more than a factor of two in code size). On the other hand, the results are very accurate.
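The multiplication example mentioned above can be sketched like this: one path uses the `*` operator (which maps to a hardware multiplier where one exists), the other multiplies by shifting and adding, and the result is accepted only if both agree. Function names are illustrative.

```c
#include <stdbool.h>
#include <stdint.h>

/* Implementation 1: the compiler's multiply (hardware multiplier if any). */
static uint32_t mul_hw(uint16_t a, uint16_t b)
{
    return (uint32_t)a * b;
}

/* Implementation 2: shift-and-add, avoiding the multiply instruction. */
static uint32_t mul_shift_add(uint16_t a, uint16_t b)
{
    uint32_t acc = 0;
    uint32_t addend = a;
    while (b != 0) {
        if (b & 1u) {
            acc += addend;    /* add the shifted multiplicand for this bit */
        }
        addend <<= 1;
        b >>= 1;
    }
    return acc;
}

/* Accept the product only if both implementations agree. */
static bool mul_diverse(uint16_t a, uint16_t b, uint32_t *result)
{
    uint32_t r1 = mul_hw(a, b);
    uint32_t r2 = mul_shift_add(a, b);
    if (r1 != r2) {
        return false;
    }
    *result = r1;
    return true;
}
```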

Reset ports and interrupt ports

Reset ports and interrupts are very important, as they can be triggered by rising/falling edges or high/low potential at the interrupt port. Transient disturbances can lead to unwanted resets or trigger interrupts, and thus cause the entire system to crash. For every triggered interrupt, the instruction pointer is saved on the stack, and the stack pointer is decremented.

Try to reduce the number of edge-triggered interrupts. If interrupts can be triggered only by a level, noise on an interrupt pin is less likely to cause an undesired operation. Keep in mind that level-triggered interrupts can lead to repeated interrupts as long as the level stays high. The implementation must take this into account; repeated unwanted interrupts must be disabled in the ISR. If level triggering is not possible, a software check of the pin level immediately on entry to an edge-triggered interrupt should suffice.

For all unused interrupts, an error-handling routine has to be implemented to keep the system in a defined state after an unintended interrupt.

Unintentional resets disturb the correct program execution, and are not acceptable for extensive applications or safety-critical systems.

Reset differentiation (cold/warm start)

A frequent system requirement is the automatic resumption of work after a disturbance or disruption. It can be useful to record the state of the system at shutdown and to save the data in non-volatile memory. At startup, the system can evaluate whether it is restarting due to a disturbance or failure (warm start), and the system status can be restored or an error can be indicated. In case of a cold start, the saved data in the memory can be considered valid.

External current consumption measurement

When interference is detected by the software, the microcontroller can enter a safe state while waiting for the disturbance to pass. During this safe state, no critical operations are allowed. The graphic presents how interference detection can be performed. This technique can easily be used with any microcontroller that features an A/D converter.

Watchdog

Watchdog timers significantly improve the reliability of a microcontroller in an electromagnetically influenced environment. They are often integrated on the chip. A watchdog timer is an independent parallel component which detects software errors and hardware errors reliably.

The software informs the watchdog at regular intervals that it is still working properly. If the watchdog is not informed, it means that the software is not working as specified any more. Then the watchdog resets the system to a defined state. During the reset, the device is not able to process data and does not react to calls.

As the strategy for resetting the watchdog timer is very important, two requirements have to be met:

- The watchdog may only be reset if all routines work properly.

- The reset must be executed as quickly as possible.

Simply activating the watchdog and resetting the timer regularly does not make optimal use of it. For best results, the refresh cycle of the timer must be set as short as possible and the refresh must be called from the main function, so that a reset can be performed before damage is caused or further errors occur. If a microcontroller does not have an internal watchdog, similar functionality can be implemented using a timer interrupt or an external device.
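One way to satisfy the first requirement is to let every routine report in and to refresh the watchdog from the main loop only when all of them have done so. The sketch below models this; the task names, `task_checkin`, `wdt_service`, and `wdt_kick` are hypothetical, and `wdt_kick` merely counts refreshes where real firmware would write to the device's watchdog refresh register.

```c
#include <stdbool.h>
#include <stdint.h>

/* One bit per routine that must prove it ran in the current cycle. */
enum {
    TASK_SENSOR  = 1u << 0,
    TASK_CONTROL = 1u << 1,
    TASK_COMMS   = 1u << 2,
    ALL_TASKS    = TASK_SENSOR | TASK_CONTROL | TASK_COMMS
};

static uint8_t alive_flags = 0;
static unsigned wdt_kicks = 0;    /* stand-in for the hardware watchdog */

/* Hypothetical refresh; on real hardware this is device-specific. */
static void wdt_kick(void)
{
    wdt_kicks++;
}

/* Each routine calls this once it has completed successfully. */
static void task_checkin(uint8_t task)
{
    alive_flags |= task;
}

/* Called from the main loop: refresh only if every routine checked in. */
static bool wdt_service(void)
{
    if (alive_flags != ALL_TASKS) {
        return false;         /* something is stuck: let the watchdog expire */
    }
    alive_flags = 0;          /* start a new monitoring cycle */
    wdt_kick();
    return true;
}
```

If any routine hangs or is skipped because of a disturbed program flow, its flag stays clear, the refresh is withheld, and the watchdog resets the system.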

Brown-out

A brown-out circuit monitors the VCC level during operation by comparing it to a fixed trigger level. When VCC drops below the trigger level, the brown-out reset is immediately activated. When VCC rises again, the MCU is restarted after a certain delay.

See also

- EMC Aware Programming

- Emission Aware Programming

- Electromagnetic compatibility

- List of EMC directives

- fault-tolerant computer system