Checksum

A checksum is a datum computed from an arbitrary block of digital data for the purpose of detecting accidental errors that may have been introduced during its transmission or storage. The integrity of the data can be checked at any later time by recomputing the checksum and comparing it with the stored one. If the checksums match, the data were almost certainly not altered by accident. A plain checksum does not, however, guard against intentional alteration, since anyone who changes the data deliberately can also recompute the checksum.
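As a rough illustration of the recompute-and-compare check, the sketch below uses CRC-32 from Python's standard `zlib` module as a stand-in for the checksum function. The helper names `checksum_of` and `verify` are hypothetical and chosen only for this example.

```python
import zlib


def checksum_of(data: bytes) -> int:
    """Compute a checksum for the data (CRC-32 is an assumption of this sketch)."""
    return zlib.crc32(data)


def verify(data: bytes, stored_checksum: int) -> bool:
    """Recompute the checksum and compare it with the stored one."""
    return checksum_of(data) == stored_checksum


payload = b"hello, world"
stored = checksum_of(payload)        # kept alongside the data

print(verify(payload, stored))       # True: the data is unchanged

corrupted = b"hellp, world"          # one character altered by accident
print(verify(corrupted, stored))     # False: the mismatch reveals the error
```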

The procedure

Algorithm

In mathematics and computer science, an algorithm is an effective method expressed as a finite list of well-defined instructions for calculating a function. Algorithms are used for calculation, data processing, and automated reasoning...

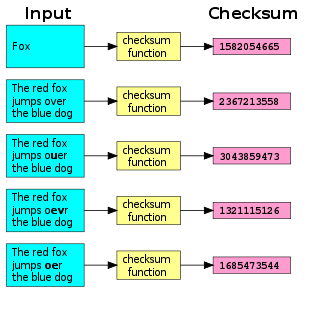

that yields the checksum from the data is called a checksum function or checksum algorithm. A good checksum algorithm will yield a different result with high probability when the data is accidentally corrupted; if the checksums match, the data is very likely to be free of accidental errors.

Checksum functions are related to hash functions, fingerprints, randomization functions, and cryptographic hash functions. However, each of those concepts has different applications and therefore different design goals. Check digits and parity bits are special cases of checksums, appropriate for small blocks of data (such as Social Security numbers, bank account numbers, computer words, single bytes, etc.). Some error-correcting codes are based on special checksums that not only detect common errors but also allow the original data to be recovered in certain cases.

Parity byte or parity word

The simplest checksum algorithm is the so-called longitudinal parity check, which breaks the data into "words" with a fixed number n of bits, and then computes the exclusive or of all those words. The result is appended to the message as an extra word. To check the integrity of a message, the receiver computes the exclusive or of all its words, including the checksum; if the result is not a word with n zeros, the receiver knows that a transmission error occurred.

With this checksum, any transmission error that flips a single bit of the message, or an odd number of bits, will be detected as an incorrect checksum. However, an error that affects two bits will not be detected if those bits lie at the same position in two distinct words. If the affected bits are independently chosen at random, the probability of a two-bit error being undetected is 1/n.
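The behaviour described above can be sketched in a few lines of Python. This is a minimal illustration assuming 8-bit words; the function names `parity_word` and `is_valid` and the sample message are made up for the example.

```python
from functools import reduce


def parity_word(words):
    """XOR all words together to obtain the longitudinal parity word."""
    return reduce(lambda acc, w: acc ^ w, words, 0)


def is_valid(words_with_checksum):
    """Accept the message when the XOR of all words, checksum included, is zero."""
    return parity_word(words_with_checksum) == 0


message = [0b10110010, 0b01101100, 0b11110000]   # three 8-bit words (sample data)
sent = message + [parity_word(message)]          # checksum appended as an extra word
print(is_valid(sent))                            # True: nothing was changed

single_flip = sent.copy()
single_flip[0] ^= 0b00000100                     # flip one bit
print(is_valid(single_flip))                     # False: the error is detected

double_flip = sent.copy()
double_flip[0] ^= 0b00000100                     # flip the same bit position...
double_flip[1] ^= 0b00000100                     # ...in two distinct words
print(is_valid(double_flip))                     # True: the error goes undetected
```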

Modular sum

A variant of the previous algorithm is to add all the "words" as unsigned binary numbers, discarding any overflow bits, and append the two's complement of the total as the checksum. To validate a message, the receiver adds all the words in the same manner, including the checksum; if the result is not a word full of zeros, an error must have occurred. This variant too detects any single-bit error, but the probability that a two-bit error will go undetected is a little less than 1/n.
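A minimal Python sketch of the modular sum follows, assuming 8-bit words. The constant `WORD_BITS`, the function names, and the sample words are illustrative choices, not part of any standard.

```python
WORD_BITS = 8                      # word size n (assumed for this sketch)
MASK = (1 << WORD_BITS) - 1        # keeps only the low n bits


def modular_sum_checksum(words):
    """Add the words, discard overflow bits, and return the two's complement."""
    total = sum(words) & MASK
    return (-total) & MASK


def is_valid(words_with_checksum):
    """The sum of all words, checksum included, must be a word full of zeros."""
    return (sum(words_with_checksum) & MASK) == 0


message = [0x25, 0x62, 0x3F, 0x52]           # sample data
checksum = modular_sum_checksum(message)
print(hex(checksum))                         # 0xe8

sent = message + [checksum]
print(is_valid(sent))                        # True

damaged = sent.copy()
damaged[2] ^= 0x10                           # flip a single bit
print(is_valid(damaged))                     # False
```

Here the words add up to 0x118; dropping the overflow bit leaves 0x18, whose two's complement in 8 bits is 0xE8.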

Position-dependent checksums

The simple checksums described above fail to detect some common errors that affect many bits at once, such as changing the order of data words, or inserting or deleting words with all bits set to zero. The checksum algorithms that are most used in practice, such as Fletcher's checksum, Adler-32, and cyclic redundancy checks (CRCs), address these weaknesses by considering not only the value of each word but also its position in the sequence. This feature generally increases the cost of computing the checksum.
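To make the difference concrete, the sketch below compares a plain byte sum with Fletcher-16 on two inputs that contain the same bytes in a different order. The Fletcher-16 code follows the usual textbook formulation (two running sums modulo 255), which the text above does not spell out, so treat it as an assumption of this example rather than a reference implementation.

```python
def simple_sum(data: bytes) -> int:
    """Position-independent checksum: sum of all bytes modulo 256."""
    return sum(data) % 256


def fletcher16(data: bytes) -> int:
    """Fletcher-16 in its common textbook form (two running sums modulo 255)."""
    sum1 = 0
    sum2 = 0
    for byte in data:
        sum1 = (sum1 + byte) % 255     # depends on the byte values only
        sum2 = (sum2 + sum1) % 255     # also depends on where each byte sits
    return (sum2 << 8) | sum1


original = b"abcde"
reordered = b"abced"                   # last two bytes swapped

print(simple_sum(original) == simple_sum(reordered))    # True: swap goes unnoticed
print(fletcher16(original) == fletcher16(reordered))    # False: swap is detected
print(hex(fletcher16(original)))                        # 0xc8f0
```

The second running sum is what makes the result depend on position, and it is also the extra work that raises the cost relative to a plain sum.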

General considerations

A message that is m bits long can be viewed as a corner of the m-dimensional hypercube. The effect of a checksum algorithm that yields an n-bit checksum is to map each m-bit message to a corner of a larger hypercube, with dimension m+n. The 2^(m+n) corners of this hypercube represent all possible received messages. The valid received messages (those that have the correct checksum) comprise a smaller set, with only 2^m corners.

A single-bit transmission error then corresponds to a displacement from a valid corner (the correct message and checksum) to one of the m+n adjacent corners. An error that affects k bits moves the message to a corner that is k steps removed from its correct corner. The goal of a good checksum algorithm is to spread the valid corners as far from each other as possible, so as to increase the likelihood that "typical" transmission errors will end up in an invalid corner.
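The hypercube picture can be explored numerically for a very small case. The sketch below assumes 4-bit messages (m = 4) protected by a single even-parity bit (n = 1), enumerates the valid corners, and measures how many steps separate the two closest ones. All names are illustrative.

```python
from itertools import combinations

M = 4                                   # message length in bits (assumed)


def parity_bit(message: int) -> int:
    """1-bit checksum: 1 if the message has an odd number of set bits."""
    return bin(message).count("1") % 2


def codeword(message: int) -> int:
    """Message followed by its checksum, i.e. a corner of the (m+n)-cube."""
    return (message << 1) | parity_bit(message)


def hamming_distance(a: int, b: int) -> int:
    """Number of steps along hypercube edges between two corners."""
    return bin(a ^ b).count("1")


valid = [codeword(msg) for msg in range(2 ** M)]
min_distance = min(hamming_distance(a, b) for a, b in combinations(valid, 2))

print(len(valid), "valid corners out of", 2 ** (M + 1))   # 16 valid corners out of 32
print(min_distance)                                       # 2
```

A minimum distance of 2 means every single-bit error lands on an invalid corner, while some two-bit errors move the message straight to another valid corner.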

Checksum tools

- cksum, a Unix command that generates both a 32-bit CRC and a byte count for any given input file.

- Bitser, a free Microsoft Windows application that calculates MD5, SHA-1 and SHA-256 sums for any given input file.

- md5sum, a Unix command that generates an MD5 sum.

- jdigest, a Java GUI tool that generates and checks MD5 and SHA sums.

- Jacksum, a Java API, usable through both a GUI and a CLI, which incorporates many checksum implementations and can be extended with more.

- jcksum, a Java library that developers can use in Java applications to calculate checksums using different algorithms.

See also

- Check digit

- File verification

- Hamming code

- List of hash functions

- Luhn algorithm

- Verhoeff algorithm

- Parity bit

- Frame check sequence

- Bit rot

- ZFS, a file system that performs automatic file integrity checking using checksums