Local search (constraint satisfaction)

In constraint satisfaction, local search is an incomplete method for finding a solution to a problem. It is based on iteratively improving an assignment of the variables until all constraints are satisfied. In particular, local search algorithms typically modify the value of a variable in an assignment at each step. The new assignment is close to the previous one in the space of assignments, hence the name local search.

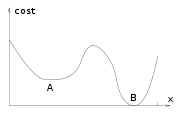

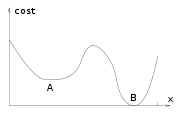

All local search algorithms use a function that evaluates the quality of an assignment, for example the number of constraints violated by the assignment. This amount is called the cost of the assignment. The aim of local search is to find an assignment of minimal cost, which is a solution if one exists.
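As a minimal sketch of such a cost function, the snippet below counts violated constraints. The representation is an assumption made for illustration: a constraint is a pair of a variable scope and a predicate, and the names `cost` and `constraints` as well as the toy problem are hypothetical.

```python
from typing import Callable, Dict, List, Tuple

Assignment = Dict[str, int]
# Assumed representation: (scope, predicate); the predicate receives the
# values of the scope variables and returns True when the constraint holds.
Constraint = Tuple[Tuple[str, ...], Callable[..., bool]]


def cost(assignment: Assignment, constraints: List[Constraint]) -> int:
    """Return the number of constraints violated by a complete assignment."""
    return sum(
        1
        for scope, holds in constraints
        if not holds(*(assignment[v] for v in scope))
    )


# Hypothetical toy problem: three variables that must be pairwise different.
constraints: List[Constraint] = [
    (("x", "y"), lambda a, b: a != b),
    (("y", "z"), lambda a, b: a != b),
    (("x", "z"), lambda a, b: a != b),
]

print(cost({"x": 0, "y": 0, "z": 1}, constraints))  # only x != y is violated
```

An assignment is a solution exactly when this function returns 0.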

Two classes of local search algorithms exist. The first is that of greedy or non-randomized algorithms. These algorithms change the current assignment by always trying to decrease (or at least not increase) its cost. Their main problem is the possible presence of plateaus, which are regions of the space of assignments where no local move decreases the cost. The second class of local search algorithms was invented to solve this problem. These algorithms escape plateaus by making random moves, and are called randomized local search algorithms.

Hill climbing

The most basic form of local search is based on choosing the change that maximally decreases the cost of the solution. This method, called hill climbing, proceeds as follows: first, a random assignment is chosen; then, a value is changed so as to maximally improve the quality of the resulting assignment. If no solution has been found after a given number of changes, a new random assignment is selected. Hill climbing algorithms can only escape a plateau by making changes that do not change the quality of the assignment. As a result, they can get stuck in a plateau where the quality of the assignment has a local maximum.

GSAT (greedy SAT) was the first local search algorithm for satisfiability, and is a form of hill climbing.
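The hill climbing procedure described above might be sketched as follows. This is an illustration under assumptions, not a reference implementation: the constraint representation, the function names, the parameters `max_tries` and `max_steps`, and the toy problem are all hypothetical. Sideways moves (same cost) are allowed, and a restart happens when every neighbour is strictly worse.

```python
import random


def cost(assignment, constraints):
    """Number of violated constraints (assumed cost function)."""
    return sum(1 for scope, holds in constraints
               if not holds(*(assignment[v] for v in scope)))


def hill_climbing(domains, constraints, max_tries=10, max_steps=100, rng=None):
    rng = rng or random.Random()
    for _ in range(max_tries):
        # Start each try from a fresh random assignment.
        current = {v: rng.choice(dom) for v, dom in domains.items()}
        for _ in range(max_steps):
            current_cost = cost(current, constraints)
            if current_cost == 0:
                return current
            # Evaluate every change of a single variable to another value.
            moves = [(cost({**current, var: val}, constraints), var, val)
                     for var, dom in domains.items()
                     for val in dom if val != current[var]]
            if not moves:
                break
            best = min(m[0] for m in moves)
            if best > current_cost:
                break  # strict local minimum of the cost: restart
            _, var, val = rng.choice([m for m in moves if m[0] == best])
            current[var] = val
        if cost(current, constraints) == 0:
            return current
    return None  # incomplete method: failure does not prove unsatisfiability


# Hypothetical toy problem: colour a triangle with three colours.
domains = {v: [0, 1, 2] for v in "xyz"}
constraints = [(("x", "y"), lambda a, b: a != b),
               (("y", "z"), lambda a, b: a != b),
               (("x", "z"), lambda a, b: a != b)]
print(hill_climbing(domains, constraints, rng=random.Random(0)))
```

On this toy problem every non-solution has an improving neighbour, so the search never needs a restart; harder instances are where plateaus and restarts matter.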

Constraint weighting or breakout method

A method for escaping from a local minimum is to use a weighted sum of violated constraints as the measure of cost, and to change some weights when no improving move is available. More precisely, if no change reduces the cost of the assignment, the algorithm increases the weight of the constraints violated by the current assignment.

This way, every move that would not otherwise change the cost of the solution decreases it. Moreover, the weight of constraints that remain violated for a large number of moves keeps increasing. Therefore, as long as a constraint stays violated, the benefit of moving to an assignment that satisfies it keeps increasing.
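A minimal sketch of this weighting scheme is given below. It assumes unit initial weights and an increment of 1 per breakout step; these choices, the function name `breakout`, and the toy problems are illustrative assumptions rather than details taken from the text.

```python
import random


def breakout(domains, constraints, max_steps=1000, rng=None):
    rng = rng or random.Random()
    weights = [1] * len(constraints)  # assumed: every constraint starts at 1
    current = {v: rng.choice(dom) for v, dom in domains.items()}

    def violated(a):
        return [i for i, (scope, holds) in enumerate(constraints)
                if not holds(*(a[v] for v in scope))]

    def weighted_cost(a):
        return sum(weights[i] for i in violated(a))

    for _ in range(max_steps):
        broken = violated(current)
        if not broken:
            return current
        here = weighted_cost(current)
        moves = [(weighted_cost({**current, var: val}), var, val)
                 for var, dom in domains.items()
                 for val in dom if val != current[var]]
        improving = [m for m in moves if m[0] < here]
        if improving:
            best = min(m[0] for m in improving)
            _, var, val = rng.choice([m for m in improving if m[0] == best])
            current[var] = val
        else:
            # No improving move: raise the weight of each violated constraint.
            for i in broken:
                weights[i] += 1
    return None if violated(current) else current


# Hypothetical toy problems: a triangle with three colours (satisfiable)
# and the same triangle with two colours (unsatisfiable).
constraints = [(("x", "y"), lambda a, b: a != b),
               (("y", "z"), lambda a, b: a != b),
               (("x", "z"), lambda a, b: a != b)]
three_colours = {v: [0, 1, 2] for v in "xyz"}
two_colours = {v: [0, 1] for v in "xyz"}
print(breakout(three_colours, constraints, rng=random.Random(0)))
```

Because the method is incomplete, the unsatisfiable instance simply exhausts `max_steps` and yields `None`.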

Tabu search

A drawback of hill climbing with moves that do not decrease cost is that it may cycle over assignments of the same cost. Tabu search overcomes this problem by maintaining a list of "forbidden" assignments, called the tabu list. In particular, the tabu list typically contains only the most recent changes. More precisely, it contains the most recent variable/value pairs such that the variable has recently been assigned the value.

This list is updated every time the assignment is changed. If a variable is assigned a value, the variable/value pair is added to the list, and the oldest pair is removed from it. This way, the list only contains the most recent assignments to a variable. If a variable/value pair is in the tabu list, changing the current assignment by setting the variable to the value is forbidden. The algorithm can only choose the best move among the ones that are not forbidden. This way, it cannot cycle over the same solution unless the number of moves in the cycle is larger than the length of the tabu list.
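The tabu list described above can be sketched with a bounded queue. In this illustration, `collections.deque` with `maxlen` drops the oldest pair automatically; the function name `tabu_search`, the parameter `tabu_size`, and the toy problem are assumptions.

```python
import random
from collections import deque


def cost(assignment, constraints):
    """Number of violated constraints (assumed cost function)."""
    return sum(1 for scope, holds in constraints
               if not holds(*(assignment[v] for v in scope)))


def tabu_search(domains, constraints, tabu_size=5, max_steps=1000, rng=None):
    rng = rng or random.Random()
    current = {v: rng.choice(dom) for v, dom in domains.items()}
    tabu = deque(maxlen=tabu_size)  # most recent (variable, value) pairs
    for _ in range(max_steps):
        if cost(current, constraints) == 0:
            return current
        # Candidate moves: single-variable changes that are not forbidden.
        moves = [(cost({**current, var: val}, constraints), var, val)
                 for var, dom in domains.items()
                 for val in dom
                 if val != current[var] and (var, val) not in tabu]
        if not moves:
            return None  # every move is forbidden
        # Take the best allowed move, even if it does not decrease the cost.
        best = min(m[0] for m in moves)
        _, var, val = rng.choice([m for m in moves if m[0] == best])
        current[var] = val
        tabu.append((var, val))
    return current if cost(current, constraints) == 0 else None


# Hypothetical toy problem: colour a triangle with three colours.
domains = {v: [0, 1, 2] for v in "xyz"}
constraints = [(("x", "y"), lambda a, b: a != b),
               (("y", "z"), lambda a, b: a != b),
               (("x", "z"), lambda a, b: a != b)]
print(tabu_search(domains, constraints, tabu_size=2, rng=random.Random(0)))
```

The length of the list is the main tuning knob: a longer list forbids more moves and rules out longer cycles, at the price of a more restricted neighbourhood.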

Random walk

A random walk algorithm sometimes moves like a greedy algorithm but sometimes moves randomly. It depends on a parameter $p$, which is a real number between 0 and 1. At every move, with probability $p$ the algorithm proceeds like a greedy algorithm, trying to maximally decrease the cost of the assignment. With probability $1-p$, however, the solution is changed in some other way, which involves some degree of randomness.

WalkSAT

The random move of WalkSAT changes the value of a random variable of a random violated constraint. For propositional satisfiability of conjunctive normal form formulae, which is the original setting of this algorithm, every such move changes the value of the variable from true to false or vice versa, and makes the violated constraint satisfied. As for all random walk strategies, a random move is only done with a given probability, and a move maximally decreasing the cost is done otherwise.
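The following sketch illustrates a random walk of this kind on a CNF formula. It assumes clauses encoded as lists of signed integers (a positive literal `v` means variable `v` is true, `-v` means it is false) and takes the greedy move with probability `p` and the random move otherwise. The function names and the encoding are assumptions, and published WalkSAT variants differ in detail.

```python
import random


def num_unsat(clauses, model):
    """Number of clauses with no true literal under the model."""
    return sum(1 for clause in clauses
               if not any(model[abs(lit)] == (lit > 0) for lit in clause))


def walksat(clauses, n_vars, p=0.5, max_flips=10_000, rng=None):
    rng = rng or random.Random()
    model = {v: rng.choice([True, False]) for v in range(1, n_vars + 1)}
    for _ in range(max_flips):
        unsat = [clause for clause in clauses
                 if not any(model[abs(lit)] == (lit > 0) for lit in clause)]
        if not unsat:
            return model
        if rng.random() < p:
            # Greedy move: flip a variable that minimises the resulting cost.
            costs = {}
            for v in list(model):
                model[v] = not model[v]
                costs[v] = num_unsat(clauses, model)
                model[v] = not model[v]
            best = min(costs.values())
            var = rng.choice([v for v, c in costs.items() if c == best])
        else:
            # Random move: a random variable of a random violated clause.
            var = abs(rng.choice(rng.choice(unsat)))
        model[var] = not model[var]
    return model if num_unsat(clauses, model) == 0 else None


# Hypothetical formula: (x1 or x2) and (not x1 or x2) and (x1 or not x2).
clauses = [[1, 2], [-1, 2], [1, -2]]
print(walksat(clauses, n_vars=2, rng=random.Random(0)))
```

Flipping a variable taken from a violated clause always satisfies that clause, since each of its literals is currently false.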

Simulated annealing

The technique of simulated annealing is based on changing the probability of doing a random move rather than one that maximally decreases the cost. In particular, the name originates from the strategy of decreasing the probability of doing random moves during the execution of the algorithm, thus virtually "freezing" the space of search.

In particular, if the improvement of cost of a move is negative (the move increases cost), this move is done with probability $e^{\mathrm{improvement}/T}$, where $T$ is a real number. Since the probability of doing this move increases with $T$, this parameter is called the temperature. Simulated annealing decreases this temperature over time, thus allowing more random moves at the beginning and fewer as time goes on.
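A minimal sketch of this acceptance rule is shown below, assuming the standard Metropolis form in which a worsening move with improvement $\Delta < 0$ is accepted with probability $e^{\Delta/T}$. The geometric cooling schedule, the parameters `t0`, `alpha` and `t_min`, the random choice of candidate moves, and all function names are assumptions made for illustration.

```python
import math
import random


def cost(assignment, constraints):
    """Number of violated constraints (assumed cost function)."""
    return sum(1 for scope, holds in constraints
               if not holds(*(assignment[v] for v in scope)))


def accept_probability(improvement, temperature):
    """Probability of taking a move; worsening moves get exp(improvement / T)."""
    if improvement >= 0:
        return 1.0
    return math.exp(improvement / temperature)


def simulated_annealing(domains, constraints, t0=2.0, alpha=0.99,
                        t_min=1e-9, max_steps=5000, rng=None):
    rng = rng or random.Random()
    current = {v: rng.choice(dom) for v, dom in domains.items()}
    current_cost = cost(current, constraints)
    temperature = t0
    variables = list(domains)
    for _ in range(max_steps):
        if current_cost == 0:
            return current
        # Propose a random single-variable change.
        var = rng.choice(variables)
        candidate = {**current, var: rng.choice(domains[var])}
        candidate_cost = cost(candidate, constraints)
        improvement = current_cost - candidate_cost
        if rng.random() < accept_probability(improvement, temperature):
            current, current_cost = candidate, candidate_cost
        # Assumed geometric cooling, bounded below to avoid division by zero.
        temperature = max(temperature * alpha, t_min)
    return current if current_cost == 0 else None


# Hypothetical toy problem: colour a triangle with three colours.
domains = {v: [0, 1, 2] for v in "xyz"}
constraints = [(("x", "y"), lambda a, b: a != b),
               (("y", "z"), lambda a, b: a != b),
               (("x", "z"), lambda a, b: a != b)]
print(simulated_annealing(domains, constraints, rng=random.Random(0)))
```

At high temperature almost every worsening move is accepted; as the temperature falls the search behaves more and more like a greedy one.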

Local search on a cycle cutset

Local search usually works on all variables, improving a complete assignment to them. However, local search can also be run on a subset of the variables, using some other mechanism for the remaining ones. One proposed algorithm works on a cycle cutset, which is a set of variables that, if removed from the problem, makes it acyclic.

For any assignment of the variables of the cutset, the remaining problem has a forest as its primal graph. As a result, it can be solved efficiently. In order to guide local search, an algorithm detecting the minimal number of constraints that can be violated is used in place of a satisfiability algorithm on the forest part of the problem.

This minimal number is found by determining the cost of each variable assignment. This cost is the minimal number of constraints violated by an assignment of the variables in the subtree rooted at the variable, when the variable takes the given value. This cost can be calculated as follows. If $\mathrm{cost}(x=a)$ denotes the cost of the assignment $x=a$ and $y_1,\ldots,y_n$ are the children of $x$, the following formula holds. In this formula, $\mathrm{violates}(x=a,y_i=b)$ is 0 or 1 depending on whether the assignment $x=a,y_i=b$ violates the constraint between $x$ and $y_i$.

$$\mathrm{cost}(x=a) = \sum_{i=1}^{n} \min_{b} \left( \mathrm{cost}(y_i=b) + \mathrm{violates}(x=a,y_i=b) \right)$$

The cost for variables in the cutset is zero, and these variables are assumed to be allowed to take only their given value. With these assumptions, the above formula allows computing the cost of all variable evaluations by iteratively proceeding bottom-up from the leaves to the root(s) of the forest.
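The bottom-up computation can be sketched as a recursion over one tree of the forest: the cost of giving a variable a value is the sum, over its children, of the cheapest child value, counting one extra unit when the pair violates the constraint on the edge. The data layout (`children`, `domains`, a `violates` function returning 0 or 1) and all names are assumptions for illustration. A cutset variable would appear as a leaf whose domain contains only its given value.

```python
def subtree_costs(root, children, domains, violates):
    """Return costs[x][a]: minimal number of violated constraints in the
    subtree rooted at x when x takes value a."""
    costs = {}

    def visit(x):
        kids = children.get(x, [])
        for y in kids:
            visit(y)  # children first: leaves are processed before parents
        costs[x] = {
            a: sum(min(costs[y][b] + violates(x, a, y, b) for b in domains[y])
                   for y in kids)
            for a in domains[x]
        }

    visit(root)
    return costs


# Hypothetical tree: x has children y and z; z has child w.
children = {"x": ["y", "z"], "z": ["w"]}
# y and w have singleton domains, as a variable with a fixed value would.
domains = {"x": [0, 1], "y": [0], "z": [0, 1], "w": [1]}


def violates(parent, a, child, b):
    """Assumed constraint on every edge: the two values must differ."""
    return int(a == b)


costs = subtree_costs("x", children, domains, violates)
print(costs["x"])  # {0: 2, 1: 0}
```

Here setting `x` to 1 leaves no violated constraint in the tree, while setting it to 0 forces two violations, so a local search over the remaining variables can read the value of an assignment directly from the root costs.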

The cost of variable evaluations can be used by local search for computing the cost of a solution. The cost of the values of the roots of the forest is indeed the minimal number of violated constraints in the forest for these given values. These costs can therefore be used to evaluate the cost of the assignment to the cutset variables and to estimate the cost of similar assignments to the cutset variables.