Wave–particle duality


Wave–particle duality postulates that all particles exhibit both wave and particle properties. A central concept of quantum mechanics, this duality addresses the inability of classical concepts like "particle" and "wave" to fully describe the behavior of quantum-scale objects. Standard interpretations of quantum mechanics explain this paradox as a fundamental property of the Universe, while alternative interpretations explain the duality as an emergent, second-order consequence of various limitations of the observer. This treatment focuses on explaining the behavior from the perspective of the widely used Copenhagen interpretation, in which wave–particle duality is one aspect of the concept of complementarity: that a phenomenon can be viewed in one way or in another, but not both simultaneously.

The idea of duality originated in a debate over the nature of light and matter that dates back to the 17th century, when competing theories of light were proposed by Christiaan Huygens and Isaac Newton: light was thought either to consist of waves (Huygens) or of particles (Newton). Through the work of Max Planck, Albert Einstein, Louis de Broglie, Arthur Compton, Niels Bohr, and many others, current scientific theory holds that all particles also have a wave nature (and vice versa). This phenomenon has been verified not only for elementary particles, but also for compound particles like atoms and even molecules. For macroscopic particles, because of their extremely small wavelengths, wave properties usually cannot be detected.

Aristotle was one of the first to publicly hypothesize about the nature of light, proposing that light is a disturbance in the element air (that is, a wave-like phenomenon). On the other hand, Democritus, the original atomist, argued that all things in the universe, including light, are composed of indivisible sub-components (light being some form of solar atom). At the beginning of the 11th century, the Arabic scientist Alhazen wrote the first comprehensive treatise on optics, describing refraction, reflection, and the operation of a pinhole lens via rays of light traveling from the point of emission to the eye. He asserted that these rays were composed of particles of light. In 1630, René Descartes popularized and accredited in the West the opposing wave description in his treatise on light, showing that the behavior of light could be re-created by modeling wave-like disturbances in his universal medium ("plenum"). Beginning in 1670 and progressing over three decades, Isaac Newton developed and championed his corpuscular hypothesis, arguing that the perfectly straight lines of reflection demonstrated light's particle nature; only particles could travel in such straight lines. He explained refraction by positing that particles of light accelerated laterally upon entering a denser medium. Around the same time, Newton's contemporaries Robert Hooke and Christiaan Huygens, and later Augustin-Jean Fresnel, mathematically refined the wave viewpoint, showing that if light traveled at different speeds in different media (such as water and air), refraction could be easily explained as the medium-dependent propagation of light waves. The resulting Huygens–Fresnel principle was extremely successful at reproducing light's behavior and, subsequently supported by Thomas Young's discovery of double-slit interference, was the beginning of the end for the particle light camp.

The final blow against corpuscular theory came when James Clerk Maxwell discovered that he could combine four simple equations, which had been previously discovered, along with a slight modification, to describe self-propagating waves of oscillating electric and magnetic fields. When the propagation speed of these electromagnetic waves was calculated, the speed of light fell out. It quickly became apparent that visible light, ultraviolet light, and infrared light (phenomena thought previously to be unrelated) were all electromagnetic waves of differing frequency. The wave theory had prevailed – or at least it seemed to.

While the 19th century had seen the success of the wave theory at describing light, it had also witnessed the rise of the atomic theory at describing matter. In 1789, Antoine Lavoisier securely differentiated chemistry from alchemy by introducing rigor and precision into his laboratory techniques, allowing him to deduce the conservation of mass and categorize many new chemical elements and compounds. However, the nature of these essential chemical elements remained unknown. In 1799, Joseph Louis Proust advanced chemistry towards the atom by showing that elements combined in definite proportions. This led John Dalton to resurrect Democritus' atom in 1803, when he proposed that elements were made of indivisible sub-components, which explained why the varying oxides of metals (e.g. stannous oxide and cassiterite, SnO and SnO2 respectively) possess a 1:2 ratio of oxygen to one another. But Dalton and other chemists of the time had not considered that some elements occur in monatomic form (like helium) and others in diatomic form (like hydrogen), or that water was H2O, not the simpler and more intuitive HO; thus the atomic weights presented at the time were varied and often incorrect. Additionally, the formation of H2O by two parts of hydrogen gas and one part of oxygen gas would require an atom of oxygen to split in half (or two half-atoms of hydrogen to come together). This problem was solved by Amedeo Avogadro, who studied the reacting volumes of gases as they formed liquids and solids. By postulating that equal volumes of elemental gas contain an equal number of atoms, he was able to show that H2O was formed from two parts H2 and one part O2. By discovering diatomic gases, Avogadro completed the basic atomic theory, allowing the correct molecular formulae of most known compounds, as well as the correct weights of atoms, to be deduced and categorized in a consistent manner. The final stroke in classical atomic theory came when Dmitri Mendeleev saw an order in recurring chemical properties, and created a table presenting the elements in unprecedented order and symmetry. But there were holes in Mendeleev's table, with no element to fill them in. His critics initially cited this as a fatal flaw, but were silenced when new elements were discovered that perfectly fit into these holes. The success of the periodic table effectively converted any remaining opposition to atomic theory; even though no single atom had ever been observed in the laboratory, chemistry was now an atomic science.

That electricity consists of particles, the electrons, was first demonstrated by J. J. Thomson in 1897 when, using a cathode ray tube, he found that an electrical charge would travel across a vacuum (which would possess infinite resistance in classical theory). Since the vacuum offered no medium for an electric fluid to travel, this discovery could only be explained via a particle carrying a negative charge and moving through the vacuum. This electron flew in the face of classical electrodynamics, which had successfully treated electricity as a fluid for many years (leading to the invention of batteries, electric motors, dynamos, and arc lamps). More importantly, the intimate relation between electric charge and electromagnetism had been well documented following the discoveries of Michael Faraday and Clerk Maxwell. Since electromagnetism was known to be a wave generated by a changing electric or magnetic field (a continuous, wave-like entity itself), an atomic/particle description of electricity and charge was a non sequitur. And classical electrodynamics was not the only classical theory rendered incomplete.

The equipartition theorem of classical mechanics, the basis of all classical thermodynamic theories, stated that an object's energy is partitioned equally among the object's vibrational modes. This worked well when describing thermal objects, whose vibrational modes were defined as the speeds of their constituent atoms, and the speed distribution derived from egalitarian partitioning of these vibrational modes closely matched experimental results. Speeds much higher than the average speed were suppressed by the fact that kinetic energy is quadratic (doubling the speed requires four times the energy); thus the number of atoms occupying high energy modes (high speeds) quickly drops off because the constant, equal partition can excite successively fewer atoms. Low speed modes would ostensibly dominate the distribution, since low speed modes would require ever less energy, and prima facie a zero-speed mode would require zero energy and its energy partition would contain an infinite number of atoms. But this would only occur in the absence of atomic interaction; when collisions are allowed, the low speed modes are immediately suppressed by jostling from the higher energy atoms, exciting them to higher energy modes. An equilibrium is swiftly reached where most atoms occupy a speed proportional to the temperature of the object (thus defining temperature as the average kinetic energy of the object).

But applying the same reasoning to the electromagnetic emission of such a thermal object was not so successful. It had been long known that thermal objects emit light. Hot metal glows red, and upon further heating, white (this is the underlying principle of the incandescent bulb). Since light was known to be waves of electromagnetism, physicists hoped to describe this emission via classical laws. This became known as the black body problem. Since the equipartition theorem worked so well in describing the vibrational modes of the thermal object itself, it was natural to assume that it would perform equally well in describing the radiative emission of such objects. But a problem quickly arose when determining the vibrational modes of light. To simplify the problem (by limiting the vibrational modes), a longest allowable wavelength was defined by placing the thermal object in a cavity. Any electromagnetic mode at equilibrium (i.e. any standing wave) could only exist if it used the walls of the cavity as nodes. Thus there were no waves/modes with a wavelength larger than twice the length (L) of the cavity.

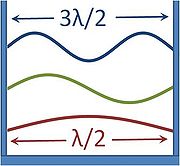

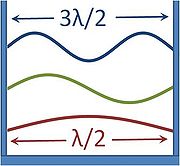

The first few allowable modes would therefore have wavelengths of 2L, L, 2L/3, L/2, etc. (each successive wavelength adding one node to the wave). However, while the wavelength could never exceed 2L, there was no such limit on decreasing the wavelength, and adding nodes to reduce the wavelength could proceed ad infinitum. Suddenly it became apparent that the short wavelength modes completely dominated the distribution, since ever shorter wavelength modes could be crammed into the cavity. If each mode received an equal partition of energy, the short wavelength modes would consume all the energy. This became clear when plotting the Rayleigh–Jeans law which, while correctly predicting the intensity of long wavelength emissions, predicted infinite total energy as the intensity diverges to infinity for short wavelengths. This became known as the ultraviolet catastrophe.

The solution arrived in 1900 when Max Planck hypothesized that the frequency of light emitted by the black body depended on the frequency of the oscillator that emitted it, and the energy of these oscillators increased linearly with frequency (according to his constant h, where E = hν). This was not an unsound proposal considering that macroscopic oscillators operate similarly: when studying five simple harmonic oscillators of equal amplitude but different frequency, the oscillator with the highest frequency possesses the highest energy (though this relationship is not linear like Planck's). By demanding that high-frequency light must be emitted by an oscillator of equal frequency, and further requiring that this oscillator occupy higher energy than one of a lesser frequency, Planck avoided any catastrophe; giving an equal partition to high-frequency oscillators produced successively fewer oscillators and less emitted light. And as in the Maxwell–Boltzmann distribution, the low-frequency, low-energy oscillators were suppressed by the onslaught of thermal jiggling from higher energy oscillators, which necessarily increased their energy and frequency.
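The contrast between equal partition and Planck's proposal can be sketched numerically for the one-dimensional cavity described above. This is only an illustration under stated assumptions: the cavity length and temperature are arbitrary example values, the function names are hypothetical, and the mean energy per mode, hν / (exp(hν/kT) − 1), is the standard result of Planck's law rather than a formula given in this article.

```python
import math

H = 6.626e-34    # Planck's constant, J*s
K_B = 1.381e-23  # Boltzmann constant, J/K
C = 2.998e8      # speed of light, m/s


def mode_wavelength(n, length):
    """Wavelength of the n-th standing wave in a 1D cavity: 2L, L, 2L/3, ..."""
    return 2.0 * length / n


def classical_energy(n_modes, temperature):
    """Equal partition: every mode receives k_B*T, so the total grows with n_modes."""
    return n_modes * K_B * temperature


def planck_mode_energy(n, length, temperature):
    """Mean energy of mode n under Planck's hypothesis (assumed standard formula)."""
    nu = C / mode_wavelength(n, length)
    x = H * nu / (K_B * temperature)
    # h*nu / (exp(x) - 1), rewritten so that large x underflows to 0 instead of overflowing
    return H * nu * math.exp(-x) / (-math.expm1(-x))


def planck_energy(n_modes, length, temperature):
    """Total energy of the first n_modes modes under Planck's hypothesis."""
    return sum(planck_mode_energy(n, length, temperature)
               for n in range(1, n_modes + 1))


if __name__ == "__main__":
    L, T = 1e-5, 1000.0  # assumed example values: 10 micrometre cavity at 1000 K
    for n_modes in (10, 100, 1000):
        print(n_modes, classical_energy(n_modes, T), planck_energy(n_modes, L, T))
```

In this sketch the classical total keeps growing as more short-wavelength modes are included, while the Planck total settles to a finite value because high-frequency modes are exponentially suppressed.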

The most revolutionary aspect of Planck's treatment of the black body is that it inherently relies on an integer number of oscillators in thermal equilibrium with the electromagnetic field. These oscillators give their entire energy to the electromagnetic field, creating a quantum of light, as often as they are excited by the electromagnetic field, absorbing a quantum of light and beginning to oscillate at the corresponding frequency. Planck had intentionally created an atomic theory of the black body, but had unintentionally generated an atomic theory of light, where the black body never generates quanta of light at a given frequency with an energy less than hν. However, once realizing that he had quantized the electromagnetic field, he denounced particles of light as a limitation of his approximation, not a property of reality.

In 1905, Albert Einstein took Planck's black body model and saw in it a wonderful solution to another outstanding problem of the day: the photoelectric effect. Ever since the discovery of electrons eight years previously, electrons had been the thing to study in physics laboratories worldwide. Nikola Tesla discovered in 1901 that when a metal was illuminated by high-frequency light (e.g. ultraviolet light), electrons were ejected from the metal at high energy. This work was based on the previous knowledge that light incident upon metals produces a current, but Tesla was the first to describe it as a particle phenomenon.

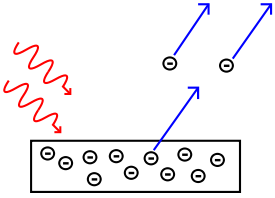

The following year, Philipp Lenard discovered that (within the range of the experimental parameters he was using) the energy of these ejected electrons did not depend on the intensity of the incoming light, but on its frequency. So if one shines a little low-frequency light upon a metal, a few low energy electrons are ejected. If one now shines a very intense beam of low-frequency light upon the same metal, a whole slew of electrons are ejected; however, they possess the same low energy, and there are merely more of them. In order to get high energy electrons, one must illuminate the metal with high-frequency light. The more light there is, the more electrons are ejected. Like blackbody radiation, this was at odds with a theory invoking continuous transfer of energy between radiation and matter. However, it can still be explained using a fully classical description of light, as long as matter is quantum mechanical in nature.

If one used Planck's energy quanta, and demanded that electromagnetic radiation at a given frequency could only transfer energy to matter in integer multiples of an energy quantum hν, then the photoelectric effect could be explained very simply. Low-frequency light only ejects low-energy electrons because each electron is excited by the absorption of a single photon. Increasing the intensity of the low-frequency light (increasing the number of photons) only increases the number of excited electrons, not their energy, because the energy of each photon remains low. Only by increasing the frequency of the light, and thus increasing the energy of the photons, can one eject electrons with higher energy. Thus, using Planck's constant h to determine the energy of the photons based upon their frequency, the energy of ejected electrons should also increase linearly with frequency, the gradient of the line being Planck's constant. These results were not confirmed until 1915, when Robert Andrews Millikan, who had previously determined the charge of the electron, produced experimental results in perfect accord with Einstein's predictions. While the energy of ejected electrons reflected Planck's constant, the existence of photons was not explicitly proven until the discovery of the photon antibunching effect, a modern version of which can be performed in undergraduate-level labs. This phenomenon could only be explained via photons, and not through any semi-classical theory (which could alternatively explain the photoelectric effect). When Einstein received his Nobel Prize in 1921, it was not for his more difficult and mathematically laborious special and general relativity, but for the simple, yet totally revolutionary, suggestion of quantized light. Einstein's "light quanta" would not be called photons until 1926, but even in 1905 they represented the quintessential example of wave–particle duality. Electromagnetic radiation propagates following linear wave equations, but can only be emitted or absorbed as discrete elements, thus acting as a wave and a particle simultaneously.

Christiaan Huygens proposed a wave theory of light, and in particular demonstrated how waves might interfere to form a wavefront, propagating in a straight line. However, the theory had difficulties in other matters, and was soon overshadowed by Isaac Newton's corpuscular theory of light. That is, Newton proposed that light consisted of small particles, with which he could easily explain the phenomenon of reflection. With considerably more difficulty, he could also explain refraction through a lens, and the splitting of sunlight into a rainbow by a prism. Newton's particle viewpoint went essentially unchallenged for over a century.

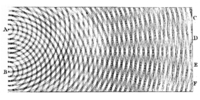

The double-slit experiments by Young and Fresnel provided evidence for Huygens' wave theories. These experiments showed that when light is sent through a grid, a characteristic interference pattern is observed, very similar to the pattern resulting from the interference of water waves; the wavelength of light can be computed from such patterns. The wave view did not immediately displace the ray and particle view, but began to dominate scientific thinking about light in the mid 19th century, since it could explain polarization phenomena that the alternatives could not.

In the late 19th century, James Clerk Maxwell explained light as the propagation of electromagnetic waves according to the Maxwell equations. These equations were verified by experiment by Heinrich Hertz in 1887, and the wave theory became widely accepted.

Max Planck published an analysis that succeeded in reproducing the observed spectrum of light emitted by a glowing object. To accomplish this, Planck had to make an ad hoc mathematical assumption of quantized energy of the oscillators (atoms of the black body) that emit radiation. It was Einstein who later proposed that it is the electromagnetic radiation itself that is quantized, and not the energy of radiating atoms.

In 1905, Albert Einstein provided an explanation of the photoelectric effect, a hitherto troubling experiment that the wave theory of light seemed incapable of explaining. He did so by postulating the existence of photons, quanta of light energy with particulate qualities.

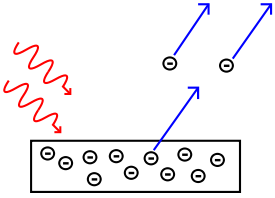

In the photoelectric effect, it was observed that shining a light on certain metals would lead to an electric current in a circuit. Presumably, the light was knocking electrons out of the metal, causing current to flow. However, using the case of potassium as an example, it was also observed that while a dim blue light was enough to cause a current, even the strongest, brightest red light available with the technology of the time caused no current at all. According to the classical theory of light and matter, the strength or amplitude of a light wave was in proportion to its brightness: a bright light should have been easily strong enough to create a large current. Yet, oddly, this was not so.

Einstein explained this conundrum by postulating that the electrons can receive energy from the electromagnetic field only in discrete portions (quanta that were called photons): an amount of energy E that was related to the frequency f of the light by

$$E = hf$$

where h is Planck's constant (6.626 × 10⁻³⁴ J·s). Only photons of a high enough frequency (above a certain threshold value) could knock an electron free. For example, photons of blue light had sufficient energy to free an electron from the metal, but photons of red light did not. More intense light above the threshold frequency could release more electrons, but no amount of light (using technology available at the time) below the threshold frequency could release an electron. To "violate" this law would require extremely high intensity lasers, which had not yet been invented. Intensity-dependent phenomena have now been studied in detail with such lasers.
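The threshold behavior described above follows directly from the relation E = hf (with f = c/λ). The sketch below works through the blue-versus-red example for potassium. The threshold energy of about 2.3 eV (usually called the work function, a term not used in this article), the two wavelengths, and the function names are illustrative assumptions, not values taken from the text.

```python
H = 6.626e-34   # Planck's constant, J*s (value quoted in the text)
C = 2.998e8     # speed of light in vacuum, m/s
EV = 1.602e-19  # joules per electronvolt

# Assumed approximate threshold energy for potassium, in eV.
POTASSIUM_THRESHOLD_EV = 2.3


def photon_energy_ev(wavelength_m):
    """Energy of one photon, E = h*f = h*c/wavelength, expressed in eV."""
    return H * C / wavelength_m / EV


def max_kinetic_energy_ev(wavelength_m, threshold_ev):
    """Maximum energy of an ejected electron, or None below the threshold.

    The result does not depend on intensity: more light means more
    photons (and more electrons), not more energy per photon.
    """
    surplus = photon_energy_ev(wavelength_m) - threshold_ev
    return surplus if surplus > 0 else None


if __name__ == "__main__":
    blue, red = 450e-9, 700e-9  # assumed representative wavelengths, in metres
    print("blue:", max_kinetic_energy_ev(blue, POTASSIUM_THRESHOLD_EV))
    print("red: ", max_kinetic_energy_ev(red, POTASSIUM_THRESHOLD_EV))
```

Under these assumptions a blue photon carries more than the threshold energy and can free an electron, while a red photon does not, however many of them arrive.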

Einstein was awarded the Nobel Prize in Physics in 1921 for his discovery of the law of the photoelectric effect.

In 1924, Louis de Broglie proposed that all matter, not just light, has a wave-like nature, relating wavelength (denoted as λ) and momentum (denoted as p):

$$\lambda = \frac{h}{p}$$

This is a generalization of Einstein's equation above, since the momentum of a photon is given by p = E/c and the wavelength (in a vacuum) by λ = c/f, where c is the speed of light in vacuum.

De Broglie's formula was confirmed three years later for electrons (which differ from photons in having a rest mass) with the observation of electron diffraction in two independent experiments. At the University of Aberdeen, George Paget Thomson passed a beam of electrons through a thin metal film and observed the predicted interference patterns. At Bell Labs, Clinton Joseph Davisson and Lester Halbert Germer guided their beam through a crystalline grid.

De Broglie was awarded the Nobel Prize for Physics in 1929 for his hypothesis. Thomson and Davisson shared the Nobel Prize for Physics in 1937 for their experimental work.

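Werner Heisenberg postulated his uncertainty principle, which states:

$$\Delta x \, \Delta p \ge \frac{\hbar}{2}$$

where Δ here indicates standard deviation, a measure of spread or uncertainty; x and p are a particle's position and momentum, and ħ is the reduced Planck constant (h/2π).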

Heisenberg originally explained this as a consequence of the process of measuring: measuring position accurately would disturb momentum and vice versa, offering an example (the "gamma-ray microscope") that depended crucially on the de Broglie hypothesis. It is now thought, however, that this only partly explains the phenomenon, and that the uncertainty also exists in the particle itself, even before the measurement is made.

In fact, the modern explanation of the uncertainty principle, extending the Copenhagen interpretation first put forward by Bohr and Heisenberg, depends even more centrally on the wave nature of a particle: just as it is nonsensical to discuss the precise location of a wave on a string, particles do not have perfectly precise positions; likewise, just as it is nonsensical to discuss the wavelength of a "pulse" wave traveling down a string, particles do not have perfectly precise momenta (which correspond to the inverse of wavelength). Moreover, when position is relatively well defined, the wave is pulse-like and has a very ill-defined wavelength (and thus momentum). Conversely, when momentum (and thus wavelength) is relatively well defined, the wave looks long and sinusoidal, and therefore it has a very ill-defined position.

De Broglie himself had proposed a pilot wave construct to explain the observed wave–particle duality. In this view, each particle has a well-defined position and momentum, but is guided by a wave function derived from Schrödinger's equation. The pilot wave theory was initially rejected because it generated non-local effects when applied to systems involving more than one particle. Non-locality, however, soon became established as an integral feature of quantum theory (see EPR paradox), and David Bohm extended de Broglie's model to explicitly include it. In the resulting representation, also called the de Broglie–Bohm theory or Bohmian mechanics, the wave–particle duality is not a property of matter itself, but an appearance generated by the particle's motion subject to a guiding equation or quantum potential.

Since the demonstrations of wave-like properties in photons and electrons, similar experiments have been conducted with neutrons and protons. Among the most famous experiments are those of Estermann and Otto Stern in 1929.

Authors of similar recent experiments with atoms and molecules, described below, claim that these larger particles also act like waves.

A dramatic series of experiments emphasizing the action of gravity in relation to wave–particle duality was conducted in the 1970s using the neutron interferometer. Neutrons, one of the components of the atomic nucleus, provide much of the mass of a nucleus and thus of ordinary matter. In the neutron interferometer, they act as quantum-mechanical waves directly subject to the force of gravity. While the results were not surprising, since gravity was known to act on everything, including light (see tests of general relativity and the Pound–Rebka falling photon experiment), the self-interference of the quantum mechanical wave of a massive fermion in a gravitational field had never been experimentally confirmed before.

In 1999, the diffraction of C60 fullerenes was reported by researchers from the University of Vienna. Fullerenes are comparatively large and massive objects, having an atomic mass of about 720 u. The de Broglie wavelength is 2.5 pm, whereas the diameter of the molecule is about 1 nm, about 400 times larger. As of 2005, this is the largest object for which quantum-mechanical wave-like properties have been directly observed in far-field diffraction.
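The figures quoted for C60 can be cross-checked with de Broglie's relation λ = h/p, taking p = mv for a slow molecule. The sketch below uses only the mass, wavelength and diameter given above and derives the beam speed they imply; the speed is therefore an inferred value, not a figure from the article, and the function names are hypothetical.

```python
H = 6.626e-34   # Planck's constant, J*s
U = 1.6605e-27  # atomic mass unit, kg


def de_broglie_wavelength(mass_kg, speed_m_s):
    """lambda = h / p, with non-relativistic momentum p = m*v."""
    return H / (mass_kg * speed_m_s)


def speed_for_wavelength(mass_kg, wavelength_m):
    """Invert lambda = h / (m*v) to find the speed giving a chosen wavelength."""
    return H / (mass_kg * wavelength_m)


if __name__ == "__main__":
    c60_mass = 720 * U  # about 720 u, as stated in the text
    wavelength = 2.5e-12  # 2.5 pm
    diameter = 1e-9       # about 1 nm

    speed = speed_for_wavelength(c60_mass, wavelength)
    print("implied molecular speed (m/s):", speed)
    print("diameter / wavelength:", diameter / wavelength)
```

The implied speed comes out at a couple of hundred metres per second, and the diameter-to-wavelength ratio reproduces the factor of about 400 quoted in the text.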

In 2003 the Vienna group also demonstrated the wave nature of tetraphenylporphyrin, a flat biodye with an extension of about 2 nm and a mass of 614 u. For this demonstration they employed a near-field Talbot–Lau interferometer. In the same interferometer they also found interference fringes for C60F48, a fluorinated buckyball with a mass of about 1600 u, composed of 108 atoms. Large molecules are already so complex that they give experimental access to some aspects of the quantum-classical interface, i.e. to certain decoherence mechanisms.

Whether objects heavier than the Planck mass (about the weight of a large bacterium) have a de Broglie wavelength is theoretically unclear and experimentally unreachable; above the Planck mass a particle's Compton wavelength would be smaller than the Planck length and its own Schwarzschild radius, a scale at which current theories of physics may break down or need to be replaced by more general ones.
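The scale comparison in the preceding paragraph can be checked with a few lines of arithmetic. This is a rough sketch under stated assumptions: it uses the standard definitions of the Planck mass and Planck length in terms of ħ, c and G, and it uses the reduced Compton wavelength ħ/(mc), for which the crossover falls exactly at the Planck mass. None of these formulas or constant values appear in the article itself, and the function names are hypothetical.

```python
import math

HBAR = 1.0546e-34  # reduced Planck constant, J*s
C = 2.998e8        # speed of light, m/s
G = 6.674e-11      # gravitational constant, m^3 kg^-1 s^-2


def planck_mass():
    """Standard definition: sqrt(hbar * c / G), in kg."""
    return math.sqrt(HBAR * C / G)


def planck_length():
    """Standard definition: sqrt(hbar * G / c^3), in m."""
    return math.sqrt(HBAR * G / C ** 3)


def reduced_compton_wavelength(mass_kg):
    """hbar / (m * c); the reduced form is an assumption of this sketch."""
    return HBAR / (mass_kg * C)


def schwarzschild_radius(mass_kg):
    """2 * G * m / c^2, in m."""
    return 2.0 * G * mass_kg / C ** 2


if __name__ == "__main__":
    m_p = planck_mass()
    print("Planck mass (kg):", m_p)
    for factor in (0.1, 1.0, 10.0):
        m = factor * m_p
        print(factor, reduced_compton_wavelength(m),
              planck_length(), schwarzschild_radius(m))
```

Below the Planck mass the Compton wavelength exceeds both other lengths; above it, the ordering reverses, which is the regime the paragraph describes.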

Recently Couder, Fort et al. showed that macroscopic oil droplets on a vibrating surface can be used as a model of wave–particle duality: a localized droplet creates periodic waves around itself, and its interaction with them leads to quantum-like phenomena, including interference in a double-slit experiment, unpredictable tunneling (depending in a complicated way on the practically hidden state of the field), and orbit quantization (the particle has to "find a resonance" with the field perturbations it creates: after one orbit, its internal phase has to return to the initial state).

Wave–particle duality is deeply embedded in the foundations of quantum mechanics, so well that modern practitioners rarely discuss it as such. In the formalism of the theory, all the information about a particle is encoded in its wave function, a complex-valued function roughly analogous to the amplitude of a wave at each point in space. This function evolves according to a differential equation (generically called the Schrödinger equation), and this equation gives rise to wave-like phenomena such as interference and diffraction.

The particle-like behavior is most evident in phenomena associated with measurement in quantum mechanics. Upon measuring the location of the particle, the wave function will randomly "collapse", or rather "decohere", to a sharply peaked function at some location, with the likelihood of any particular location equal to the squared amplitude of the wave function there. The measurement will return a well-defined position (subject to uncertainty), a property traditionally associated with particles.

Although this picture is somewhat simplified (to the non-relativistic case), it is adequate to capture the essence of current thinking on the phenomena historically called "wave–particle duality". (See also: Particle in a box, Mathematical formulation of quantum mechanics.)
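The measurement rule described above, in which the likelihood of each location equals the squared amplitude of the wave function there, can be illustrated with a toy discrete model. This is a minimal sketch, assuming a wave function sampled at a handful of positions; the amplitudes, positions and function names are made up for illustration and do not come from any particular experiment.

```python
import random


def born_probabilities(amplitudes):
    """Turn complex amplitudes into probabilities: |psi|^2, normalized to sum to 1."""
    weights = [abs(a) ** 2 for a in amplitudes]
    total = sum(weights)
    return [w / total for w in weights]


def measure_position(amplitudes, positions, rng=random):
    """Simulate one position measurement: pick a position with probability |psi|^2."""
    probs = born_probabilities(amplitudes)
    return rng.choices(positions, weights=probs, k=1)[0]


if __name__ == "__main__":
    positions = [-1.0, 0.0, 1.0]   # hypothetical sample points
    amplitudes = [1 + 0j, 1j, 0j]  # hypothetical complex amplitudes
    rng = random.Random(0)         # seeded so the run is repeatable
    outcomes = [measure_position(amplitudes, positions, rng) for _ in range(10)]
    print(born_probabilities(amplitudes))
    print(outcomes)
```

Each simulated measurement returns a single definite position, while the spread of many repeated measurements follows the squared amplitude; the position with zero amplitude is never returned.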

The pilot wave model, originally developed by Louis de Broglie and further developed by David Bohm into the hidden variable theory, proposes that there is no duality, but rather that particles are guided, in a deterministic fashion, by a pilot wave (or its "quantum potential"), which will direct them to areas of constructive interference in preference to areas of destructive interference. This idea is held by a significant minority within the physics community.

At least one physicist considers the “wave-duality” a misnomer, as L. Ballentine, Quantum Mechanics, A Modern Development, p. 4, explains:

Afshar's experiment (2007) has demonstrated that it is possible to simultaneously observe both wave and particle properties of photons. Biddulph (2010) has explained this by applying techniques from deterministic chaos to non-chaotic systems, in particular a computable version of Palmer's Universal Invariant Set proposition (2009), which allows the apparent weirdness of quantum phenomena to be explained as artefacts of the quantum apparatus, not a fundamental property of nature. Waves are shown to be the only means of describing motion, since smooth motion on a continuum is impossible. If a particle visits every point on its trajectory then the motion is an algorithm for each point. Turing has shown that almost all numbers are non-computable, which means that there is no possible algorithm, so the set of points on a trajectory is sparse. This implies that motion is either jerky or wave-like. By removing the need to load the particle with the properties of space and time, a fully deterministic, local and causal description of quantum phenomena is possible by use of a simple dynamical operator on a Universal Invariant Set.

Carver Mead's Collective Electrodynamics: Quantum Foundations of Electromagnetism (2000) analyzes the behavior of electrons and photons purely in terms of electron wave functions, and attributes the apparent particle-like behavior to quantization effects and eigenstates. According to reviewer David Haddon:

Albert Einstein, who, in his search for a Unified Field Theory, did not accept wave–particle duality, wrote:

And theoretical physicist Mendel Sachs, who completed Einstein's unified field theory, writes:

The many-worlds interpretation (MWI) is sometimes presented as a waves-only theory, including by its originator, Hugh Everett, who referred to MWI as "the wave interpretation".

A hypothesis of R. Horodecki relates the particle to the wave. The hypothesis implies that a massive particle is an intrinsically spatially as well as temporally extended wave phenomenon governed by a nonlinear law. According to M. I. Sanduk, this hypothesis is related to a hypothetical bevel gear model, in which both concepts of particle and wave may be attributed to an observation problem of the gear.

One argument is that zero-dimensional mathematical points cannot be observed. Another is that the formal representation of such points, the Dirac delta function, is unphysical, because it cannot be normalized. Parallel arguments apply to pure wave states.

is developed which regards the detection event as establishing a relationship between the quantized field and the detector. The inherent ambiguity associated with applying Heisenberg's uncertainty principle and thus wave–particle duality is subsequently avoided.


Brief history of wave and particle viewpoints

Aristotle was one of the first to publicly hypothesize about the nature of light, proposing that light is a disturbance in the element air (that is, a wave-like phenomenon). On the other hand, Democritus, the original atomist, argued that all things in the universe, including light, are composed of indivisible sub-components (light being some form of solar atom). At the beginning of the 11th century, the Arab scientist Alhazen wrote the first comprehensive treatise on optics, describing refraction, reflection, and the operation of a pinhole lens via rays of light traveling from the point of emission to the eye. He asserted that these rays were composed of particles of light. In 1630, René Descartes popularized and accredited in the West the opposing wave description in his treatise on light, showing that the behavior of light could be re-created by modeling wave-like disturbances in his universal medium ("plenum"). Beginning in 1670 and progressing over three decades, Isaac Newton developed and championed his corpuscular hypothesis, arguing that the perfectly straight lines of reflection demonstrated light's particle nature; only particles could travel in such straight lines. He explained refraction by positing that particles of light accelerated laterally upon entering a denser medium. Around the same time, Newton's contemporaries Robert Hooke and Christiaan Huygens, and later Augustin-Jean Fresnel, mathematically refined the wave viewpoint, showing that if light traveled at different speeds in different media (such as water and air), refraction could be easily explained as the medium-dependent propagation of light waves. The resulting Huygens–Fresnel principle was extremely successful at reproducing light's behavior and, subsequently supported by Thomas Young's discovery of double-slit interference, was the beginning of the end for the particle light camp.

James Clerk Maxwell discovered that he could combine four simple equations, which had been previously discovered, along with a slight modification, to describe self-propagating waves of oscillating electric and magnetic fields. When the propagation speed of these electromagnetic waves was calculated, the speed of light fell out. It quickly became apparent that visible light, ultraviolet light, and infrared light (phenomena thought previously to be unrelated) were all electromagnetic waves of differing frequency. The wave theory had prevailed, or at least it seemed to.

While the 19th century had seen the success of the wave theory at describing light, it had also witnessed the rise of the atomic theory at describing matter. In 1789, Antoine Lavoisier securely differentiated chemistry from alchemy by introducing rigor and precision into his laboratory techniques, allowing him to deduce the conservation of mass and categorize many new chemical elements and compounds. However, the nature of these essential chemical elements remained unknown. In 1799, Joseph Louis Proust advanced chemistry towards the atom by showing that elements combined in definite proportions. This led John Dalton to resurrect Democritus' atom in 1803, when he proposed that elements were composed of indivisible sub-components, which explained why the varying oxides of metals (e.g. stannous oxide and cassiterite, SnO and SnO2 respectively) possess a 1:2 ratio of oxygen to one another. But Dalton and other chemists of the time had not considered that some elements occur in monatomic form (like helium) and others in diatomic form (like hydrogen), or that water was H2O, not the simpler and more intuitive HO; thus the atomic weights presented at the time were varied and often incorrect. Additionally, the formation of HO by two parts of hydrogen gas and one part of oxygen gas would require an atom of oxygen to split in half (or two half-atoms of hydrogen to come together). This problem was solved by Amedeo Avogadro, who studied the reacting volumes of gases as they formed liquids and solids. By postulating that equal volumes of gas contain an equal number of molecules, he was able to show that H2O was formed from two parts H2 and one part O2. By discovering diatomic gases, Avogadro completed the basic atomic theory, allowing the correct molecular formulae of most known compounds, as well as the correct weights of atoms, to be deduced and categorized in a consistent manner. The final stroke in classical atomic theory came when Dmitri Mendeleev saw an order in recurring chemical properties, and created a table presenting the elements in unprecedented order and symmetry. But there were holes in Mendeleev's table, with no element to fill them in. His critics initially cited this as a fatal flaw, but were silenced when new elements were discovered that perfectly fit into these holes. The success of the periodic table effectively converted any remaining opposition to atomic theory; even though no single atom had ever been observed in the laboratory, chemistry was now an atomic science.

Particles of electricity?

At the close of the 19th century, the reductionism of atomic theory began to advance into the atom itself, determining, through physics, the nature of the atom and the operation of chemical reactions. Electricity, first thought to be a fluid, was now understood to consist of particles called electrons. This was first demonstrated by J. J. Thomson in 1897 when, using a cathode ray tube, he found that an electrical charge would travel across a vacuum (which would possess infinite resistance in classical theory). Since the vacuum offered no medium for an electric fluid to travel, this discovery could only be explained via a particle carrying a negative charge and moving through the vacuum. This electron flew in the face of classical electrodynamics, which had successfully treated electricity as a fluid for many years (leading to the invention of batteries, electric motors, dynamos, and arc lamps). More importantly, the intimate relation between electric charge and electromagnetism had been well documented following the discoveries of Michael Faraday and Clerk Maxwell. Since electromagnetism was known to be a wave generated by a changing electric or magnetic field (a continuous, wave-like entity itself), an atomic/particle description of electricity and charge was a non sequitur. And classical electrodynamics was not the only classical theory rendered incomplete.

Radiation quantization

Black-body radiation, the emission of electromagnetic energy due to an object's heat, could not be explained from classical arguments alone. The equipartition theorem of classical mechanics, the basis of all classical thermodynamic theories, stated that an object's energy is partitioned equally among the object's vibrational modes. This worked well when describing thermal objects, whose vibrational modes were defined as the speeds of their constituent atoms, and the speed distribution derived from egalitarian partitioning of these vibrational modes closely matched experimental results. Speeds much higher than the average speed were suppressed by the fact that kinetic energy is quadratic (doubling the speed requires four times the energy), so the number of atoms occupying high-energy modes (high speeds) quickly drops off because the constant, equal partition can excite successively fewer atoms. Low-speed modes would ostensibly dominate the distribution, since low-speed modes would require ever less energy, and prima facie a zero-speed mode would require zero energy and its energy partition would contain an infinite number of atoms. But this would only occur in the absence of atomic interaction; when collisions are allowed, the low-speed modes are immediately suppressed by jostling from the higher-energy atoms, exciting them to higher-energy modes. An equilibrium is swiftly reached where most atoms occupy a speed determined by the temperature of the object (thus defining temperature through the average kinetic energy of the object's atoms).
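The link between temperature and average kinetic energy described above is usually written as follows. This is a sketch for an ideal monatomic gas, which is an assumption made here since the text does not specify the system; m is the atomic mass, k_B the Boltzmann constant and T the absolute temperature.

```latex
\left\langle \tfrac{1}{2} m v^{2} \right\rangle = \tfrac{3}{2}\, k_{B} T
\qquad\Longrightarrow\qquad
v_{\mathrm{rms}} = \sqrt{\frac{3 k_{B} T}{m}}
```

Under this assumption the average kinetic energy is proportional to T, while the typical speed grows only as the square root of T.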

But applying the same reasoning to the electromagnetic emission of such a thermal object was not so successful. It had long been known that thermal objects emit light. Hot metal glows red, and upon further heating, white (this is the underlying principle of the incandescent bulb). Since light was known to be waves of electromagnetism, physicists hoped to describe this emission via classical laws. This became known as the black body problem. Since the equipartition theorem worked so well in describing the vibrational modes of the thermal object itself, it was natural to assume that it would perform equally well in describing the radiative emission of such objects. But a problem quickly arose when determining the vibrational modes of light. To simplify the problem (by limiting the vibrational modes) a longest allowable wavelength was defined by placing the thermal object in a cavity. Any electromagnetic mode at equilibrium (i.e. any standing wave) could only exist if it used the walls of the cavity as nodes. Thus there were no waves/modes with a wavelength larger than twice the length (L) of the cavity.

The cavity placed no corresponding limit on how short a wavelength could be, so the number of allowed modes was unbounded. Giving each of these modes an equal share of energy led classical physics to predict that an ideal black body at thermal equilibrium would emit radiation with infinite power, a result known as the ultraviolet catastrophe (or Rayleigh–Jeans catastrophe).
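The mode counting behind the black body problem can be sketched numerically. The snippet below is a minimal illustration, not a derivation: it assumes a one-dimensional cavity of length L with nodes at both walls, and it assumes the classical equipartition share of k_B·T per mode. The function names are hypothetical.

```python
K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)


def allowed_wavelengths(cavity_length, n_modes):
    """Wavelengths of the first n_modes standing waves with nodes at both walls.

    Assumes a 1-D cavity: the n-th mode fits n half-wavelengths into the
    cavity, so its wavelength is 2L/n and the longest is 2L.
    """
    return [2.0 * cavity_length / n for n in range(1, n_modes + 1)]


def equipartition_energy(n_modes, temperature_k):
    """Classical total energy if every one of n_modes receives k_B*T (assumption)."""
    return n_modes * K_B * temperature_k


if __name__ == "__main__":
    print(allowed_wavelengths(1.0, 4))
    # The cavity sets no shortest wavelength, so nothing stops the mode count
    # (and with it the classical energy) from growing without limit.
    for n in (10, 1_000, 100_000):
        print(n, equipartition_energy(n, 300.0))
```

The wavelengths are bounded above by 2L but not below, so including ever shorter wavelengths adds modes and energy without limit. That is the divergence the classical treatment runs into.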

The solution arrived in 1900 when Max Planck hypothesized that the frequency of light emitted by the black body depended on the frequency of the oscillator that emitted it, and that the energy of these oscillators increased linearly with frequency (according to his constant h, where E = hν). This was not an unsound proposal considering that macroscopic oscillators operate similarly: when studying five simple harmonic oscillators of equal amplitude but different frequency, the oscillator with the highest frequency possesses the highest energy (though this relationship is not linear like Planck's). By demanding that high-frequency light must be emitted by an oscillator of equal frequency, and further requiring that this oscillator occupy higher energy than one of a lesser frequency, Planck avoided any catastrophe: giving an equal partition to high-frequency oscillators produced successively fewer oscillators and less emitted light. And as in the Maxwell–Boltzmann distribution, the low-frequency, low-energy oscillators were suppressed by the onslaught of thermal jiggling from higher-energy oscillators, which necessarily increased their energy and frequency.
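How the E = hν assumption removes the catastrophe can be seen by comparing two textbook formulas for spectral radiance. The Rayleigh–Jeans (classical) and Planck expressions used below are not stated in the text above and are brought in as standard results; the temperature and frequencies are arbitrary illustrative choices.

```python
import math

H = 6.62607015e-34   # Planck constant, J*s (exact SI value)
K_B = 1.380649e-23   # Boltzmann constant, J/K (exact SI value)
C = 299_792_458.0    # speed of light in vacuum, m/s


def rayleigh_jeans(frequency_hz, temperature_k):
    """Classical spectral radiance: grows as frequency squared, without bound."""
    return 2.0 * frequency_hz**2 * K_B * temperature_k / C**2


def planck(frequency_hz, temperature_k):
    """Planck spectral radiance: high frequencies are exponentially suppressed."""
    x = H * frequency_hz / (K_B * temperature_k)  # photon energy over thermal energy
    return (2.0 * H * frequency_hz**3 / C**2) / math.expm1(x)


if __name__ == "__main__":
    temperature = 5000.0  # kelvin, illustrative value
    for nu in (1e12, 1e14, 1e15, 1e16):
        print(f"{nu:.0e} Hz  classical={rayleigh_jeans(nu, temperature):.3e}"
              f"  planck={planck(nu, temperature):.3e}")
```

At low frequencies the two expressions agree closely. At high frequencies the classical one keeps growing, while the Planck form falls off because an oscillator of energy hν far above k_B·T is rarely excited.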

The most revolutionary aspect of Planck's treatment of the black body is that it inherently relies on an integer number of oscillators in thermal equilibrium with the electromagnetic field. These oscillators give their entire energy to the electromagnetic field, creating a quantum of light, as often as they are excited by the electromagnetic field, absorbing a quantum of light and beginning to oscillate at the corresponding frequency. Planck had intentionally created an atomic theory of the black body, but had unintentionally generated an atomic theory of light, where the black body never generates quanta of light at a given frequency with an energy less than hν. However, upon realizing that he had quantized the electromagnetic field, he denounced particles of light as a limitation of his approximation, not a property of reality.

The photoelectric effect illuminated

Yet while Planck had solved the ultraviolet catastrophe by using atoms and a quantized electromagnetic field, most physicists immediately agreed that Planck's "light quanta" were unavoidable flaws in his model. A more complete derivation of black body radiation would produce a fully continuous, fully wave-like electromagnetic field with no quantization. However, in 1905 Albert Einstein took Planck's black body model on its own terms and saw in it a wonderful solution to another outstanding problem of the day: the photoelectric effect. Ever since the discovery of electrons eight years previously, electrons had been the thing to study in physics laboratories worldwide. Nikola Tesla discovered in 1901 that when a metal was illuminated by high-frequency light (e.g. ultraviolet light), electrons were ejected from the metal at high energy. This work was based on the previous knowledge that light incident upon metals produces a current, but Tesla was the first to describe it as a particle phenomenon.

The following year, Philipp Lenard discovered that (within the range of the experimental parameters he was using) the energy of these ejected electrons did not depend on the intensity of the incoming light, but on its frequency. So if one shines a little low-frequency light upon a metal, a few low-energy electrons are ejected. If one now shines a very intense beam of low-frequency light upon the same metal, a whole slew of electrons are ejected; however, they possess the same low energy, and there are merely more of them. In order to get high-energy electrons, one must illuminate the metal with high-frequency light. The more light there is, the more electrons are ejected. Like blackbody radiation, this was at odds with a theory invoking continuous transfer of energy between radiation and matter. However, it can still be explained using a fully classical description of light, as long as matter is quantum mechanical in nature.
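Lenard's observations are what one would expect if each electron receives a single lump of energy hν, using Planck's relation E = hν. The sketch below is an idealized illustration, not a model of any real experiment. The work function (the energy needed to free an electron from the metal) comes from Einstein's standard photoelectric equation rather than from the text, the value 2.3 eV is arbitrary, and the rule "one photon ejects at most one electron" is a simplifying assumption. Function names are hypothetical.

```python
H_EV_S = 4.135667696e-15  # Planck constant in eV*s


def photon_energy_ev(frequency_hz):
    """Energy of one light quantum, E = h * nu, in electronvolts."""
    return H_EV_S * frequency_hz


def max_kinetic_energy_ev(frequency_hz, work_function_ev):
    """Largest kinetic energy of an ejected electron (zero if none can escape)."""
    return max(0.0, photon_energy_ev(frequency_hz) - work_function_ev)


def ejected_electrons(frequency_hz, n_photons, work_function_ev):
    """Return (electron count, energy per electron) in the idealized picture.

    Assumption: every photon above threshold ejects exactly one electron.
    """
    energy = max_kinetic_energy_ev(frequency_hz, work_function_ev)
    count = n_photons if energy > 0.0 else 0
    return count, energy


if __name__ == "__main__":
    work_function = 2.3  # eV, illustrative value only
    # Same frequency, 100x the intensity: more electrons, same energy each.
    print(ejected_electrons(1.0e15, 10, work_function))
    print(ejected_electrons(1.0e15, 1000, work_function))
    # Higher frequency: each electron carries more energy.
    print(ejected_electrons(1.5e15, 10, work_function))
```

In this picture intensity only sets how many electrons come out, while frequency alone sets how energetic each one is.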

If one used Planck's energy quanta, and demanded that electromagnetic radiation at a given frequency could only transfer energy to matter in integer multiples of an energy quantum hν, then the photoelectric effect could be explained very simply. Low-frequency light only ejects low-energy electrons because each electron is excited by the absorption of a single photon. Increasing the intensity of the low-frequency light (increasing the number of photons) only increases the number of excited electrons, not their energy, because the energy of each photon remains low. Only by increasing the frequency of the light, and thus increasing the energy of the photons, can one eject electrons with higher energy. Thus, using Planck's constant h to determine the energy of the photons based upon their frequency, the energy of ejected electrons should also increase linearly with frequency, the gradient of the line being Planck's constant. These results were not confirmed until 1915, when Robert Andrews Millikan, who had previously determined the charge of the electron, produced experimental results in perfect accord with Einstein's predictions.

While the energy of ejected electrons reflected Planck's constant, the existence of photons was not explicitly proven until the discovery of the photon antibunching effect, a modern version of which can be performed in undergraduate-level labs. This phenomenon could only be explained via photons, and not through any semi-classical theory (which could alternatively explain the photoelectric effect). When Einstein received his Nobel Prize in 1921, it was not for his more difficult and mathematically laborious special and general relativity, but for the simple, yet totally revolutionary, suggestion of quantized light. Einstein's "light quanta" would not be called photons until 1926, but even in 1905 they represented the quintessential example of wave–particle duality. Electromagnetic radiation propagates following linear wave equations, but can only be emitted or absorbed as discrete elements, thus acting as a wave and a particle simultaneously.
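The linear dependence of electron energy on frequency described above can be sketched numerically. The following is only an illustration: it assumes the standard photoelectric relation (maximum electron energy = hν minus a work function), and the work function value is an approximate textbook figure of roughly the size quoted for potassium, not a number taken from this article. The function names are hypothetical.

```python
# Illustrative sketch of the photoelectric relation E_max = h*nu - W.
# The work function is an assumed, approximate textbook value,
# not a figure taken from the article.

H_EV_S = 4.135667696e-15   # Planck's constant in eV*s
C_M_S = 299_792_458.0      # speed of light in m/s
WORK_FUNCTION_EV = 2.3     # assumed work function (roughly potassium)


def photon_energy_ev(frequency_hz):
    """Energy of a single photon of the given frequency, in eV."""
    return H_EV_S * frequency_hz


def max_electron_energy_ev(frequency_hz, work_function_ev=WORK_FUNCTION_EV):
    """Maximum kinetic energy of an ejected electron in eV, or None if
    a single photon carries less energy than the work function."""
    surplus = photon_energy_ev(frequency_hz) - work_function_ev
    return surplus if surplus > 0 else None


def fitted_slope(frequencies_hz):
    """Least-squares slope of electron energy against frequency,
    using only frequencies at which electrons are actually ejected."""
    points = [(f, max_electron_energy_ev(f)) for f in frequencies_hz]
    points = [(f, e) for f, e in points if e is not None]
    n = len(points)
    mean_f = sum(f for f, _ in points) / n
    mean_e = sum(e for _, e in points) / n
    num = sum((f - mean_f) * (e - mean_e) for f, e in points)
    den = sum((f - mean_f) ** 2 for f, _ in points)
    return num / den


if __name__ == "__main__":
    red = C_M_S / 700e-9    # frequency of 700 nm light
    blue = C_M_S / 450e-9   # frequency of 450 nm light
    print("red: ", max_electron_energy_ev(red))
    print("blue:", max_electron_energy_ev(blue))
    print("slope (eV*s):", fitted_slope([6e14, 8e14, 1.0e15, 1.2e15]))
```

Intensity does not appear anywhere in the calculation. In this picture it changes only how many photons arrive, and hence how many electrons are ejected, while the slope of energy against frequency recovers Planck's constant.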

Huygens and Newton

The earliest comprehensive theory of light was advanced by Christiaan Huygens, who proposed a wave theory of light, and in particular demonstrated how waves might interfere to form a wavefront, propagating in a straight line. However, the theory had difficulties in other matters, and was soon overshadowed by Isaac Newton's corpuscular theory of light. That is, Newton proposed that light consisted of small particles, with which he could easily explain the phenomenon of reflection. With considerably more difficulty, he could also explain refraction through a lens, and the splitting of sunlight into a rainbow by a prism. Newton's particle viewpoint went essentially unchallenged for over a century.

Young, Fresnel, and Maxwell

In the early 19th century, the double-slit experiments by Young and Fresnel provided evidence for Huygens' wave theory. The double-slit experiments showed that when light is sent through two closely spaced slits, a characteristic interference pattern is observed, very similar to the pattern resulting from the interference of water waves; the wavelength of light can be computed from such patterns. The wave view did not immediately displace the ray and particle view, but began to dominate scientific thinking about light in the mid 19th century, since it could explain polarization phenomena that the alternatives could not.
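As a rough illustration of how a wavelength can be computed from an interference pattern, the sketch below uses the standard small-angle relation for two slits: fringe spacing = wavelength × screen distance / slit separation. The relation, the function names, and the sample numbers are assumptions added for illustration and are not taken from the article.

```python
import math


def wavelength_from_fringes(slit_separation_m, screen_distance_m, fringe_spacing_m):
    """Wavelength implied by the spacing of adjacent bright fringes,
    using the small-angle relation lambda = d * dy / L."""
    return slit_separation_m * fringe_spacing_m / screen_distance_m


def relative_intensity(position_m, wavelength_m, slit_separation_m, screen_distance_m):
    """Idealised two-slit intensity (0..1) at a point on the screen,
    ignoring the single-slit diffraction envelope."""
    phase = math.pi * slit_separation_m * position_m / (wavelength_m * screen_distance_m)
    return math.cos(phase) ** 2


if __name__ == "__main__":
    # Hypothetical measurement: slits 0.25 mm apart, screen 1 m away,
    # bright fringes 2.2 mm apart.
    lam = wavelength_from_fringes(0.25e-3, 1.0, 2.2e-3)
    print(f"wavelength: {lam * 1e9:.0f} nm")
    print("midway between fringes:", relative_intensity(1.1e-3, lam, 0.25e-3, 1.0))
```

With these sample numbers the implied wavelength falls in the green part of the visible spectrum, and the intensity midway between two bright fringes is essentially zero, which is the dark band of the pattern.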

In the late 19th century, James Clerk Maxwell explained light as the propagation of electromagnetic waves according to the Maxwell equations. These equations were verified by experiment by Heinrich Hertz in 1887, and the wave theory became widely accepted.

Planck's formula for black-body radiation

In 1901, Max Planck published an analysis that succeeded in reproducing the observed spectrum of light emitted by a glowing object. To accomplish this, Planck had to make an ad hoc mathematical assumption of quantized energy of the oscillators (atoms of the black body) that emit radiation. It was Einstein who later proposed that it is the electromagnetic radiation itself that is quantized, and not the energy of radiating atoms.
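The effect of the quantization assumption can be seen by comparing Planck's formula with the classical Rayleigh–Jeans expression, which treats energy exchange as continuous. Neither formula is written out in this article; the sketch below uses their standard textbook forms, and the function names and sample temperature are illustrative assumptions.

```python
import math

H = 6.62607015e-34    # Planck's constant, J*s
K_B = 1.380649e-23    # Boltzmann constant, J/K
C = 299_792_458.0     # speed of light, m/s


def planck_radiance(frequency_hz, temperature_k):
    """Planck's law: spectral radiance per unit frequency."""
    x = H * frequency_hz / (K_B * temperature_k)
    return (2.0 * H * frequency_hz ** 3 / C ** 2) / math.expm1(x)


def rayleigh_jeans_radiance(frequency_hz, temperature_k):
    """Classical (continuous-energy) prediction for the same quantity."""
    return 2.0 * frequency_hz ** 2 * K_B * temperature_k / C ** 2


if __name__ == "__main__":
    temperature = 5000.0  # kelvin, an arbitrary glowing-object temperature
    for nu in (1e11, 1e13, 1e14, 1e15, 3e15):
        ratio = planck_radiance(nu, temperature) / rayleigh_jeans_radiance(nu, temperature)
        print(f"{nu:.0e} Hz  Planck / classical = {ratio:.3e}")
```

At low frequencies the two expressions nearly coincide. At high frequencies the classical value keeps growing while Planck's formula falls off steeply, which is the behaviour the quantized-oscillator assumption was introduced to reproduce.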

Einstein's explanation of the photoelectric effect

Albert Einstein provided an explanation of the photoelectric effect, a hitherto troubling experiment that the wave theory of light seemed incapable of explaining. He did so by postulating the existence of photons, quanta of light energy with particulate qualities.

In the photoelectric effect, it was observed that shining a light on certain metals would lead to an electric current in a circuit. Presumably, the light was knocking electrons out of the metal, causing current to flow. However, using the case of potassium as an example, it was also observed that while a dim blue light was enough to cause a current, even the strongest, brightest red light available with the technology of the time caused no current at all. According to the classical theory of light and matter, the strength or amplitude of a light wave was in proportion to its brightness: a bright light should have been easily strong enough to create a large current. Yet, oddly, this was not so.

Einstein explained this conundrum by postulating
