Entropy in thermodynamics and information theory


There are close parallels between the mathematical expressions for the thermodynamic entropy

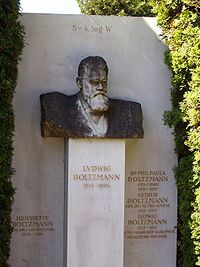

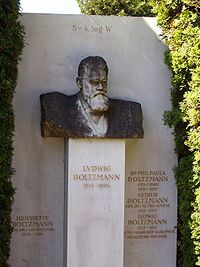

, usually denoted by S, of a physical system in the statistical thermodynamics established by Ludwig Boltzmann

and J. Willard Gibbs in the 1870s; and the information-theoretic entropy

, usually expressed as H, of Claude Shannon

and Ralph Hartley

developed in the 1940s. Shannon, although not initially aware of this similarity, commented on it upon publicizing information theory in A Mathematical Theory of Communication

.

This article explores what links there are between the two concepts, and how far they can be regarded as connected.


In 2009, Mahulikar & Herwig redefined thermodynamic negentropy as the specific entropy deficit of the dynamically ordered sub-system relative to its surroundings. This definition enabled the formulation of the Negentropy Principle, which is mathematically shown to follow from the 2nd Law of Thermodynamics, during order existence.

often speaks of the thermodynamic entropy of black holes in terms of their information content. Do black holes destroy information? It appears that there are deep relations between the entropy of a black hole and information loss. See Black hole thermodynamics and Black hole information paradox.

(One could speak of the "joint entropy" of these distributions by considering them independent, but since they are not jointly observable, they cannot be considered as a joint distribution.)

It is well known that a Shannon-based definition of information entropy leads in the classical case to the Boltzmann entropy. It is tempting to regard the Von Neumann entropy as the corresponding quantum mechanical definition. But the latter is problematic from a quantum information point of view. Consequently Stotland, Pomeransky, Bachmat and Cohen have introduced a new definition of entropy that reflects the inherent uncertainty of quantum mechanical states. This definition allows one to distinguish between the minimum uncertainty entropy of pure states and the excess statistical entropy of mixtures.

provides a mathematical justification of the second law of thermodynamics under these principles, and precisely defines the limitations of the applicability of that law to the microscopic realm of individual particle movements.


The article Conceptual inadequacy of the Shannon information in quantum measurement, published in 2001 by Anton Zeilinger and Caslav Brukner, synthesized and developed these remarks. The so-called Zeilinger's principle suggests that the quantization observed in QM could be bound to information quantization (one cannot observe less than one bit, and what is not observed is by definition "random"). But these claims remain highly controversial.


Discrete case

The defining expression for entropy in the theory of statistical mechanics, established by Ludwig Boltzmann and J. Willard Gibbs in the 1870s, is of the form:

$$S = -k \sum_i p_i \ln p_i$$

where $p_i$ is the probability of the microstate i taken from an equilibrium ensemble.

The defining expression for entropy in the theory of information, established by Claude E. Shannon in 1948, is of the form:

$$H = -\sum_i p_i \log p_i$$

where $p_i$ is the probability of the message $m_i$ taken from the message space M.

Mathematically H may also be seen as an average information, taken over the message space, because when a certain message occurs with probability $p_i$, the information $-\log(p_i)$ will be obtained.
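The reading of H as an average information can be checked numerically. The following is a minimal Python sketch, not a reference implementation; the function names `shannon_entropy` and `surprisal` and the two example coins are illustrative assumptions, not part of the original article.

```python
import math

def surprisal(p, base=2.0):
    """Information -log(p) obtained when an outcome of probability p occurs."""
    return -math.log(p, base)

def shannon_entropy(probs, base=2.0):
    """Return H = -sum(p * log(p)); the unit is set by `base` (2 gives bits)."""
    if any(p < 0 for p in probs) or abs(sum(probs) - 1.0) > 1e-9:
        raise ValueError("probs must be non-negative and sum to 1")
    # Outcomes with p == 0 contribute nothing, since p*log(p) -> 0 as p -> 0.
    return sum(p * surprisal(p, base) for p in probs if p > 0)

fair_coin = [0.5, 0.5]      # hypothetical example distribution
biased_coin = [0.9, 0.1]    # hypothetical example distribution

print(shannon_entropy(fair_coin))    # one bit per toss
print(shannon_entropy(biased_coin))  # less than one bit (about 0.469)
```

Writing the entropy as a probability-weighted sum of `surprisal` values makes the "average information" interpretation explicit: rare messages carry more information each, but they occur less often.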

If all the microstates are equiprobable (a microcanonical ensemble), the statistical thermodynamic entropy reduces to the form on Boltzmann's tombstone,

$$S = k \ln W$$

where W is the number of microstates.

If all the messages are equiprobable, the information entropy reduces to the Hartley entropy

$$H = \log |M|$$

where $|M|$ is the cardinality of the message space M.

The logarithm in the thermodynamic definition is the natural logarithm. It can be shown that the Gibbs entropy formula, with the natural logarithm, reproduces all of the properties of the macroscopic classical thermodynamics of Clausius. (See article: Entropy (statistical views).)
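The two equiprobable limits can be verified with a short numerical check. This is a sketch under stated assumptions: the helper names and the choice of W = 1000 states are hypothetical, and the value of Boltzmann's constant is the exact SI figure.

```python
import math

K_BOLTZMANN = 1.380649e-23  # J/K (exact SI value)

def gibbs_entropy(probs):
    """S = -k * sum(p ln p), in J/K."""
    return -K_BOLTZMANN * sum(p * math.log(p) for p in probs if p > 0)

def shannon_entropy_nats(probs):
    """H = -sum(p ln p), in nats."""
    return -sum(p * math.log(p) for p in probs if p > 0)

W = 1000                      # hypothetical number of microstates / messages
uniform = [1.0 / W] * W       # every state equally probable

print(gibbs_entropy(uniform), K_BOLTZMANN * math.log(W))   # S versus k ln W
print(shannon_entropy_nats(uniform), math.log(W))          # H versus ln |M|
```

Both pairs agree to floating-point precision, which is the sense in which the general sums "reduce to" the Boltzmann and Hartley forms.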

The logarithm can also be taken to the natural base in the case of information entropy. This is equivalent to choosing to measure information in nats instead of the usual bits. In practice, information entropy is almost always calculated using base 2 logarithms, but this distinction amounts to nothing other than a change in units. One nat is about 1.44 bits.
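The change of units is a single multiplicative factor, 1/ln 2. A small Python sketch, with illustrative helper names and an arbitrary example distribution:

```python
import math

BITS_PER_NAT = 1.0 / math.log(2.0)  # equals log2(e), roughly 1.44

def nats_to_bits(h_nats):
    return h_nats * BITS_PER_NAT

def bits_to_nats(h_bits):
    return h_bits * math.log(2.0)

probs = [0.5, 0.25, 0.25]  # hypothetical example distribution
h_nats = -sum(p * math.log(p) for p in probs)    # natural logarithm
h_bits = -sum(p * math.log2(p) for p in probs)   # base 2 logarithm

print(h_bits, nats_to_bits(h_nats))  # the same entropy in the same unit
```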

The presence of Boltzmann's constant k in the thermodynamic definitions is a historical accident, reflecting the conventional units of temperature. It is there to make sure that the statistical definition of thermodynamic entropy matches the classical entropy of Clausius, thermodynamically conjugate to temperature. For a simple compressible system that can only perform volume work, the first law of thermodynamics becomes

$$dE = -p\,dV + T\,dS$$

But one can equally well write this equation in terms of what physicists and chemists sometimes call the 'reduced' or dimensionless entropy, σ = S/k, so that

$$dE = -p\,dV + kT\,d\sigma$$

Just as S is conjugate to T, so σ is conjugate to kT (the energy that is characteristic of T on a molecular scale).

Continuous case

The most obvious extension of the Shannon entropy is the differential entropy,

$$H[f] = -\int f(x) \log f(x)\,dx$$

As long as f(x) is a probability density function (p.d.f.), H represents the entropy (average information, disorder, diversity, etc.) of f(x). For any uniform p.d.f. f(x), the exponential of H is the volume covered by f(x) (in analogy to the cardinality in the discrete case). The volume covered by an n-dimensional multivariate Gaussian distribution with moment matrix M is proportional to the volume of the ellipsoid of concentration and is equal to $\sqrt{(2\pi e)^n \det(M)}$. The volume is always positive.

Entropy may be maximized using Gaussian adaptation, one of the evolutionary algorithms, keeping the mean fitness (i.e. the probability of becoming a parent to new individuals in the population) constant, and without the need for any knowledge about entropy as a criterion function. This is illustrated by the figure below, showing Gaussian adaptation climbing a mountain crest in a phenotypic landscape. The lines in the figure are part of a contour line enclosing a region of acceptability in the landscape. At the start the cluster of red points represents a very homogeneous population with small variances in the phenotypes. Evidently, even small environmental changes in the landscape may cause the process to become extinct.

After a sufficiently large number of generations, the increase in entropy may result in the green cluster. The mean fitness is in fact the same for both the red and the green clusters (about 65%). The effect of this adaptation is not very salient in a 2-dimensional case, but in a high-dimensional case, the efficiency of the search process may be increased by many orders of magnitude.

Besides, a Gaussian distribution has the highest entropy compared to other distributions having the same second order moment matrix (Middleton 1960).

But it turns out that the differential entropy is not in general a good measure of uncertainty or information. For example, it can be negative; it is also not invariant under continuous coordinate transformations. Jaynes showed in fact that the expression above is not the correct limit of the expression for a finite set of probabilities.
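Both defects show up already for a uniform density. The sketch below is illustrative only: it estimates the differential entropy by a midpoint-rule integral (an assumed numerical method, with hypothetical function names) for the same interval described once in metres and once in centimetres.

```python
import math

def differential_entropy(pdf, lo, hi, steps=10_000):
    """Midpoint-rule estimate of -integral of f(x) ln f(x) dx over [lo, hi], in nats."""
    dx = (hi - lo) / steps
    total = 0.0
    for i in range(steps):
        fx = pdf(lo + (i + 0.5) * dx)
        if fx > 0:
            total -= fx * math.log(fx) * dx
    return total

def uniform_pdf(width):
    """Density of a uniform distribution on [0, width]."""
    return lambda x: 1.0 / width if 0.0 <= x <= width else 0.0

# The same physical interval, expressed in two coordinate systems.
h_metres = differential_entropy(uniform_pdf(0.5), 0.0, 0.5)         # width 0.5 m
h_centimetres = differential_entropy(uniform_pdf(50.0), 0.0, 50.0)  # width 50 cm

print(h_metres)                  # ln(0.5), a negative entropy
print(h_centimetres - h_metres)  # ln(100): the value depends on the units
```

A mere rescaling of the coordinate shifts the result by the logarithm of the scale factor, so the number cannot by itself be an absolute measure of uncertainty.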

The correct expression, appropriate for the continuous case, is the relative entropy of a distribution, defined as the Kullback-Leibler divergence from the distribution to a reference measure m(x),

$$D_{\mathrm{KL}}(f \,\|\, m) = \int f(x) \log \frac{f(x)}{m(x)}\,dx$$

(or sometimes the negative of this).

The relative entropy carries over directly from discrete to continuous distributions, and is invariant under coordinate reparametrisations. The relative entropy is, moreover, always positive, or zero in the case that f(x) = m(x).
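Since the relative entropy has the same form for discrete distributions, its sign property can be illustrated with a discrete sketch. The function name `kl_divergence` and the two example distributions are assumptions made for illustration, and the reference measure is taken here to be a normalised probability distribution.

```python
import math

def kl_divergence(f, m):
    """D(f || m) = sum_i f_i ln(f_i / m_i), in nats, for discrete distributions."""
    if len(f) != len(m):
        raise ValueError("f and m must have the same length")
    total = 0.0
    for fi, mi in zip(f, m):
        if fi > 0:
            if mi <= 0:
                return math.inf  # f puts weight where the reference has none
            total += fi * math.log(fi / mi)
    return total

f = [0.5, 0.5]   # hypothetical distribution
m = [0.9, 0.1]   # hypothetical reference distribution

print(kl_divergence(f, m))  # positive (about 0.511 nats)
print(kl_divergence(f, f))  # zero when the two distributions coincide
```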

Theoretical relationship

Despite all that, there is an important difference between the two quantities. The information entropy H can be calculated for any probability distribution (if the "message" is taken to be that the event i which had probability $p_i$ occurred, out of the space of the events possible). But the thermodynamic entropy S refers to thermodynamic probabilities $p_i$ specifically.

Furthermore, the thermodynamic entropy S is dominated by different arrangements of the system, and in particular its energy, that are possible on a molecular scale. In comparison, information entropy of any macroscopic event is so small as to be completely irrelevant.

However, a connection can be made between the two, if the probabilities in question are the thermodynamic probabilities $p_i$: the (reduced) Gibbs entropy σ can then be seen as simply the amount of Shannon information needed to define the detailed microscopic state of the system, given its macroscopic description. Or, in the words of G. N. Lewis writing about chemical entropy in 1930, "Gain in entropy always means loss of information, and nothing more". To be more concrete, in the discrete case using base two logarithms, the reduced Gibbs entropy is equal to the minimum number of yes/no questions that need to be answered in order to fully specify the microstate, given that we know the macrostate.
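The yes/no-question reading is easiest to see for equiprobable microstates. The sketch below assumes a hypothetical macrostate compatible with 8 equally likely microstates and uses bisection as the questioning strategy; both choices are illustrative.

```python
import math

def questions_to_identify(target, n_states):
    """Count the yes/no questions bisection needs to pin down `target` in range(n_states)."""
    lo, hi = 0, n_states   # candidate microstates are lo .. hi - 1
    questions = 0
    while hi - lo > 1:
        mid = (lo + hi) // 2
        questions += 1     # ask: "is the microstate index below mid?"
        if target < mid:
            hi = mid
        else:
            lo = mid
    return questions

n_states = 8                        # hypothetical number of equiprobable microstates
entropy_bits = math.log2(n_states)  # entropy of the uniform distribution, in bits

counts = [questions_to_identify(t, n_states) for t in range(n_states)]
print(entropy_bits, counts)  # every microstate takes log2(8) questions
```

For non-uniform probabilities the entropy in bits is a lower bound on the average number of questions rather than an exact count for every microstate.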

Furthermore, the prescription to find the equilibrium distributions of statistical mechanics, such as the Boltzmann distribution, by maximising the Gibbs entropy subject to appropriate constraints (the Gibbs algorithm), can now be seen as something not unique to thermodynamics, but as a principle of general relevance in all sorts of statistical inference, if it is desired to find a maximally uninformative probability distribution, subject to certain constraints on the behaviour of its averages. (These perspectives are explored further in the article Maximum entropy thermodynamics.)
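The maximisation can be spot-checked numerically. This is only a sketch: the three energy levels, the value of beta and the perturbation direction are hypothetical choices, and the check compares the Boltzmann distribution against two nearby distributions rather than performing a full optimisation.

```python
import math

def entropy_nats(probs):
    return -sum(p * math.log(p) for p in probs if p > 0)

def boltzmann_distribution(energies, beta):
    """p_i proportional to exp(-beta * E_i), normalised to sum to 1."""
    weights = [math.exp(-beta * e) for e in energies]
    z = sum(weights)
    return [w / z for w in weights]

def mean(values, probs):
    return sum(v * p for v, p in zip(values, probs))

energies = [0.0, 1.0, 2.0]   # hypothetical energy levels, arbitrary units
beta = 1.0                   # hypothetical inverse temperature 1/(kT)
p_eq = boltzmann_distribution(energies, beta)

# Moving probability along (1, -2, 1) leaves both the normalisation and the
# mean energy unchanged for these three equally spaced levels.
direction = [1.0, -2.0, 1.0]
alternatives = [[p + eps * d for p, d in zip(p_eq, direction)]
                for eps in (-0.02, 0.02)]

print(entropy_nats(p_eq))
for p_alt in alternatives:
    # Same constraints, lower entropy than the Boltzmann distribution.
    print(mean(energies, p_alt) - mean(energies, p_eq), entropy_nats(p_alt))
```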

Szilard's engine

A neat physical thought-experiment demonstrating how just the possession of information might in principle have thermodynamic consequences was established in 1929 by Leó Szilárd, in a refinement of the famous Maxwell's demon scenario.

Consider Maxwell's set-up, but with only a single gas particle in a box. If the supernatural demon knows which half of the box the particle is in (equivalent to a single bit of information), it can close a shutter between the two halves of the box, close a piston unopposed into the empty half of the box, and then extract $kT \ln 2$ joules of useful work if the shutter is opened again. The particle can then be left to isothermally expand back to its original equilibrium occupied volume. In just the right circumstances therefore, the possession of a single bit of Shannon information (a single bit of negentropy in Brillouin's term) really does correspond to a reduction in the entropy of the physical system. The global entropy is not decreased, but information-to-energy conversion is possible.
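The amount of work follows from the isothermal expansion of the one-particle gas from half the box to the full box. The sketch below assumes the single-particle ideal-gas law P = kT/V (an assumption not stated in the text) and a temperature of 300 K chosen purely for illustration; the function names are hypothetical.

```python
import math

K_BOLTZMANN = 1.380649e-23  # J/K (exact SI value)

def szilard_work(temperature_k):
    """Closed-form work per bit: k T ln 2, in joules."""
    return K_BOLTZMANN * temperature_k * math.log(2.0)

def isothermal_expansion_work(temperature_k, v_start, v_end, steps=10_000):
    """Midpoint-rule integral of P dV with P = kT / V for one particle."""
    dv = (v_end - v_start) / steps
    return sum(K_BOLTZMANN * temperature_k / (v_start + (i + 0.5) * dv) * dv
               for i in range(steps))

T = 300.0  # hypothetical temperature in kelvin
print(szilard_work(T))                         # about 2.87e-21 J
print(isothermal_expansion_work(T, 0.5, 1.0))  # expansion from half box to full box
```

Only the ratio of final to initial volume matters, which is why one bit (a factor of two in volume) always yields the same work at a given temperature.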

The principle has actually been demonstrated, using as the demon a phase-contrast microscope equipped with a high-speed camera connected to a computer. In this experiment, information-to-energy conversion is performed on a Brownian particle by means of feedback control; that is, synchronizing the work given to the particle with the information obtained on its position. Computing energy balances for different feedback protocols has confirmed that the Jarzynski equality requires a generalization that accounts for the amount of information involved in the feedback.

Landauer's principle

In fact one can generalise: any information that has a physical representation must somehow be embedded in the statistical mechanical degrees of freedom of a physical system.

Thus, Rolf Landauer argued in 1961, if one were to imagine starting with those degrees of freedom in a thermalised state, there would be a real reduction in thermodynamic entropy if they were then re-set to a known state. This can only be achieved under information-preserving microscopically deterministic dynamics if the uncertainty is somehow dumped somewhere else, i.e. if the entropy of the environment (or the non information-bearing degrees of freedom) is increased by at least an equivalent amount, as required by the Second Law, by gaining an appropriate quantity of heat: specifically kT ln 2 of heat for every 1 bit of randomness erased.

On the other hand, Landauer argued, there is no thermodynamic objection to a logically reversible operation potentially being achieved in a physically reversible way in the system. It is only logically irreversible operations (for example, the erasing of a bit to a known state, or the merging of two computation paths) which must be accompanied by a corresponding entropy increase. When information is physical, all processing of its representations, i.e. generation, encoding, transmission, decoding and interpretation, is a natural process in which entropy increases through the consumption of free energy.

Applied to the Maxwell's demon/Szilard engine scenario, this suggests that it might be possible to "read" the state of the particle into a computing apparatus with no entropy cost; but only if the apparatus has already been SET into a known state, rather than being in a thermalised state of uncertainty. To SET (or RESET) the apparatus into this state will cost all the entropy that can be saved by knowing the state of Szilard's particle.

Negentropy

Shannon entropy has been related by physicist Léon Brillouin to a concept sometimes called negentropy. In his 1962 book Science and Information Theory, Brillouin described the Negentropy Principle of Information or NPI, the gist of which is that acquiring information about a system’s microstates is associated with a decrease in entropy (work is needed to extract information, and erasure leads to an increase in thermodynamic entropy).

There is no violation of the second law of thermodynamics, according to Brillouin, since a reduction in any local system’s thermodynamic entropy results in an increase in thermodynamic entropy elsewhere. Negentropy was considered controversial because its earlier understanding could yield a Carnot efficiency higher than one.

In 2009, Mahulikar & Herwig redefined thermodynamic negentropy as the specific entropy deficit of the dynamically ordered sub-system relative to its surroundings. This definition enabled the formulation of the Negentropy Principle, which is mathematically shown to follow from the Second Law of Thermodynamics for as long as the order exists.

Black holes

Stephen Hawking often speaks of the thermodynamic entropy of black holes in terms of their information content. Do black holes destroy information? It appears that there are deep relations between the entropy of a black hole and information loss. See Black hole thermodynamics and Black hole information paradox.

Quantum theory

Hirschman showed in 1957 that Heisenberg's uncertainty principle can be expressed as a particular lower bound on the sum of the entropies of the observable probability distributions of a particle's position and momentum, when they are expressed in Planck units. (One could speak of the "joint entropy" of these distributions by considering them independent, but since they are not jointly observable, they cannot be considered as a joint distribution.)
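The entropic form of the uncertainty principle can be checked numerically for the simplest case. The sketch below is an illustration under stated assumptions, not the derivation in the cited work: it assumes units with ħ = 1, entropies measured in nats, a Gaussian wave packet with position spread `sigma_x` and momentum spread ħ/(2·`sigma_x`), and the sharp form of the bound, ln(eπħ), which the text above does not state explicitly. All function names are hypothetical.

```python
import math

# Assumption: units in which the reduced Planck constant equals 1.
HBAR = 1.0


def gaussian_differential_entropy(sigma):
    """Differential entropy (in nats) of a normal density with
    standard deviation `sigma`: 0.5 * ln(2 * pi * e * sigma^2)."""
    if sigma <= 0:
        raise ValueError("standard deviation must be positive")
    return 0.5 * math.log(2.0 * math.pi * math.e * sigma ** 2)


def entropy_sum_gaussian_packet(sigma_x, hbar=HBAR):
    """Sum of position and momentum entropies for a minimum-uncertainty
    Gaussian wave packet, whose momentum spread is hbar / (2 * sigma_x)."""
    sigma_p = hbar / (2.0 * sigma_x)
    return (gaussian_differential_entropy(sigma_x)
            + gaussian_differential_entropy(sigma_p))


def entropic_bound(hbar=HBAR):
    """Assumed sharp lower bound on the entropy sum: ln(e * pi * hbar)."""
    return math.log(math.e * math.pi * hbar)


if __name__ == "__main__":
    for sigma_x in (0.1, 1.0, 7.0):
        total = entropy_sum_gaussian_packet(sigma_x)
        print(f"sigma_x={sigma_x}: H_x + H_p = {total:.6f}, "
              f"bound = {entropic_bound():.6f}")
```

Under these assumptions the Gaussian packet meets the bound with equality for every `sigma_x`: narrowing the position distribution lowers its entropy by exactly the amount by which the momentum entropy rises.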

It is well known that a Shannon-based definition of information entropy leads in the classical case to the Boltzmann entropy. It is tempting to regard the Von Neumann entropy as the corresponding quantum mechanical definition. But the latter is problematic from a quantum information point of view. Consequently Stotland, Pomeransky, Bachmat and Cohen have introduced a new definition of entropy that reflects the inherent uncertainty of quantum mechanical states. This definition makes it possible to distinguish between the minimum uncertainty entropy of pure states and the excess statistical entropy of mixtures.

The fluctuation theorem

The fluctuation theorem provides a mathematical justification of the second law of thermodynamics under these principles, and precisely defines the limitations of the applicability of that law to the microscopic realm of individual particle movements.

Is information quantized?

In 1995, Dr Tim Palmer signalled two unwritten assumptions about Shannon's definition of information that may make it inapplicable as such to quantum mechanics:

- The supposition that there is such a thing as an observable state (for instance the upper face of a die or a coin) before the observation begins

- The fact that knowing this state does not depend on the order in which observations are made (commutativity)

The article Conceptual inadequacy of the Shannon information in quantum measurement, published in 2001 by Anton Zeilinger and Caslav Brukner, synthesized and developed these remarks. The so-called Zeilinger's principle suggests that the quantization observed in QM could be bound to information quantization (one cannot observe less than one bit, and what is not observed is by definition "random"). But these claims remain highly controversial.

See also

- Thermodynamic entropy

- Information entropy

- Thermodynamics

- Statistical mechanics

- Information theory

- Physical information

- Fluctuation theorem

- Black hole entropy

- Black hole information paradox

- Entropy (information theory)

- Entropy (statistical thermodynamics)

- Entropy (order and disorder)

- Orders of magnitude (entropy)

External links

- Entropy is Simple...If You Avoid the Briar Patches. Dismissive of direct link between information-theoretic and thermodynamic entropy.

- Information Processing and Thermodynamic Entropy Stanford Encyclopedia of Philosophy.

- An Intuitive Guide to the Concept of Entropy Arising in Various Sectors of Science - a wikibook on the interpretation of the concept of entropy.